Alex

G-BAGS based controller

The heart of the controller is going to be a graph-based map, built according to G-BAGS (Graph-Based Adaptive Guiding System), which is described in more detail in Winkler's master's thesis. For now, only example source code for user control of the robot is available, along with some descriptions. Author: Zbyněk Winkler.


Overview

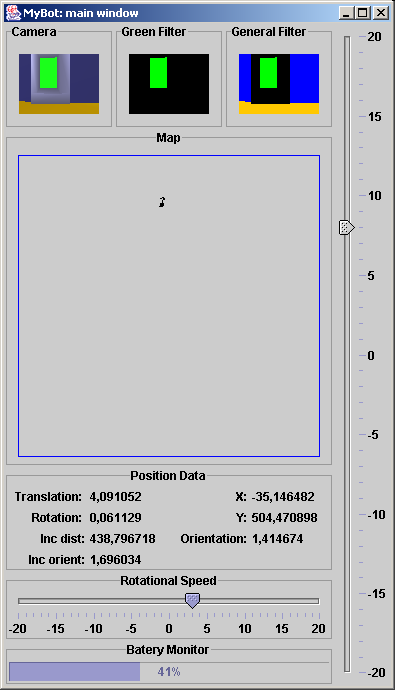

Here you can see a screenshot of the controller. The top of the window shows three different camera pictures. The first is the camera image “as is”. The second has a green filter applied, while the third uses a general filter that distinguishes the green (available) feeder, the blue walls and the yellowish floor.
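
To illustrate what such a general filter might do, here is a minimal sketch that labels a single RGB pixel as feeder, wall or floor. It is not the controller's actual filter: the function name `classify_pixel`, the class labels and the margin of 40 are hypothetical values chosen only for illustration.

```python
def classify_pixel(r, g, b, margin=40):
    """Label one RGB pixel (channels 0-255) with a coarse class.

    The margin is an assumed threshold, not a value from the controller.
    """
    # Green clearly dominates both other channels: available feeder.
    if g > r + margin and g > b + margin:
        return "feeder"
    # Blue clearly dominates: wall.
    if b > r + margin and b > g + margin:
        return "wall"
    # Red and green both clearly above blue: yellowish floor.
    if r > b + margin and g > b + margin:
        return "floor"
    return "unknown"
```

The green test runs before the yellowish test, so a pixel with strong green and moderate red is still counted as feeder rather than floor.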

Under the camera row is a Map panel. In the future this panel will be used to display the robot's current beliefs about the world surrounding it. Right now you can see an odometry-based position. The initial position corresponds to the initial position specified in the alife.wbt file. Subsequent position tracking is based solely on the encoders.
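
Encoder-only position tracking can be sketched with the standard dead-reckoning update for a two-wheeled differential-drive robot. This is an assumption about the general technique, not the controller's actual code: the names `Pose` and `odometry_step`, and the `axle_length` parameter, are hypothetical, and the wheel travel distances are assumed to have been derived from the encoder readings already.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float      # millimeters
    y: float      # millimeters
    theta: float  # radians


def odometry_step(pose, d_left, d_right, axle_length):
    """Return the pose after one step, given the distance (mm) each wheel
    travelled during that step and the distance between the wheels (mm)."""
    translation = (d_left + d_right) / 2.0
    rotation = (d_right - d_left) / axle_length
    # Use the heading at the middle of the step as an approximation
    # of the direction travelled during the step.
    heading = pose.theta + rotation / 2.0
    return Pose(
        pose.x + translation * math.cos(heading),
        pose.y + translation * math.sin(heading),
        pose.theta + rotation,
    )
```

Starting from the initial pose and calling `odometry_step` once per simulation step gives a position estimate whose error grows with the distance travelled, since nothing corrects the accumulated encoder error.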

A panel entitled Position Data contains information about the robot's movement. The Translation and Rotation fields correspond to the change in position during the last simulation step (64 ms). The fields Inc dist and Inc orient are the sums of the absolute values of the respective quantities over the whole time period. They give a rough estimate of the error we can expect in the calculated position and orientation displayed in the fields X, Y and Orientation. When you compare these values with the actual values in Webots (tree view), you will find that the x-axis is flipped and so is the orientation. Position and distance are measured in millimeters and angles in radians.
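
The relationship between the per-step fields and the accumulated ones can be sketched as follows. The class `PositionData` and its attribute names are hypothetical and only mirror the panel labels; the controller's own data structures may look different.

```python
from dataclasses import dataclass


@dataclass
class PositionData:
    translation: float = 0.0  # mm, change during the last step
    rotation: float = 0.0     # rad, change during the last step
    inc_dist: float = 0.0     # mm, sum of |translation| over all steps
    inc_orient: float = 0.0   # rad, sum of |rotation| over all steps

    def update(self, translation, rotation):
        """Record one simulation step and accumulate the absolute values."""
        self.translation = translation
        self.rotation = rotation
        self.inc_dist += abs(translation)
        self.inc_orient += abs(rotation)
```

Because absolute values are summed, driving 10 mm forward and then 10 mm back leaves the net displacement at zero but raises `inc_dist` to 20 mm, which is why these sums can serve as a rough indicator of accumulated odometry error.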

To control the robot's movement you can use the two sliders. The vertical slider controls the translational portion of the speed and the horizontal slider the angular portion. The units are those used in Webots. A single step corresponds to 0.1 rad/s. The angular slider always adds its value to one of the wheels and subtracts it from the other.
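
The mixing of the two sliders into wheel speeds can be sketched like this. Two points are assumptions: that the 0.1 rad/s step applies to both sliders, and that the angular value is added to the left wheel and subtracted from the right (the text does not say which wheel is which). The function name `wheel_speeds` is hypothetical.

```python
STEP = 0.1  # rad/s per slider step, as stated in the text


def wheel_speeds(translational_steps, angular_steps, step=STEP):
    """Convert slider positions (in steps) to (left, right) wheel speeds in rad/s."""
    base = translational_steps * step
    turn = angular_steps * step
    # Assumed sign convention: add the turn to the left wheel,
    # subtract it from the right wheel.
    left = base + turn
    right = base - turn
    return left, right
```

With the translational slider at zero, the wheels turn in opposite directions at equal speed, so the robot rotates on the spot.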

Finally, the battery monitor shows how much energy the robot has left.

Future Development

I decided first to build a controller that would let me familiarise myself with Webots development. To build a controller that is not too complicated but still makes efficient use of all available information, I needed to know exactly what information is available. That is the reason for building such a hand-driven controller. It allows the user (me) to drive to wherever I think the conditions are right for testing the current camera filters, the reliability of the odometry data, etc.

The next step is going to be map building, still with a hand-driven robot. Once I know how accurate a map the robot can build, I will decide on a proper algorithm for its navigation. The possibilities under consideration are:

- No suitable map can be built (I doubt it, but I might just not be smart enough, or there might not be enough processing time): I will resort to a skill-based approach similar to the one used in Wolf.

- A good enough map can be built: I will use the map with the navigation algorithms described in more detail in my master's thesis (available in Czech only).

Information about further progress will always be available here, along with an announcement in the news section on the title page. The source code of the latest version of the controller will probably not be available (in order to retain a certain advantage in the competition). However, a description of the algorithms used will be (along with some older versions of the controller).

| version | date | source | description |
|---|---|---|---|
| 1 | 2002-12-07 | alex-1 | graphical interface for driving the robot by hand and inspecting the sensor data |

If you have any questions regarding the controller or this site in general, we would be more than happy to answer them. Feel free to use our feedback form.