Field Robot 2010

Maize silver travels to the Czech Republic

This year's cooperation with the Czech University of Life Sciences Prague was surely worth it. Overall second place for Eduro Team and four maize "cups" shared with another Czech team, Cogito MART, hopefully speak for themselves. In this article you can learn what hardware, software and strategy we used this year …

We attended Field Robot Event 2010 with two teams: Eduro Team, under the patronage of the Czech University of Life Sciences Prague, Department of Agricultural Machines, and Cogito MART, a cooperation of the Faculty of Mathematics and Physics, Charles University, Department of Software Engineering, and the Czech University of Life Sciences Prague, Department of Agricultural Machines. First, it is easier to "pull the same end of the rope", and that way we could make better use of shared resources such as the maize test field or the minibus for traveling. Second, there is the proven effect of rivalry, which drives the development of both teams forward. In reality both teams were quite interconnected; for example, the sprayer was shared.

- Eduro Team FRE2010
  - Milan Kroulík: the boss, overall support, preparation of the test maize field, sprayer
  - Jan Roubíček: mechanics
  - Tomáš Roubíček: electronics
  - Martin Dlouhý: software
- Cogito MART
  - Jiří Iša: software and hardware
  - Stanislav Petrásek: maintenance, support

Rules

The teams competed in five categories, most of which were the same as last year:

Basic Task

The first task was to travel systematically through rows of maize. The robot had to be fully autonomous and had to turn correctly at the end of each row into the next one. Points were awarded for distance traveled (1 meter = 1 point) within a time limit of three minutes. There was a penalty of 1 point for every damaged plant, 5 points for an operator touching the robot, and 2 points for helping the robot turn at the end of a row. The rows were deliberately slightly curved and the emphasis was on precision and speed. Turning at the end of the row was not that important in this task.
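The scoring rule can be written down as a small function. This is only a sketch of the rules as summarized in this article; the function and parameter names are ours for illustration, not the organizers'.

```python
def basic_task_score(meters, damaged_plants=0, touches=0, turn_helps=0):
    """Basic Task score as summarized in this article.

    1 point per meter traveled, minus 1 per damaged plant,
    minus 5 per operator touch, minus 2 per assisted turn.
    """
    return meters - damaged_plants - 5 * touches - 2 * turn_helps


# Hypothetical run: 75 m with one touch and one assisted turn.
print(basic_task_score(75, touches=1, turn_helps=1))  # 68
```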

Advanced Task

For the second task each team received a route code (this year it was 2R-1L-2R-3L-4L-0(U-Turn)-2R-4R-1L) and the robot had to follow this pattern when entering rows, i.e. first turn into the second row on the right, then the first on the left, then the second on the right again, … Here the penalty for turning the robot by hand at the end of a row was quite high (8 points). Apart from this exception, the scoring scheme was the same as in the first task.
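A route code like this maps naturally to a list of signed row offsets. The sketch below is one possible way to parse it; `parse_route` is a hypothetical helper, not part of our contest code. The sign convention (right positive, left negative, 0 for the U-turn) matches the list passed to `contest.ver2` in the Software section.

```python
import re

# One token is a row count followed by R, L or the literal "(U-Turn)".
TOKEN = re.compile(r"(\d+)(R|L|\(U-Turn\))")


def parse_route(code):
    """Convert e.g. '2R-1L-0(U-Turn)' into signed offsets [2, -1, 0]."""
    steps = []
    for count, kind in TOKEN.findall(code):
        n = int(count)
        if kind == "L":
            n = -n  # left turns are negative
        steps.append(n)
    return steps


print(parse_route("2R-1L-2R-3L-4L-0(U-Turn)-2R-4R-1L"))
# [2, -1, 2, -3, -4, 0, 2, 4, -1]
```

A regular expression is used instead of splitting on "-" because the U-turn token itself contains a hyphen.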

Professional Task

This task was strangely split into two subtasks: in the first one we were supposed to demonstrate how our spraying device works, and in the second one the ability to detect weed. The weed was simulated by artificial daisy flowers on plastic squares of approx. 7x7 cm. Unfortunately the rules were a little confusing and it was not clear that __only__ squares with white flowers should be sprayed.

Cooperative Challenge

Here the task was to demonstrate the cooperation of several robots. The score given by the evaluating committee was then multiplied by a factor reflecting how far apart the teams are: same school 1x, same country 1.5x, different countries 2x.

Freestyle

The last task was freestyle, where the robots were supposed to show anything at least partially related to agriculture. The scoring was completely in the hands of the committee.

Reality

After arriving in Braunschweig, Germany, all of us were a little shocked. What we saw was incomparable with the luxurious little field that Milan had prepared for us on the grounds of ČZU in Suchdol, Prague. There were so many weeds that you could hardly see any maize, and the organizers "handled" its poor growth with tons of green wooden sticks. While we were trying to recover from the first shock, Milan and Standa took over, found some tools and within a few minutes prepared a new space for testing.

Field after arrival ...

... and after Milan's and Standa's adaptation

Before our departure from Prague the maize leaves were quite easy to distinguish, so the camera was the best sensor. In Germany, on the other hand, the camera was almost useless. The sticks were narrow and their color was rather gray, so the laser range finder gave results that were an order of magnitude better.

And the weather? Nice on Friday, and rain (at times heavy) on Saturday, the day of the contest. The robots could happily enjoy the mud. On Sunday the sun was back again …

Course of the contest and results

Robot Eduro Maxi HD drove quite nicely, and even though it made several mistakes, it was rewarded with applause. At the six-wheeled UTrooper, on the other hand, the committee just shook their heads: the machine twisted as if in death cramps, and without Standa's regular maintenance it would surely have lost all its wheels. It is a machine we would throw at the head of its manufacturer, from a height if possible. It was really terrible :-(.

The beginning was quite full of adrenaline: a few minutes before the start it was not clear whether the robot should turn left or right at the end of the first row. The organizers changed it several times, the first team ran the course twice, and the final decision was that everybody could choose. In the program the only difference was whether the robot got the sequence -1,1,-1,1,… or 1,-1,1,-1,…, but the stress was surely unnecessary.

Eduro ran nicely without a touch, but when turning at the 4th row it did not handle a larger chunk well. Up to this point it had gone 45 meters without a touch, but there was still enough time, so the judges talked us into a manual intervention and a restart from the 4th row. We knew that the laser takes 20 s to start and that the robot would enter the wrong row at the end, but why not … at least it was a show for the spectators. In the remaining time the robot traveled two more rows, and the wrong turn was counted as a second touch. In total something like 75-5-2 points (we do not know the exact results yet).

The second task was more interesting. It started to rain, so everybody began to pack up their water-sensitive robots (ours is not waterproof either, unlike last year's Explorer). Here it was quite clear what the robot should do at the end of each row, so paradoxically it was calmer. We had practiced moving to the next row as well as skipping one row, so at least the beginning of the route code suited us. Its repetition was good too: the robot was manually stopped on the 3rd pass, but this time the next command was the same as the first one, turn into the second row on the right.

Due to the slow restart the robot managed only the turn and 1/3 of the new row, so in total 50 meters with one touch, which we guess means 45 points.

The last task, detection and spraying of weed, was the most confusing. Even now we are not quite sure what we were supposed to demonstrate and what the points and penalties were for. Moreover, we shared the spraying device, so a non-trivial service time was needed to unscrew it and reassemble the carriage. Finally, there was an obstacle in the row that should not be touched (some other team destroyed it, so the organizers probably did not insist on it, I do not know … we did not see it in the following rounds).

From the programmer's point of view Maxík exceeded expectations. The offset between weed detection in the camera image and the point where the spraying device should start was approximately 75 cm. But within that distance another weed could be detected, on the other side or on the same side. In short, at 12:30 the organizers took our robots, and we had to blindly implement these "time-delayed commands" during the speeches of ministers, sponsors and organizers. And without any test! And it could have ruined even the first two tasks … well, I would not like to do it again; luck was on our side. Actually not completely. The robot detected the centers of the weeds with high precision, with hooting siren, blinking LEDs and spraying so hard that you could see holes in the ground. After it passed the first row the judge cried: "STOP, STOP, STOP". He said something to the effect that we were not supposed to spray the green squares without a daisy, and asked whether we were able to change it. At that time I considered him a fool, but now I think we could have tried something like verifying the presence of white in the places where we found the green patches. It could __maybe__ work. Oh well. The robot sprayed the green squares perfectly, but the points were probably not good. From the log file we can read that the robot traveled five rows without a touch and sprayed maybe 100 times, but it was not appreciated. What makes me happy is that UTrooper/Cogito MART scored in this task.

Freestyle and robot cooperation

Eduro Team and Cogito MART also participated in the freestyle and planned to demonstrate robot cooperation.

In the freestyle, robot Eduro Maxi was supposed to be connected to a VTU10 tracking unit lent by the company MapFactor. This device is usually used for tracking vehicles, but it has an internal battery with sufficient capacity, so it can also track things like persons or parcels where no power source is available. Besides the current position, the VTU10 can also carry short messages between driver and dispatcher. We used this feature for sending commands from a mobile phone via the tracking unit to the robot. We could choose a destination on satellite images and the coordinates were sent to the device. In the ideal case the robot would set off towards the entered goal within a few seconds (with the possibility of regular tracking), and after reaching the goal it would report it by sending a message.

I write this in the conditional because at the time of the presentation not everything was ready. The main problem was a misinterpretation of message IDs, which should be unique over the lifetime of the tracking unit. This makes it possible to track the history of messages, whether they were received, etc. Our program for the PDA generated the same IDs in each run, so the server refused to accept them. The demo works now and you may see it at the RoboOrienteering contest in Rychnov nad Kněžnou, Czech Republic.

We had two VTU10 devices. The second one served as a backup and we wanted to use it for the demo of robot cooperation. The first robot only carried the tracking unit (it could actually even be a human with a VTU10 in the pocket), but its position was tracked and sent to the server. The second robot was then supposed to follow the first one without needing to see it …

I would like to thank the company MapFactor for lending two VTU10 tracking units and the company T-Mobile for lending three SIM cards with data roaming enabled in Germany.

Eduro Team

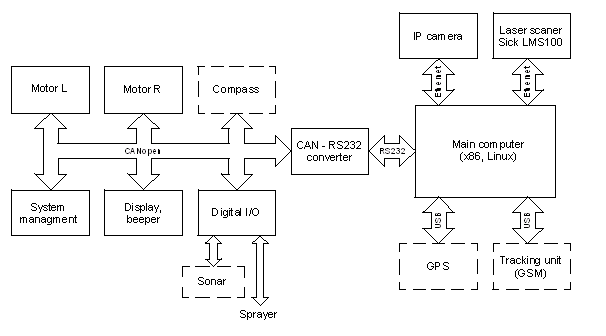

Hardware

Eduro Maxi HD is a prototype of a three-wheeled outdoor robot with a differential drive. It is a modular robotic platform for education, research and contests. It weighs about 15 kg and measures 38x60x56 cm. The HD version uses SMAC (Stepper Motor – Adaptive Control) drives with belt transmission. Power is supplied by two 12V/8Ah Pb batteries. The brain of the robot is a single board computer with an AMD Geode CPU, 256 MB RAM, compact flash card, wi-fi, 3 Ethernet, 1 RS232 and 2 USB ports. The RS232 port is used for connection to the CAN bus via an RS232-CAN converter.

Most of the sensors, actuators and other modules (display, beeper, power management etc.) are connected to the CAN bus, which forms the backbone of the robot. Sensors that need a higher data rate are connected directly to the PC via an Ethernet interface. Two main sensors were used for the Field Robot Event. Weed detection was provided by an IP camera with a fish-eye lens. For obstacle detection, the SICK LMS100 laser range finder was used. The robot is further equipped with sonar, GPS and a compass, but these sensors were not used in the main tasks during the Field Robot Event.

Hardware structure

The sprayer

The robot carried a spraying system on its back. The spraying system had been designed as a model of a real agricultural machine and was shared with team Cogito MART. The sprayer is an independent module with its own battery and a simple interface (3-pin connector: ground, left, right). Besides spraying it blinks and beeps.

Software

The main program runs on an x86 single board computer with the Linux operating system and the Xenomai real-time extension. Xenomai is used to decrease serial port interrupt latency and to improve communication reliability.

Low-level motor control is provided by microcontrollers in the motor modules. The main computer only sends the desired speed to the units via the CAN bus.

The high-level software is written in Python. It uses image processing routines written in C/C++ with the help of the OpenCV library. It turned out that all three tasks could be unified in one code:

    contest = FieldRobot( robot, verbose )
    contest.ver2([-1,1]*10)                # Task1
    contest.ver2([2,-1,2,-3,-4,0,2,4,-1])  # Task2
    contest.ver2([1,-1]*10)                # Task3

A sharp-eyed spectator may have noticed that in the second task (Advanced Task) the robot sprayed something that looked like a weed.

The code is relatively compact: approx. 300 lines including logging, prints and sprayer-specific routines.

Row navigation

Navigation in the row was originally handled by the camera. On the test field with grown maize in Prague, the camera was much more successful than the laser scanner pointing down.

The camera row navigation was based on color segmentation (green/white), where a pixel was considered green if, in RGB color space, G > 1.05*R && G > 1.0*B. The image was processed from a dynamic center to the left and to the right until the sum of green pixels exceeded a given threshold. For an example of row detection see the figure.
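The thresholding and outward scan described above can be sketched in plain Python. This is a simplified illustration, not our contest code (which used C/C++ routines with OpenCV): the helper names, the per-column counting and the fallback to the image borders are all assumptions.

```python
def is_green(r, g, b, kr=1.05, kb=1.0):
    """Green test from the article: G > 1.05*R and G > 1.0*B."""
    return g > kr * r and g > kb * b


def column_green_counts(image):
    """Count green pixels per column; image is a list of rows of (r, g, b)."""
    counts = [0] * len(image[0])
    for row in image:
        for x, (r, g, b) in enumerate(row):
            if is_green(r, g, b):
                counts[x] += 1
    return counts


def find_row_borders(counts, center, threshold):
    """Scan outward from center until accumulated green exceeds threshold.

    Falls back to the image borders if no plant wall is found (assumption).
    """
    total, left = 0, 0
    for x in range(center, -1, -1):
        total += counts[x]
        if total > threshold:
            left = x
            break
    total, right = 0, len(counts) - 1
    for x in range(center, len(counts)):
        total += counts[x]
        if total > threshold:
            right = x
            break
    return left, right


# Tiny synthetic image: soil everywhere, plants in columns 1 and 7.
soil, leaf = (120, 100, 80), (60, 140, 50)
row = [soil, leaf, soil, soil, soil, soil, soil, leaf, soil]
counts = column_green_counts([row, row])
left, right = find_row_borders(counts, center=3, threshold=1)
print((left + right) // 2)  # 4, the new "dynamic center"
```

Passing `kr=1.3, kb=1.3` to `is_green` gives the stricter "bright green" test used for weed detection.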

Weed detection

For weed detection a similar algorithm was used. The only difference was the definition of bright green: (G > 1.3*R && G > 1.3*B). The weed was detected in front of the robot, so the spraying had to be delayed by the time the robot needs to travel approximately 75 cm. The implementation used an extra task queue with a time offset.
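A task queue with a time offset might look like the sketch below. It assumes a constant driving speed so that the 75 cm offset converts to a fixed delay; the class and method names are hypothetical and the original implementation may differ.

```python
from collections import deque

SPRAY_OFFSET_M = 0.75  # camera-to-nozzle offset mentioned in the article


class DelayedSprayQueue:
    """Queue spray commands and release them after a fixed delay."""

    def __init__(self, speed_mps):
        # Assumption: constant speed, so distance maps to a fixed time delay.
        self.delay = SPRAY_OFFSET_M / speed_mps
        self.pending = deque()  # (due_time, side), ordered by detection time

    def weed_detected(self, now, side):
        self.pending.append((now + self.delay, side))

    def due(self, now):
        """Return the sides whose spray time has arrived and remove them."""
        fired = []
        while self.pending and self.pending[0][0] <= now:
            fired.append(self.pending.popleft()[1])
        return fired


queue = DelayedSprayQueue(speed_mps=0.5)   # 1.5 s delay
queue.weed_detected(now=0.0, side="left")
queue.weed_detected(now=0.5, side="right")  # second weed before first spray
print(queue.due(1.0))  # []
print(queue.due(1.5))  # ['left']
print(queue.due(2.0))  # ['right']
```

Because every command gets the same delay, the queue stays ordered by due time and a plain FIFO is enough; several weeds can be pending at once, on either side.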

Laser row navigation

In Braunschweig we used laser navigation instead of the camera. The organizers decided to make up for the missing maize with many green wooden sticks, approx. 30 cm long, which the laser detected reliably.

The sensor was originally pointed 10 degrees down to detect the small maize, 15 cm tall, in Prague. This way it could see only to a distance of 1.7 m, but we left it that way to avoid losing track on uneven terrain.

The input was 541 measurements with a half-degree step. A simple algorithm searched for the center of the row: any measurement shorter than 1 m was considered an obstacle. For end-of-row detection we also used a simple rule: the row ended when the free space opened wider than 85 degrees on both sides.
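The two rules can be sketched as follows. The constants come from the text; everything else (function names, growing the free gap outward from the previous center, taking the midpoint as the new center) is our assumption for illustration, and which side of the scan is physically left depends on how the sensor is mounted.

```python
STEP_DEG = 0.5          # angular step between measurements
OBSTACLE_M = 1.0        # readings shorter than this count as obstacles
END_OF_ROW_DEG = 85.0   # free space needed on both sides to end the row


def free_gap(scan, center):
    """Grow the obstacle-free index range outward from `center`."""
    low = center
    while low > 0 and scan[low - 1] >= OBSTACLE_M:
        low -= 1
    high = center
    while high < len(scan) - 1 and scan[high + 1] >= OBSTACLE_M:
        high += 1
    return low, high


def next_center(scan, center):
    """Return the new center index, or None at the end of the row."""
    low, high = free_gap(scan, center)
    low_deg = (center - low) * STEP_DEG
    high_deg = (high - center) * STEP_DEG
    if low_deg > END_OF_ROW_DEG and high_deg > END_OF_ROW_DEG:
        return None  # free space wider than 85 degrees on both sides
    return (low + high) // 2


# Synthetic 541-point scan: plant walls at 0.5 m, free corridor in between.
in_row = [0.5] * 230 + [5.0] * 101 + [0.5] * 210
print(next_center(in_row, 270))        # 280, shifted towards the gap middle
print(next_center([5.0] * 541, 270))   # None, nothing nearby: end of row
```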

Debugging of the laser navigation algorithm slightly reminded us of ASCII art from the computer stone age:

    RESET ROW (0.00, 0.00, 0.0)
    ===== ACTION 1 =====
    ' xx xxxxxxxx x x C xxx x xx xx x x '
    ' xx xxxxxxxx x x C xxx x xx xx xxx '
    ' x xxxxxxxx x x C xxx x xxx xx x xx '
    ' x xxxxxxxxx xxx C xxx x xx x x x '
    logs/cam100612_165004_000.jpg
    ' x xxxxxx xx xxxx C xxx xx xx xx xx '
    ' x xxxxxx x x xx C xxx x xxx x x xx '
    ' x xxxxxxxx x xx C xxx x xxx xxxx x '
    ' xx xxxxxxxx x xxx C xxx x xx x xx '
    ' x xxxxxxx xx xx C xx x xx xx x '
    logs/cam100612_165004_001.jpg
    ' x xxxxxxx x xxx C xx x xx x '
    'x xxxxxxxx x xxx C xxxx x xx x '
    ' xxxxxxxxxx x xxx C xxxx x xxx xx x '
    ' xxxxxxxxxxx xx C xxxx x xxx x x '
    logs/cam100612_165005_002.jpg
    ' xxxxxxxxxxx x C xx x x xxxxx xx xx'
    OFF 7 7 14
    ' x xxxxxxxxx xx x C x x xxxxx xx x'
    ' xxxxxxxxxx xx x C x x xx xxxx x '
    ' xxx xx xx xx xxx C xxxx xx xxxx xx'
    ' xxx xxx x x x C xxx x x xxxxx x'

If you are interested in what is what, then 'x' is an obstacle within a 5 degree interval, 'C' is the shifting center where the robot should go, and the file names are reference images from the camera.
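A debug line of this kind can be produced with a few lines of Python. The sketch below is a reconstruction under assumptions, not the original logging code: `render_scan` is a hypothetical name, each character covers ten half-degree readings, and the final 541st reading is ignored for simplicity.

```python
def render_scan(scan, center, step_deg=0.5, bin_deg=5, obstacle_m=1.0):
    """Render a laser scan as one text line: 'x' obstacle, 'C' center."""
    per_bin = int(bin_deg / step_deg)  # 10 readings per character
    chars = []
    for start in range(0, len(scan) - 1, per_bin):
        chunk = scan[start:start + per_bin]
        # A bin is marked if any reading in it is closer than the limit.
        chars.append("x" if min(chunk) < obstacle_m else " ")
    chars[min(center // per_bin, len(chars) - 1)] = "C"
    return "".join(chars)


# Synthetic scan: plant walls at 0.5 m on both sides of a free corridor.
scan = [0.5] * 230 + [5.0] * 101 + [0.5] * 210
print(repr(render_scan(scan, center=280)))
```

For a 541-point scan this yields a 54-character line, one character per 5 degrees.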

Testing, testing, testing …

In closing I would stress what it is all about … it is about non-stop testing of how far you have got and how reliable the algorithms are. Are you saying that you do not have a maize field? It does not matter: have a look at the "weed field" adapted for navigation (Jirka and Honza, good work!).

Conclusion

We can recommend the Field Robot Event contest to anybody who is interested in robotics and who has anything in common with agriculture. Yes, the Czech University of Life Sciences Prague, Department of Agricultural Machines, matches these criteria perfectly. Creating a successful team is not easy (this year it was perfect!), so if you find this contest interesting and you are, for example, a student of ČZU, let us know and we may take you on board for FRE2011. FRE2011 will take place on 2nd July 2011 in Herning, Denmark.

And maybe a little curiosity at the end. Do you want to know the ranking of Eduro Team in the Professional Task? Eduro Team won the Professional Task! There was a mistake in the Excel sheet, so the prize went to Optimizer, which was supposed to be in 7th place. Such details aside, I must admit that the contest is quite demanding to organize, and major improvements were visible compared to last year. Please keep it that way and thank you for a nice contest.