Robot Challenge 2014

Let's beat the human race in AirRace!

Robot Challenge is a well-established competition with many categories and many participating robots. One of them is AirRace: flying figure eights in an area protected by nets. For the first two years there was also a semi-autonomous category, where the best competitor, Flying Dutchman, reached 26 rounds. Now it is time to beat his score with an autonomous drone! Blog update (11/4): Conclusion

Note that this is not just an "English translation" of the Czech article. It seems that the fundraising campaign failed (well, there is still a week to go, but I doubt it will jump to the required limit), so I decided to "log" my progress, at least in English. Let me know if you find this interesting.

We are playing with two robots: Heidi and Isabelle. Both are Parrot AR Drone 2.0.

The source code is available on GitHub: https://github.com/robotika/heidi

Here are some pictures from the older Czech blog:

Links:

- Contest website: http://www.robotchallenge.org/

Blog

February 10th, 2014 - cv2

Yesterday I was fighting with OpenCV for Python, which seems to be just the perfect tool to help us reach the goal. My problem was that I followed only the Python setup, and it kind of worked (it loaded and displayed an image, for example). But as soon as I needed to parse video with the H264 codec, I was doomed :-(. There are many comments on the web saying that the integration with ffmpeg is complicated, but there were already some DLLs prepared?!

Well, next time start with the basic OpenCV installation and only then the Python part. The only critical detail is that you have to add the binaries, the ffmpeg DLLs in particular, to the system path … yeah, quite obvious, I know.

February 10th, 2014 - PaVE and cv2.VideoCapture

cv2.VideoCapture is a nice function for accessing a video stream. Thanks to the integrated FFMPEG you can read videos with the H264 codec, which is what the Parrot AR Drone 2.0 uses. The bad news is that it is not a clean H264 stream: there are also PaVE (Parrot Video Encapsulation) headers.

Does it matter? Well, it does. Yesterday I saw the Linux implementation crash a couple of times, and on Windows you get several warnings like:

    [h264 @ 03adc9a0] slice type too large (32) at 0 45
    [h264 @ 03adc9a0] decode_slice_header error

The reason is that the H264 start-code bytes 00 00 00 01 are quite common in the PaVE header, and then the decoder gets confused. Note that most projects ignore this. It is OK if you just need "some" images, but once the decoder is confused, you lose up to 1 second of the video stream until an I-frame is successfully found.

So how to work around it? I had two ideas, both crazy and ugly. One is to create a "TCP proxy", which would read images from the AR Drone 2.0 and offer them to OpenCV via a TCP socket. The other possibility is a temporary file, where the ret value is sometimes False, but you can replay the video in parallel:

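    import cv2
    # filename: the temporary file being filled with the video stream
    cap = cv2.VideoCapture(filename)
    while cap.isOpened():
        ret, frame = cap.read()  # ret is sometimes False while the file is still growing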

There are probably more complex solutions, like controlling the FFMPEG API directly, but it would be a pity to lose this otherwise nice and clean code. Any ideas?
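One more idea, not tried in this post: strip the PaVE headers before the data reaches the decoder, so that only the raw H264 payload is written to the file or socket. The sketch assumes the header layout from Parrot's SDK (`parrot_video_encapsulation_t`): the 4-byte signature `PaVE`, then little-endian `version` (uint8), `video_codec` (uint8), `header_size` (uint16) and `payload_size` (uint32). The rest of the header is skipped using `header_size`. The function names are hypothetical:

```python
import struct

PAVE_SIGNATURE = b"PaVE"
# signature, version, video_codec, header_size, payload_size (little-endian)
PAVE_PREFIX = struct.Struct("<4sBBHI")


def split_pave(data):
    """Split a buffer that starts at a PaVE packet boundary into payloads.

    Returns (payloads, rest): the H264 payloads of all complete packets and
    the unconsumed tail, to be prepended to the next chunk from the socket.
    """
    payloads, pos = [], 0
    while pos + PAVE_PREFIX.size <= len(data):
        sig, _version, _codec, header_size, payload_size = PAVE_PREFIX.unpack_from(data, pos)
        if sig != PAVE_SIGNATURE:
            raise ValueError("lost PaVE synchronisation at offset %d" % pos)
        end = pos + header_size + payload_size
        if end > len(data):
            break  # incomplete packet, wait for more data
        payloads.append(data[pos + header_size:end])
        pos = end
    return payloads, data[pos:]


def make_pave_packet(payload, header_size=64):
    """Build a minimal packet for testing (version/codec values are arbitrary)."""
    prefix = PAVE_PREFIX.pack(PAVE_SIGNATURE, 2, 4, header_size, len(payload))
    return prefix + b"\x00" * (header_size - PAVE_PREFIX.size) + payload
```

Joining the payloads with `b"".join(payloads)` gives a plain H264 elementary stream without any PaVE bytes that could confuse the decoder.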

February 18th, 2014 - I-Frames only

Well, cv2 is still winning. I tried both the temporary file and the TCP proxy. It took a bit too long to start a simple server (several seconds), which is not acceptable for "real-time video processing". The temporary file looked more promising, but on Linux it was completely unusable. Hacking around cv2.VideoCapture is maybe not the best idea, and a proper solution with buffered frame-by-frame decoding would be the right way, but …

Today's fallback was to use I-frames only. It does not work if you create a video from I-frames only, but you can create a new file, write a single I-frame into it, and then read it with cv2.VideoCapture. I know, crazy. But it works for now, and hopefully in the meantime we will find a more effective solution.

For details see the source diff.
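The one-frame-per-file trick can be sketched as below. The reader is passed in as a parameter so that `cv2.VideoCapture` can be plugged in via the small adapter; the helper names and the `.bin` suffix are assumptions, not taken from the repository:

```python
import os
import tempfile


def capture_reader(open_capture):
    """Adapt a VideoCapture-like factory (e.g. cv2.VideoCapture) to a
    function path -> image, returning None when no frame was decoded."""
    def read(path):
        cap = open_capture(path)
        try:
            ok, image = cap.read()
        finally:
            cap.release()
        return image if ok else None
    return read


def decode_single_frame(frame_bytes, read_image, suffix=".bin"):
    """Write one encoded I-frame to a fresh temporary file, decode it with
    read_image(path), and delete the file again."""
    fd, path = tempfile.mkstemp(suffix=suffix)
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(frame_bytes)
        return read_image(path)
    finally:
        os.remove(path)
```

Usage with OpenCV would be `decode_single_frame(iframe_payload, capture_reader(cv2.VideoCapture))`, called once per received I-frame.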

Heidi now has new flying code, airrace_drone.py. It is glued together so that it does not interfere with the drone Isabelle. The simple processFrame is in airrace.py.

It does adaptive thresholding and contour detection, and for contours of reasonable size it computes the bounding rectangle (with rotation).

February 19th, 2014 - Rotated rectangles

Writing a robo-blog in English is not as much fun as in Czech. First, my English is much worse, and second, I do not have a clue whether anybody is reading it. That's something Fandorama was good for.

I got clear feedback on how many people are interested so much that they would even support it. And almost nobody was interested in this blog in Czech, so … you get the picture. If you find a bit of information useful, let me know. It helps to brighten up my otherwise depressed spirit.

What I want to do now is review the data logged yesterday. The cv2 logs are plain text files with the results of the image analysis plus the number of updates between them. The last program was supposed to turn the drone based on the third parameter (the angle) of the first rectangle. It was just a quick hack, so that I could see that there is some feedback going on and that I can replay already recorded data with an exact match.

    NAVI-ON
    -26.5650501251
    -15.945394516
    -45.0
    -88.9583740234
    NAVI-OFF

This is the console output: the angles used for rotation. My first TODO is to add video timestamps there.

    182
    [((817.0, 597.0), (10.733125686645508, 12.521980285644531), -26.56505012512207), ((223.17218017578125, 440.579833984375), (319.85919189453125, 60.09449768066406), -88.75463104248047)]
    198
    [((298.0, 508.0), (58.51559829711914, 319.50067138671875), -15.945394515991211)]
    198
    [((982.2500610351562, 492.25), (29.698482513427734, 17.677669525146484), -45.0), ((951.9046020507812, 507.705078125), (60.70906448364258, 84.2083511352539), -69.02650451660156)]
    185
    [((741.7918090820312, 247.95079040527344), (43.32011032104492, 72.9879379272461), -88.9583740234375), ((1035.0001220703125, 14.999971389770508), (16.970561981201172, 12.727922439575195), -45.0)]
    199

My second TODO is to round/truncate the floating-point numbers to integers. That would be easier to read and remember. My goal is to turn left/right, i.e. something like checking whether the mass center is bigger or smaller than width/2 …

    182
    [((817, 597), (10, 12), -26), ((223, 440), (319, 60), -88)]
    198
    [((298, 508), (58, 319), -15)]
    198
    [((982, 492), (29, 17), -45), ((951, 507), (60, 84), -69)]
    185
    [((741, 247), (43, 72), -88), ((1035, 14), (16, 12), -45)]
    199
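The integer version matches plain truncation toward zero (for example -26.56 becomes -26, not -27). A small helper producing it from the `((x, y), (width, height), angle)` tuples could look like this; the function names are hypothetical:

```python
def truncate_rect(rect):
    """Truncate ((x, y), (w, h), angle) floats toward zero, as int() does."""
    (x, y), (w, h), angle = rect
    return ((int(x), int(y)), (int(w), int(h)), int(angle))


def truncate_all(rects):
    """Apply truncate_rect to one logged list of rectangles."""
    return [truncate_rect(r) for r in rects]
```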

Better? I think so. Now I would almost guess that the format is ((x, y), (width, height), rotation), but the API is so simple that it is worth testing:

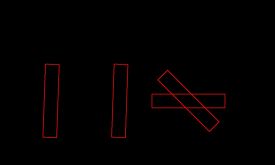

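    import cv2
    import numpy as np
    frame = np.zeros( (1200,720), np.uint8)  # shape is (rows, cols), one channel only
    rect = ((223, 440), (319, 60), -88)
    box = cv2.cv.BoxPoints(rect)  # OpenCV 2.4 name; cv2.boxPoints() in OpenCV 3.x
    box = np.int0(box)
    # color is (B, G, R); on a single-channel image only the first value (0) is used
    cv2.drawContours( frame,[box],0,(0,0,255),2)
    cv2.imshow('image', frame)
    cv2.imwrite( "tmp.jpg", frame )
    cv2.waitKey(0)
    cv2.destroyAllWindows()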

Well, you probably know how to make it simpler, but this is a whole working program, which displays the image and saves it as a reference. The width/height of the image are swapped and I do not see anything there :-(, so it is not that great.

In general I would recommend the OpenCV-Python Tutorials. Note that they are for the non-existing (or, better said, not so easily available) version 3.0, and some cv2.cv.* functions are renamed (see the notes).

So how to fix the program? Back to the Drawing Functions example. Great!

    # Create a black image
    img = np.zeros((512,512,3), np.uint8)

so my fix is:

    frame = np.zeros( (720,1200,3), np.uint8)  # 720 rows (height) x 1200 columns (width), 3 color channels

and now I see red rectangle. With modification rect = ((223+300, 440), (319,

60), -88) and rect = ((223+600, 440), (319, 60), 0) I see that first

coordinate is really (x,y), then (width,height) and finally angle in degrees,

where Y-coordinate is pointing down … maybe I was too fast going to

conclusion. One more test rect = ((223+600, 440), (319, 60), 45), so (x,y)

is the center of rectangle! And now is time to

read

the manual . Hmm … The function used is cv2.minAreaRect(). It returns

a Box2D structure which contains following detals - ( top-left corner(x,y),

(width, height), angle of rotation ). Test first! Here is my accumulated

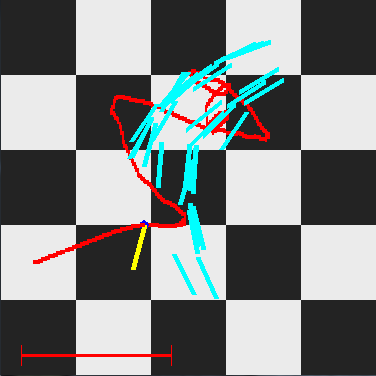

image:

So it was not that boring in the end. No wonder the drone did what she did. First it navigated to a random square, then to the perpendicular angle (but be aware … the width is not necessarily bigger than the height, see ((298, 508), (58, 319), -15)). Homework for you: for this particular box, was it correct to navigate to angle 0?
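One way to make the angle independent of which side OpenCV calls the width is to always report the direction of the longer side. A sketch, assuming (as the drawing test above suggests) that the angle is the direction of the width side, in degrees; the function name is hypothetical:

```python
def long_axis_angle(rect):
    """Direction of the longer side of ((x, y), (w, h), angle), in (-90, 90]."""
    _, (w, h), angle = rect
    if w < h:
        angle += 90  # the height side is the long one, perpendicular to width
    # A strip direction is only defined modulo 180 degrees.
    while angle > 90:
        angle -= 180
    while angle <= -90:
        angle += 180
    return angle
```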

February 21st, 2014 - The Plan

Aleš wrote me an e-mail saying that it is not clear from my description what exactly the plan is. And he is right!

The plan is to use a standard notebook (one running Win7 for Heidi, another running Linux for Isabelle), where all the image processing is handled. Navigation data plus the video stream from the drone are received via WiFi. WiFi is also used for sending AT commands to the drone. The code is written in Python, and OpenCV is used for image processing. The code is publicly available at https://github.com/robotika/heidi.
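For readers new to the AR Drone: AT commands are short ASCII strings sent over UDP, by default to port 5556 of the drone at 192.168.1.1, each terminated by a carriage return and carrying an increasing sequence number. Floating-point arguments are sent as the signed integer with the same 32-bit IEEE 754 bit pattern. A minimal sketch of building the movement command `AT*PCMD`, based on the AR.Drone SDK documentation; the helper names are hypothetical and this is not the code from ardrone2.py:

```python
import struct

DRONE_IP = "192.168.1.1"
AT_PORT = 5556


def float_arg(value):
    """Reinterpret a 32-bit float as a signed int, as the AT protocol expects."""
    return struct.unpack("<i", struct.pack("<f", value))[0]


def at_pcmd(seq, flag, roll, pitch, gaz, yaw):
    """Build AT*PCMD; roll/pitch/gaz/yaw are in <-1, 1>, flag=1 enables them."""
    args = [seq, flag] + [float_arg(v) for v in (roll, pitch, gaz, yaw)]
    return "AT*PCMD=" + ",".join(str(a) for a in args) + "\r"


def send_at(sock, command):
    """Send one AT command string over an already created UDP socket."""
    sock.sendto(command.encode("ascii"), (DRONE_IP, AT_PORT))

# Usage (not executed here):
# import socket
# sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# send_at(sock, at_pcmd(1, 1, 0.0, -0.1, 0.0, 0.0))
```

The value -0.8, for example, is sent as -1085485875, which is the example used in the SDK documentation.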

Aleš is also right regarding the task complexity. According to his estimates it will be necessary to fly at an average speed of at least 1.5 m/s. With a video sampling rate of 1 Hz (current status), that will be quite hard. It is a challenge … just as the name of the competition says, "Robot Challenge"! See you in the area!
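To put the two numbers together (plain arithmetic from the values above, assuming the 1 Hz processing rate stays as it is), the distance flown between two processed video frames is

$$ d = \frac{v}{f} = \frac{1.5\,\mathrm{m/s}}{1\,\mathrm{Hz}} = 1.5\,\mathrm{m}, $$

so the drone would cover one and a half meters between two consecutive image-based corrections.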

p.s. If this blog was a sufficient impulse for Aleš to install and start playing with "OpenCV for Python", then I think at least one alternative goal has already been reached.

February 26th, 2014 - Version 0 (almost)

Version 0 should be the simplest program solving the given task. In the context of AirRace this means completing at least one figure-eight loop. And we are not there yet. Camera integration always slows down development …

In the meantime I also checked the other competitors. There are (or yesterday were) 12 of them! So while in 2012 one completed round would have been enough to win the competition, in 2013 it was enough for 3rd place (see Heidi), and in 2014? We will see.

Here is the source code diff for test-14-2-25. There were some surprises, as usual.

Z-coordinate

Probably the biggest surprise was the test recorded in meta_140225_183844.log. The drone started aggressively and reached a height of 2 meters instead of the usual 1 meter. That would still be OK, and it would soon reduce the height to the desired 1.5 meters, but according to navdata the height was 1.43 m! Moreover, the minimum size of detected rectangles was set to 200 pixels, so no navigation data was used/recognized.

Rectangles

One more comment about rectangle detection. Originally there was no limit on the rectangle size, and that was wrong. Here are some numbers so you get an idea: at 1 m, the 30 cm long strip is detected as a 300-pixel rectangle. This means that at 2 m it should be approximately 150 pixels (the current filter value).
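Since the apparent length is inversely proportional to the height, the measurement above gives a simple model: about 300 px·m for a 30 cm strip. A sketch of the expected length and of a size filter built on it; the function names and the use of the longer side are assumptions for illustration, not the filterRectangles code from the repository:

```python
PIXELS_AT_1M = 300.0  # 30 cm strip seen from 1 m (measured value above)


def expected_strip_pixels(height_m):
    """Apparent strip length in pixels at a given camera height."""
    return PIXELS_AT_1M / height_m


def filter_by_length(rects, min_length):
    """Keep ((x, y), (w, h), angle) rectangles whose longer side >= min_length."""
    return [r for r in rects if max(r[1]) >= min_length]
```

With this model the Z-coordinate surprise above is easy to see: at 2 m a strip is expected to be about 150 px long, so a 200-pixel limit rejects every strip.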

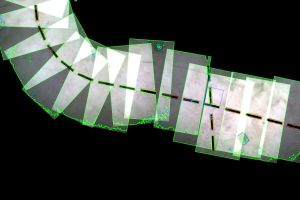

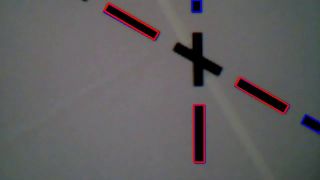

Thanks to Jakub bad rectangles are now visible in the debug the output:

Now I am browsing through the recorded videos and looking for "false positives" (or whatever it is called): rectangles which should not have been selected but were, and … here is a bad surprise:

Note that we are processing recorded video, which should be complete. So it is possible that the PaVE packet contained only part of the video frame. Another TODO.

Corrections in Y-coordinate

It turned out that sideways corrections are also necessary. Sure enough, there was a sign mistake: the image x coordinate corresponds to the drone Y coordinate, and it should be positive for x=0. Fixed.
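In code, the fix amounts to measuring the offset from the image center with the sign flipped, so that a strip at x=0 (the left image edge) gives a positive drone Y. A sketch with a hypothetical function name, where the meters-per-pixel scale is assumed to come from the strip length (0.3 m divided by its length in pixels):

```python
def drone_y_offset(image_x, image_width, meters_per_pixel):
    """Sideways (drone Y) offset of an image point; positive for x=0."""
    return (image_width / 2.0 - image_x) * meters_per_pixel
```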

Hovering

The most important step was inserting hovering, just to get the drone at least a little bit under control. The video frames are processed at 1 Hz, so a proportion between 0.0 and 1.0 splits that time between steering and hovering. 0.5 s was too slow, 0.8 s too fast, and 0.7 s was reasonable at this stage. The plan is to trigger hovering only when the error grows above some predefined limit.
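The time split can be sketched as a function of the time elapsed since the last processed frame. The 1 s period and the 0.7 split come from the text; the error-triggered variant reflects the plan above, and its limit is a hypothetical parameter:

```python
def choose_command(t_since_frame, steer_fraction=0.7, period=1.0):
    """Steer during the first steer_fraction of each frame period, then hover."""
    phase = (t_since_frame % period) / period
    return "steer" if phase < steer_fraction else "hover"


def choose_command_on_error(t_since_frame, error, error_limit,
                            steer_fraction=0.7, period=1.0):
    """Planned variant: use the hover slot only when |error| exceeds the limit."""
    if abs(error) <= error_limit:
        return "steer"
    return choose_command(t_since_frame, steer_fraction, period)
```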

Results 14-2-25

The drone is extremely slow at the moment. In one minute it was not able to complete a semicircle. It will be necessary to recognize which part of the figure-eight loop it is in and steer, instead of going forward as the default command.

Another detail to think about is flying backward instead of forward. The down-pointing camera is shifted a little bit back, which may simplify navigation in some cases. For example, in the test logged as meta_140225_185421.log, Isabelle flew across our table (we do not have protective nets), because she saw the perpendicular strip in only one frame … and then it was too late.

February 28th, 2014 - Bug in log process

Today I was investigating the timestamps of video vs. navigation data. What scared me a lot was a five-second gap between the initial frames:

    1548.280426 0
    1549.273254 -16
    1550.32669 <-- !!!!!!!! -18
    1555.131744 <-- !!!!!!!! -9
    1557.108245 <-- !!!!!!!! -14

… here you see the time in seconds and the detected angle in degrees, just to find/match the corresponding frame. So what is the problem? Here is the log file for image processing:

    … [((1009, 643), (74, 48), -18), ((1013, 624), (16, 12), -45), ((464, 482), (46, 266), -7), …]
    178
    []
    750
    [((172, 209), (15, 8), -9)]
    188
    []
    184
    [((327, 551), (43, 239), -14), ((244, 235), (43, 216), -14)]
    190
    [((1045, 676), (31, 38), -45), ((268, 483), (43, 236), -16)]
    199

The results are interlaced with the number of updates of the drone's internal loop (running at 200 Hz). The problematic number is 750, i.e. 3.75 s (750 / 200 Hz) … so for such a long time there was no result?! That would mean the drone navigation was based on a video frame several seconds old!

Well, the good news is that in reality it was not so bad and the mistake is in the logging. The results have to differ for a change to be stored. Here no rectangles were detected for several seconds (i.e. an empty list []), and these results were packed into one. With a timestamp and frame number, the results would always be different and then it should work fine.

It is fixed now and ready for a new test (see the GitHub diff).
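The failure mode is easy to reproduce with a change-only logger: identical consecutive results collapse into one entry, and the update counters in between add up. A minimal sketch (the class and the simulation are hypothetical, not the logging code of the repository) showing why adding the frame number makes every entry unique:

```python
class ChangeLogger:
    """Store a value only when it differs from the previous one, together
    with the number of loop updates since the previously stored value."""

    def __init__(self):
        self.entries = []
        self._last = object()  # sentinel, never equal to a real result
        self._updates = 0

    def update(self, value):
        self._updates += 1
        if value != self._last:
            self.entries.append((self._updates, value))
            self._last = value
            self._updates = 0


def simulate(results, updates_per_frame=200, with_frame_number=False):
    """Feed one result per video frame into a 200 Hz loop and return the
    update counts stored in the log."""
    log = ChangeLogger()
    for frame, result in enumerate(results):
        value = (frame, result) if with_frame_number else result
        for _ in range(updates_per_frame):
            log.update(value)
    return [count for count, _ in log.entries]
```

With three empty results in a row, `simulate` reports a single gap of 600 updates, the same kind of artifact as the 750 above; with frame numbers every frame gets its own entry of 200 updates.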

March 3rd, 2014 - Z-scaling

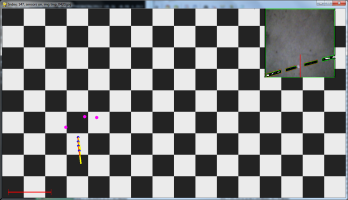

A quick observation based on today's tests by Jakub. It is about scaling the strip (x, y) coordinates based on the drone height (Z-scaling). The distance is estimated individually for each strip. I could not see any reason why three strips lying in a line are not in a line in the log viewer … ?! Well, I already told you the reason: the z coordinate is estimated separately for each strip (based on its length), and the middle one is much shorter (due to a light reflection), so it is expected to be further away …
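A small numeric illustration of the effect, with made-up pixel positions: three strips on a vertical image line, where the middle one appears shorter. The scale model (a 0.3 m strip, so meters per pixel = 0.3 / length in pixels) follows the 30 cm strips described in this blog; using one shared scale, here from the median strip length, is only one possible remedy and not necessarily what the code does:

```python
STRIP_LENGTH_M = 0.3


def to_meters(points_px, lengths_px, shared_scale=False):
    """Scale image offsets (from the image center) to meters.

    Each strip gets its own scale from its apparent length unless
    shared_scale is set, in which case the median length is used for all.
    """
    if shared_scale:
        median = sorted(lengths_px)[len(lengths_px) // 2]
        lengths_px = [median] * len(lengths_px)
    return [(x * STRIP_LENGTH_M / n, y * STRIP_LENGTH_M / n)
            for (x, y), n in zip(points_px, lengths_px)]
```

For the points (100, 0), (100, 100), (100, 200) with lengths 300, 200, 300 px, the per-strip scaling moves the middle strip to x = 0.15 m while the others stay at 0.1 m, so the line bends exactly as in the log viewer.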

March 4th, 2014 - A Brief History of Time

I could not resist using the title of Stephen Hawking's book, sorry. The AR Drone 2.0 has at least two time sequences: NavData and Video. They are encoded differently (see for example the Parrot forum), but the good news is that they probably share the same beginning of time. In this case not the Big Bang, but only the Drone Boot.
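For the record, the two encodings as I understand them from the SDK headers (treat this as an assumption to verify against your own logs): the navdata time is a 32-bit value whose upper 11 bits are seconds and lower 21 bits microseconds, while the PaVE header carries a timestamp in milliseconds. Converting both to seconds makes them comparable:

```python
def navdata_time_to_seconds(raw):
    """Upper 11 bits = seconds, lower 21 bits = microseconds (assumed layout)."""
    seconds = raw >> 21
    microseconds = raw & ((1 << 21) - 1)
    return seconds + microseconds / 1e6


def pave_timestamp_to_seconds(timestamp_ms):
    """PaVE timestamp in milliseconds (assumed unit)."""
    return timestamp_ms / 1000.0


def video_delay(navdata_raw, pave_timestamp_ms):
    """How much older the video frame is than the navdata sample, in seconds."""
    return navdata_time_to_seconds(navdata_raw) - pave_timestamp_to_seconds(pave_timestamp_ms)
```

With only 11 bits for seconds, the navdata time wraps around after 2048 s (about 34 minutes), which is longer than one battery charge.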

The video stream is delayed. Not much for recording, but maybe a lot for fast drone navigation. The delay varies around 0.3 s, but you can also see values like 0.44 s, and probably more. Here you can see the difference between tying the video frame to the current pose and keeping a poseHistory:

(images: before time correction, after time correction)
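Keeping a pose history means storing (timestamp, pose) pairs from navdata and, when a video frame arrives, looking up the pose at the frame's timestamp rather than using the latest one. A sketch with linear interpolation between neighbouring samples; the pose is simplified to (x, y, heading), the function name is hypothetical, and heading interpolation here ignores the wrap-around at ±180 degrees:

```python
import bisect


def pose_at(history, t):
    """Interpolate (x, y, heading) at time t from a sorted list of
    (timestamp, (x, y, heading)) pairs; clamps outside the covered range."""
    times = [ts for ts, _ in history]
    i = bisect.bisect_left(times, t)
    if i == 0:
        return history[0][1]
    if i == len(history):
        return history[-1][1]
    (t0, p0), (t1, p1) = history[i - 1], history[i]
    a = (t - t0) / (t1 - t0)
    return tuple(v0 + a * (v1 - v0) for v0, v1 in zip(p0, p1))
```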

March 5th, 2014 - test-14-3-4

Yesterday's tests were quite discouraging :(. We had visitors, and the best we could do was finish half of the figure-eight loop. After the second turn Isabelle decided to "turn right" at the crossing, and that was it. So we still do not have a working version 0!!!

Now it is time to go through the log files and look for anomalies. Starting with the last test, I can see a 90-degree jump in heading. This is probably normal, as the internal compass is taken into account and it takes a while before enough data are collected.

Isabelle is not very high (approx. 1 m) at the start position. It is a pity that she does not recognize a partially visible strip. Moreover, the recognized strip is shorter due to light reflection, so the distance estimate is not very accurate.

There is an obvious problem with the Y-coordinate correction. It is "one shot" only, with a 5 cm dead zone. For a 10 cm error it creates beautiful oscillations. On the other hand, the angle correction with a dead zone of +/- 15 degrees (!) does not correct anything for the first three image snapshots … OK, now it is clear. Who programmed that?!
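For comparison, a continuous correction with a dead zone and saturation, applied on every update instead of a single "one shot" command, could look like the sketch below. The gain, the limit, and the choice to subtract the dead zone (so the output starts from zero at its edge instead of jumping) are illustrative assumptions, not the values used on the drones:

```python
def dead_zone_correction(error, dead_zone, gain, limit):
    """Proportional correction that ignores errors inside +/- dead_zone.

    The dead zone is subtracted so the output is continuous at its edge,
    and the result is clipped to +/- limit.
    """
    if abs(error) <= dead_zone:
        return 0.0
    excess = abs(error) - dead_zone
    command = min(gain * excess, limit)
    return command if error > 0 else -command
```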

Another source of problems is the failure to detect the transition from circle to line. As a result, circle corrections are used instead of line navigation.

There are only 3 more tries/test evenings to go, so we will have to pick from the following list:

- switch to smaller images, stream the preview video (i.e. not the recorded one), faster frame rate

- C/Python binding to FFMPEG and parsing intermediate images (i.e. more than 1 Hz video update rate)

- MCL (Monte-Carlo Localisation) based on strip positions

- improve the quality of strip image recognition

- big image of the floor from matched and joined mosaic images

- rectangle merging (two small rectangles are detected when one strip is split by a light reflection)

- position-driven corrections

March 6th, 2014 - Cemetery of Bugs

Hopefully I can give today's commit this title. Sometimes it is hard to believe how many bugs can hide in a few lines of code. I already mentioned a couple yesterday, so here are a few more:

    drone.moveXYZA( sx, -sy/4., 0, 0 )

This was supposed to replace the hover() function in the Y coordinate. The idea was to send the drone with the speed opposite to the one it is flying at now. So what is the problem? sy is not the drone speed! It is the command for tilting right or left computed by the image analysis. It should be drone.vy instead.

Another bug, probably not so critical, was a block of code conditioned on whether any strip was detected. If there was no strip, the history of poses kept growing (normally it is cut at the timestamp of the last decoded video frame). Solved by completely removing the if statement; it is no longer needed. I no longer worry when I do not see any rectangle.
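The pruning step can be sketched as a function that is called for every decoded frame, regardless of whether any strip was found. It keeps the last sample at or before the frame time so that interpolation remains possible; that detail and the function name are assumptions:

```python
import bisect


def prune_history(history, frame_time):
    """Drop (timestamp, pose) entries older than the last one at or before
    frame_time; history must be sorted by timestamp."""
    times = [ts for ts, _ in history]
    i = bisect.bisect_right(times, frame_time) - 1
    return history[max(i, 0):]
```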

Then there was a surprise with the ARDrone2.vy speed itself. I had never needed it except for the drone pose computation, so it kept the signs exactly as you get them from Parrot. Fixed in ardrone2.py.

So what next? Hopefully Jakub will test the new code with reference circles today. Instead of navigating based on old images, Isabelle should now use a circular path estimated from the image. It should be much better, but will it be?
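One simple way to estimate a circular path from detected strips is the circle through three strip centers. This is a hypothetical illustration of the geometry, not necessarily how the reference circle is computed in the repository:

```python
def circle_from_points(p1, p2, p3):
    """Return (cx, cy, r) of the circle through three points, or None if
    the points are (nearly) collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        return None  # collinear points: a straight line, not a circle
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    r = ((x1 - cx) ** 2 + (y1 - cy) ** 2) ** 0.5
    return cx, cy, r
```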

March 10th, 2014 - Shadow

Jakub sent me the results of Friday's tests with navigation along the reference line, with the comment: we've got problems with shadows … and he was absolutely right:

This is not the first time that

    ret, binary = cv2.threshold( gray, 0, 255, cv2.THRESH_OTSU )

fails. On the other hand, it is no surprise: there are three peaks in the histogram instead of two. The worst thing about this particular picture is that it not only fails to detect any of the three black strips, but also evaluates garbage as a perpendicular strip!

For a quick test I used just the IrfanView histogram to show what I mean:

(image: histogram)

Note that I ignored the red/green/blue pixels and picked the blue histogram, because there you can see the three peaks best.
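A textbook Otsu implementation on a histogram shows the failure mode: with three peaks (dark strips, shadow, bright floor), the single threshold that maximizes the between-class variance can put the shadow into the same class as the strips. This is a pure-Python sketch with made-up peak positions and counts, not OpenCV's implementation:

```python
def otsu_threshold(hist):
    """Return t maximizing the between-class variance for the classes
    {levels <= t} and {levels > t} (textbook Otsu, unnormalized)."""
    total = sum(hist)
    total_sum = sum(level * count for level, count in enumerate(hist))
    best_t, best_score = 0, -1.0
    w0 = sum0 = 0
    for t, count in enumerate(hist):
        w0 += count
        sum0 += t * count
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / float(w0)
        mu1 = (total_sum - sum0) / float(w1)
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def histogram(peaks):
    """Build a 256-bin histogram from {gray_level: pixel_count}."""
    hist = [0] * 256
    for level, count in peaks.items():
        hist[level] = count
    return hist
```

For two peaks at 30 and 200 the threshold separates them cleanly. For strips at 20 (50 px), shadow at 100 (300 px) and floor at 220 (650 px), the best threshold is 100, so every shadow pixel ends up in the "dark strip" class.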

March 12th, 2014 - Finally Version 0!

I can sleep again. Not much (it is 5 am now), but still much better. Yesterday Heidi finally broke the spell and, after several weeks, completed the figure-eight loop!

Are you asking why Heidi and not her sister Isabelle? Well, there was a surprise: Isabelle does not have a working compass! Yesterday I wanted to add extra direction filtering, basically to make sure that I am following the proper line. So I integrated the magneto tag into the ARDrone2 variables. I was using the last 2-minute test from Jakub (off topic: that was maybe another moment for celebration, when Isabelle was able to fly and navigate autonomously for 2 minutes), and to my surprise the raw compass readings were constant:

    compass: -55 -57 -191 193.7109375 187.55859375 671.484375 -120.508392334 108.018455505 671.484375 314.219329834 79.5401382446 0.0 -121.496063232 0.0 -137.727676392 0x01 2 402.916381836 -241.082290649 0.0546875

This corresponds to the following named variables:

    values = dict(zip(['mx', 'my', 'mz',
                       'magneto_raw_x', 'magneto_raw_y', 'magneto_raw_z',
                       'magneto_rectified_x', 'magneto_rectified_y', 'magneto_rectified_z',
                       'magneto_offset_x', 'magneto_offset_y', 'magneto_offset_z',
                       'heading_unwrapped', 'heading_gyro_unwrapped', 'heading_fusion_unwrapped',
                       'magneto_calibration_ok', 'magneto_state', 'magneto_radius',
                       'error_mean', 'error_var'], magneto))

This was kind of magic: it is hard to believe how perfectly the gyro + compass data fusion hid this problem. In particular, if you watch the variables heading_unwrapped, heading_gyro_unwrapped and heading_fusion_unwrapped, the first one (compass heading) was constant (as were mx, my, mz and others). The second one (gyro heading) was slowly changing, and in the fused heading the compass was initially the winner, but later on it was losing against the gyro. It is really hard to believe that it worked so well, and except in longer flights you would not notice it.

I could not resist and immediately tried Heidi at home in the kitchen. I cut the motors as soon as they started to turn (it was 6 am, so the neighbours would probably complain). There were two tests: first with the drone pointing south and then with the drone pointing north. The values were different, so it is an issue of Isabelle only.

Now, what is worse than a non-working sensor? Any idea? Well, there is an even worse case: a sometimes-working sensor! After several test flights with Heidi yesterday, we also tried the final working algorithm with Isabelle. And it worked fine! Do you see the persistent direction of the figure eight?

Just to get an idea (it is visible only from the raw data), here is the older 2-minute flight mentioned earlier:

Note that I had to change the scale so that you can see the crazy trajectory …

Fun, isn't it? Now I would guess that the communication with the compass module sometimes fails and the only remedy is to power off the drone and restart the whole system. Maybe. Another TODO: go through older log files and check for this behavior.
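A quick check for that log review could flag a run of identical raw magnetometer readings (mx, my, mz) while the gyro heading keeps moving. A sketch over records shaped like the `values` dictionary above; the window size and the heading threshold are hypothetical:

```python
def compass_stuck(samples, window=50, min_gyro_change=5.0):
    """Return True if (mx, my, mz) stay identical for `window` consecutive
    samples while heading_gyro_unwrapped changes by more than
    min_gyro_change degrees, i.e. the drone turns but the compass does not."""
    run_start = 0
    for i in range(1, len(samples)):
        same = all(samples[i][k] == samples[i - 1][k] for k in ("mx", "my", "mz"))
        if not same:
            run_start = i  # readings changed, start a new run
            continue
        if i - run_start + 1 >= window:
            turned = abs(samples[i]["heading_gyro_unwrapped"]
                         - samples[run_start]["heading_gyro_unwrapped"])
            if turned > min_gyro_change:
                return True
    return False
```

The gyro condition avoids flagging a drone that is simply not rotating.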

So that was the bad news … now the good news, and the title of today's post. We finally managed to complete the figure-eight loop and repeat it several times. There were three changes (see the older diff):

- decreased speed from 0.5 m/s to 0.3 m/s

- wait at the start if no strip was detected

- fixed bug: missing abs()

The speed is obvious and does not need any explanation. The second point eliminates most bad starts: the takeoff sequence ends at about 1 m above the ground, while a reasonable view from the bottom camera is at about 1.5 m. The algorithm slowly increases the height, but it should not leave the start area.

And the third one is the funny one. It was the reason why Isabelle or Heidi took the wrong turn at the crossing. It was quite repeatable … fixed.

p.s. I also wanted to post a link to a YouTube video, but my video converter no longer works … it looks like there were some changes in FFmpeg … but maybe it is enough to change avcodec_get_context_defaults2 to avcodec_get_context_defaults3 and replace AVMEDIA_TYPE_VIDEO by NULL, as in this example. It looks OK, and here is the video … note the strange behaviour near the end. Still plenty of work to do.

March 13th, 2014 - Frame contour

Did you watch the video of Isabelle's first successful flight to the end? Can you guess what happened? If you have no idea, then frame number 3630 from video_rec_140311_192140.bin can help you:

And here is the report of changes near the end:

    FRAME 3540 I I
    FRAME 3570 I I
    TRANS I -> X
    FRAME 3600 X I
    TRANS I -> L
    FRAME 3630 L L
    NO dist change! -0.989344682643
    TRANS L -> X
    FRAME 3660 X I
    FRAME 3690 I I
    FRAME 3720 ? I
    FRAME 3750 ? I
    TRANS I -> L
    FRAME 3780 L L
    FRAME 3810 L L
    FRAME 3840 L L
    FRAME 3870 L L
    NAVI-OFF 120.001953

Isabelle wrongly interpreted this image as a left turn (!) … well, yeah … one pair looks like two consecutive strips on the left-turn circle. And then there is a transition from circle to crossing, which should never happen, where the drone picked the wrong line for navigation and started to fly loops in the opposite direction.
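A guard against such jumps is to check each detected transition against a table of allowed ones. In the sketch below the states follow the report (I = line, X = crossing, L = left circle) plus an assumed R for the right circle; the table itself is my assumption, built only on the remark that a circle should never be followed directly by the crossing:

```python
ALLOWED = {
    "I": {"X", "L", "R"},  # a straight line may lead to the crossing or a circle
    "X": {"I"},            # after the crossing comes a straight line
    "L": {"I"},            # a circle ends in a straight line, never in X
    "R": {"I"},
}


def accept_transition(current, detected):
    """Return the new state, or keep the current one if the jump is not allowed."""
    if detected == current or detected in ALLOWED.get(current, set()):
        return detected
    return current
```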

p.s. Note that Heidi (the older sister, with several injuries from last year) actually finished the figure-eight loop sooner, but in her last attempt (meta_140311_191029.log) she unexpectedly landed in the middle of the test. What happened there? Low battery (39% at the start and 0% after landing) … OK, so now we know that when the battery is low, the drone lands automatically.

March 14th, 2014 - cv2.contourArea(cnt, oriented=True)

VlastimilD wrote: the function cv2.contourArea has a parameter "oriented", which is False by default; if set to True, it returns a negative area when the contour has the opposite orientation, which in this case means white on black. So if you don't want those, setting oriented to True should filter them out in the following "if". … and he is absolutely right! Thanks for the hint.

For details see the OpenCV documentation.
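The sign comes from the shoelace formula: the same polygon traversed in the opposite direction gives the same magnitude with the opposite sign. A pure-Python illustration (which orientation OpenCV reports as positive also depends on the y-down image axes, so check it on real contours rather than trusting this sketch):

```python
def signed_area(points):
    """Shoelace formula: positive for counter-clockwise order in y-up axes."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]
        total += x0 * y1 - x1 * y0
    return total / 2.0
```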

p.s. I am sorry for the misspelled name in the commit.

March 16th, 2014 - MP4_360P_H264_360P_CODEC

We have decided to switch to video recording in the MP4_360P_H264_360P_CODEC mode. The previous mode was MP4_360P_H264_720P_CODEC, i.e. with a higher resolution (1280x720) at 30 fps. Our main motivation was to get rid of split images: cases where you get a mix of the old and the new image (for details see mixed video frame).

Another motivation was to reduce the WiFi traffic and the size of the recorded reference videos. So far so good. And yes, there was at least one surprise. The 720P video was not only higher resolution but also 30 fps, while the 360P video runs at 15 fps! For us that is actually good news, because the expected size will not be 4 times smaller but 8 times (in reality the difference won't be that big, because larger and more frequent pictures are easier to compress).
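The factor of 8 is simply the ratio of the raw pixel rates of the two modes:

$$ \frac{1280 \cdot 720 \cdot 30}{640 \cdot 360 \cdot 15} = \underbrace{\frac{1280 \cdot 720}{640 \cdot 360}}_{4} \cdot \underbrace{\frac{30}{15}}_{2} = 8 $$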

March 17th, 2014 - Scale Blindness

Have you ever had the feeling that a mistake is so big, so out of range, that you cannot see it? Here is the story: the low-resolution video does not work. The navigation algorithm is much, much worse. Why? The error is too big to be seen … and it is already present in all the previous images.

Just for comparison, here is one non-working 360P image:

(image: example of 360P image)

Do you see now why cv2.THRESH_OTSU used to work fine before, but does not work any more? No? Boring, right? The bottom-facing camera has QVGA (320x240) resolution, according to the Parrot specification. That resolution is compatible neither with 1280x720 nor with 640x360. But what is maybe not expected is that for the high resolution the image is scaled 3 times (720 = 240*3) and the margins are filled with black pixels, while for 640x360 it is scaled twice (640 = 320*2) and the overflowing pixels are cut! The histogram of the high-resolution video contained (1280-960)*720 totally black pixels …
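The arithmetic can be checked with a few lines. The scale factors (3 for 720P, 2 for 360P) are taken from the text above; the function name is hypothetical, and it only counts pixels, independent of where exactly the padding or cropping happens:

```python
def sensor_layout(out_w, out_h, scale, sensor=(320, 240)):
    """Place a scaled sensor image into an out_w x out_h frame.

    Returns (black_pixels, visible_fraction): padding pixels where the scaled
    image is smaller than the frame, and the share of the scaled sensor image
    that survives cropping where it is larger.
    """
    w, h = sensor[0] * scale, sensor[1] * scale
    kept = min(w, out_w) * min(h, out_h)
    return out_w * out_h - kept, kept / float(w * h)
```

For 720P this gives 230400 black pixels (a quarter of every frame) and the whole sensor image visible; for 360P no black pixels, but only 0.75 of the sensor image is visible.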

March 18th, 2014 - Version 1 and MSER

It is still quite depressing: we would like to get 30 rounds, and at the moment we are happy if we complete 3! Well, it is called progress, but up to now Version 0, with eight figure-eight loops, was still better.

What is the main difference between Version 0 and Version 1? First of all, it is the video input with the smaller resolution. After yesterday's discovery we know that the image covers only 3/4 of the scene visible in the high-resolution video. This means that you see two complete strips less frequently than before. There is a new class, StripsLocalisation, which should compensate for this by also taking into account the previous image and the movement of the drone between snapshots.
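The core of such a compensation is expressing a strip seen in the previous image in the current drone frame, given the odometry between the two snapshots. A sketch of that transform only (2D, with a hypothetical (dx, dy, dtheta) motion expressed in the previous frame); it is not the StripsLocalisation code:

```python
import math


def to_current_frame(point, motion):
    """Transform a point from the previous drone frame into the current one.

    point: (x, y) in meters in the previous frame.
    motion: (dx, dy, dtheta) of the drone between snapshots, expressed in
    the previous frame; dtheta in radians, counter-clockwise positive.
    """
    x, y = point
    dx, dy, dtheta = motion
    tx, ty = x - dx, y - dy                       # shift to the new origin
    c, s = math.cos(-dtheta), math.sin(-dtheta)   # undo the drone rotation
    return (c * tx - s * ty, s * tx + c * ty)
```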

There is not much time left, only one week, so there is almost zero chance that we will integrate Version 2, which would handle absolute localization and compensate for multiple failures … oh well.

There was good news at the end too: it is possible to use the MSER (Maximally Stable Extremal Regions) implementation in OpenCV for real-time image processing. Isabelle was able to complete several rounds with this algorithm. The video delay was comparable to the normal binary threshold set for 5% of the pixel count.
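My reading of "binary threshold set for 5% of all pixels" is a threshold chosen from the histogram so that the darkest 5% of pixels become foreground. A sketch of that idea under this assumption (the function name and tie handling are hypothetical):

```python
def percentile_threshold(hist, fraction=0.05):
    """Smallest gray level t such that pixels with value <= t make up at
    least `fraction` of all pixels (hist: list of 256 counts)."""
    target = fraction * sum(hist)
    cumulative = 0
    for level, count in enumerate(hist):
        cumulative += count
        if cumulative >= target:
            return level
    return len(hist) - 1
```

The resulting level could then be passed to cv2.threshold with cv2.THRESH_BINARY_INV, so that the pixels at or below it (the dark strips) become white.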

March 19th, 2014 - MSER and negative area

The trick with the negative area does not work for MSER output (!). And by the way, the function cv2.contourArea(cnt, oriented=True) also fails on Linux:

    OpenCV Error: Unsupported format or combination of formats (The matrix can not be converted to point sequence because of inappropriate element type) in cvPointSeqFromMat, file /build/buildd/opencv-2.4.2+dfsg/modules/imgproc/src/utils.cpp, line 59
    0
    Traceback (most recent call last):
      File "airrace.py", line 180, in <module>
        testPaVEVideo( filename )
      File "airrace.py", line 155, in testPaVEVideo
        print frameNumber( header )/15, filterRectangles(processFrame( frame, debug=True ), minWidth=150/2)
      File "airrace.py", line 31, in processFrame
        area = cv2.contourArea(cnt, oriented=True)
    cv2.error: /build/buildd/opencv-2.4.2+dfsg/modules/imgproc/src/utils.cpp:59: error: (-210) The matrix can not be converted to point sequence because of inappropriate element type in function cvPointSeqFromMat

Why it does not work is probably due to a missing external contour, but it sometimes works, so I am not sure. If you want to play, here is the original picture; uncomment the g_mser creation (airrace.py:27) and see for yourself:

    ./airrace.py mser-orig.jpg

No, you won't see it! That's even more scary!! I forgot about the oriented parameter for the MSER area!!! The stored JPG file is a little bit different, but you get completely different results than from the original video (see the sign of 1412):

    m:\hg\robotika.cz\htdocs\competitions\robotchallenge\2014>m:\git\heidi\airrace.py mser-orig.jpg
    1412.5 -3237.5 -7165.5 -897.5 -1488.0 -1261.0 492.5 -6224.0

vs.

    m:\git\heidi>airrace.py logs\video_rec_140318_195005.bin
    62 62 -6079.5 -3189.0 -1648.5 -32.5 -1202.0 -4846.5 -369.5 [((406, 49), (124, 23), -61), ((346, 209), (132, 23), -77)]

… I do not feel comfortable now :-(. For MSER just forget about the area. It is filtered out at the beginning anyway, right?

    g_mser = cv2.MSER( _delta = 10, _min_area=100, _max_area=300*50*2 )  # OpenCV 2.4 API (cv2.MSER_create() in 3.x); areas in pixels

I should probably learn more about the parameters…

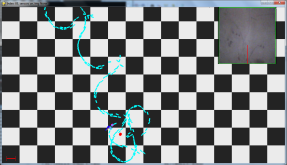

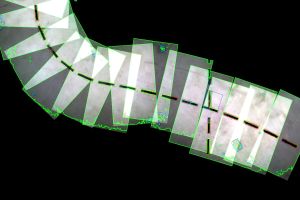

March 23rd, 2014 - mosaic.py (5 days remaining)

A couple of days ago I wanted to play a little, so instead of reviewing more and more test results, I tried to write mosaic.py, a script for combining multiple images into one. The first plan was to feed the script with a list of images only, and the rest would be automatic. Very naive, given my energy and mental state that evening. So I dropped it that night.

Later I started to play with MCL (Monte-Carlo Localization), and one crucial "detail" is: how big is the drone position estimation error? My only reference is the list of collected images, so the mosaic.py implementation earned its place even in this tight schedule. This time it was fully manual: press the arrow keys to shift the new image and the q/a keys to rotate it. It worked. But it was tedious work even for three samples. And it did not quite solve the original task: how big is the error?

So I slightly changed the interface: instead of a list of images I am passing in a CSV file containing filename, x, y, degAngle on every line. The script also prints this on standard output when you make a correction, so you can reuse it next time.
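The CSV round trip can be sketched with the standard csv module. The column order (filename, x, y, degAngle) is from the text; the absence of a header row and the function names are assumptions:

```python
import csv
import sys


def read_placements(lines):
    """Parse 'filename,x,y,degAngle' lines into (str, float, float, float);
    `lines` can be an open file or any iterable of strings. Empty lines are skipped."""
    return [(row[0], float(row[1]), float(row[2]), float(row[3]))
            for row in csv.reader(lines) if row]


def write_placements(placements, f=sys.stdout):
    """Print placements in the same format, so corrected values can be
    redirected into a new CSV file and reused next time."""
    writer = csv.writer(f, lineterminator="\n")
    for name, x, y, angle in placements:
        writer.writerow([name, x, y, angle])
```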

Well, so far so good. The struggle came with exporting the CSV from airrace_drone.py. The Y coordinate on images is upside down, the rotation is not around the center but around the top-left corner, the camera is rotated -90 degrees relative to the robot/drone pose … and probably much more, like the image size depending on the height, or tilt compensation.
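One of these pitfalls, rotation around the top-left corner instead of the center, has a closed-form fix: rotating by an angle θ around the center c is the same as rotating around the corner and then shifting by c − R(θ)c. A sketch of that shift (pure geometry, not how mosaic.py applies it; angles are counter-clockwise in y-up axes, and with y-down image coordinates the visual direction flips, one of the sign traps listed above):

```python
import math


def corner_rotation_shift(width, height, deg_angle):
    """Shift to add after rotating an image around its top-left corner so
    that the result equals a rotation around the image center."""
    cx, cy = width / 2.0, height / 2.0
    a = math.radians(deg_angle)
    rx = math.cos(a) * cx - math.sin(a) * cy  # R(theta) applied to the center
    ry = math.sin(a) * cx + math.cos(a) * cy
    return cx - rx, cy - ry
```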

The result is still not worth presenting. It is mainly for me, to write the notes down, so that I realize where the mistake is.

The images are combined via cv2.add(img1, img2), so overlapping parts are brighter. The shift in the turn is probably due to scaling, but at the moment I have no idea why the drone basically stops on the straight segment (the white area at the bottom). Maybe I should correct the mosaic manually, so you get an idea how nice/useful it could be. BTW, the absolute error in direction is very small (less than a degree), so the compass is great (if it works at all! We should get a replacement for Isabelle next week, ha ha ha).

So here is the mosaic with shift correction only:

[Figure: mosaic with shift correction]

Every image is a single video I-frame, i.e. 1 Hz, covering approximately 15 seconds of flight. The black dashed-line segments are 30 cm long with 10 cm spacing.

And here is the mosaic with angle correction as well (13 degrees for the last image, i.e. 1 degree per frame):

Exercise for you: how fast was Isabelle flying?
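The angle correction used for the second mosaic grows linearly with the frame index (13 degrees for the last image at 1 degree per frame). A minimal sketch on the (filename, x, y, degAngle) row layout of the CSV; the function name and the sign of the correction are assumptions.

```python
def apply_linear_angle_correction(rows, deg_per_frame=1.0):
    """Add i * deg_per_frame degrees to the i-th image (rows in time order).

    rows: (filename, x, y, degAngle) tuples; the sign of the drift is an assumption.
    """
    return [(name, x, y, angle + i * deg_per_frame)
            for i, (name, x, y, angle) in enumerate(rows)]
```

With 14 images (indices 0 to 13) the last one gets exactly the 13 degrees mentioned above.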

March 24th, 2014 - MSER and battery (4 days remaining)

Here are some results of today's testing:

- MSER is superior to normal thresholding

- there is again the same problem as before with normal contours: a negative area (figure: inverse MSER region)

- we finally completed 10 loops (see video), but Heidi's battery died after 6 minutes

March 25th, 2014 - Bermuda Triangle (3 days remaining)

My Bermuda Triangle is the center area where the lines cross and the drones lose their navigation skills. The wrongly detected strip has the following properties:

- it is white

- it is on the image border

- it is a triangle

First I wanted to attack the 3rd point, the triangle. The idea was: if it is a rectangle, then the minimum fitting rectangle will have almost the same area, while a triangle will cover only half of it. Does that sound reasonable?

I verified on the 6-minute video that I still get results identical to yesterday's real flight (sometimes these "stupid" tests are the most important ones), and it was OK. So I added the area computation and a print:

    rect = cv2.minAreaRect(cnt)
    area = cv2.contourArea(cnt)
    print "AREA %.2f\t%d\t%d" % (area/float(rect[1][0]*rect[1][1]), area, rect[1][0]*rect[1][1])

Well, I expected the percentage of covered area, but …

    AREA 0.05 121 2482
    AREA 1.60 4936 3084
    AREA 1.26 5033 3983
    AREA 0.35 253 731
    AREA 1.93 1830 950
    AREA 0.57 1366 2415
    AREA 2.24 6819 3044

… nice, isn't it? So what the hell is that?!

It looks like it is just an unsorted array of points. Now it makes sense why one of the MSER examples applies a convex hull before the contour is drawn. So I am not looking for the contour area; all I need is len(cnt):

    AREA 0.90 2242 2482
    AREA 0.91 2806 3084
    AREA 0.88 3514 3983
    AREA 0.86 626 731
    AREA 0.83 787 950
    AREA 0.90 2178 2415
    AREA 0.93 2845 3044

That looks much better.
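A pure-Python sketch of this fill-ratio test: the number of region pixels (the len(cnt) idea) divided by the area of the minimum enclosing rectangle. The rectangle is found from the convex hull, because one of its sides always lies on a hull edge, so unsorted points are fine. It stands in for cv2.minAreaRect so the example runs without OpenCV; the function names are hypothetical, and the default 0.7 threshold is the 70% limit discussed in this entry.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain: accepts unsorted points, returns CCW hull vertices."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def min_area_rect_area(hull):
    """Area of the smallest enclosing rectangle; one of its sides lies on a hull edge."""
    best = float("inf")
    n = len(hull)
    for i in range(n):
        ex = hull[(i + 1) % n][0] - hull[i][0]
        ey = hull[(i + 1) % n][1] - hull[i][1]
        length = math.hypot(ex, ey)
        if length == 0:
            continue
        ux, uy = ex / length, ey / length
        along = [px * ux + py * uy for px, py in hull]   # projection on the edge
        across = [py * ux - px * uy for px, py in hull]  # projection on its normal
        best = min(best, (max(along) - min(along)) * (max(across) - min(across)))
    return best


def fill_ratio(region_pixels):
    """Distinct pixel count divided by the min-area rectangle area."""
    hull = convex_hull(region_pixels)
    if len(hull) < 3:
        return 0.0  # degenerate region: a point or a line
    rect_area = min_area_rect_area(hull)
    return len(set(region_pixels)) / rect_area if rect_area > 0 else 0.0


def looks_like_strip(region_pixels, min_ratio=0.7):
    """Rectangles score about 1 (slightly more for small pixel regions), triangles about 0.5."""
    return fill_ratio(region_pixels) >= min_ratio
```

Because pixels are counted rather than measured, small regions can score slightly above 1.0, which matches values like 1.01 in the log.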

Do you want to see the numbers for yesterday's image (video_rec_140324_195834.bin, frame 17)?

    AREA 0.58 1438 2475
    AREA 0.57 1843 3219
    AREA 0.26 4041 15301
    AREA 0.32 5284 16650
    AREA 1.01 624 620
    AREA 0.99 818 825
    AREA 0.86 1586 1850
    AREA 0.85 2104 2481
    AREA 0.87 1320 1519
    AREA 0.85 1673 1964
    AREA 0.82 2269 2772
    AREA 0.91 1602 1767
    AREA 0.84 2361 2794
    AREA 0.91 3016 3299

The first two are probably the triangle detected at different threshold levels. After that comes the big crossing area and then reasonable strips. So a 70% limit would maybe be enough. And here is the result:

And now it is time for repeated video processing with the identity assert. Will it fail? Yes, even on the very first picture :-(. I had to add a condition to skip the first 10 frames (seconds) … during take-off there are many random objects. Then it went fine up to frame 218 … hmm, but I do not see anything interesting there (*). Time to re-log the whole video and compare the text results.

OK, there are 17 different results, at frames 5, 920, 1055, 1220, 1835, 2180, 2480, 2810, 3470, 3800, 4460, 5405, 5420, 6035, 6185, 6350 and 6530 (divide by 15 to get the I-frame order). Boring? Who told you this was going to be fun?
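Comparing two re-logged runs boils down to a per-frame diff. A minimal sketch, assuming the text logs have already been parsed into {frame_number: result} dictionaries (that parsing step and both function names are hypothetical):

```python
def differing_frames(reference, replay):
    """Frame numbers whose logged result differs between two runs.

    reference, replay: dicts {frame_number: result} built from the text logs.
    A frame present in only one of the runs also counts as different.
    """
    frames = sorted(set(reference) | set(replay))
    return [f for f in frames if reference.get(f) != replay.get(f)]


def to_iframe_index(frame, frames_per_iframe=15):
    """Integer I-frame index, the same as int(eval("920/15")) under Python 2."""
    return frame // frames_per_iframe
```

For example, a mismatch at frame 920 maps to I-frame 61.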

Off topic: I can pass an I-frame index to see/analyze only a particular frame, but what should I do with these "times 15" numbers? A bad mathematician and lazy programmer ends up with the following code:

testPaVEVideo( filename, onlyFrameNumber=int(eval(sys.argv[2])) )
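eval() on a command-line argument works, but it will run any Python expression. A sketch of a safer alternative (Python 3.8+) that accepts only numbers and + - * / // via the standard ast module; the function name is hypothetical:

```python
import ast
import operator

# Only these binary operators are allowed.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.FloorDiv: operator.floordiv,
}


def eval_arithmetic(text):
    """Evaluate plain arithmetic such as '920/15' without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression: %r" % text)

    return walk(ast.parse(text, mode="eval"))
```

The call would then become onlyFrameNumber=int(eval_arithmetic(sys.argv[2])), and anything other than arithmetic raises ValueError.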

The result looks OK, actually even better: it filters not only the bad triangles but also slightly inflated rectangles … so far so good. We will see during tonight's tests. Here is the code diff.

(*) 218 was not a frame number. It was the number of updates between frames 905 and 920, so the first failing frame was in reality 920/15.

March 26th, 2014 - Isabelle II (2 days remaining)

Believe it or not, we have a new drone now. It came yesterday as a "hot swap" for Isabelle with the broken compass, and she has already flown several rounds … thanks, Apple Store!

The second most important progress is that MSER now also works on Jakub's computer with Linux and OpenCV 2.4.2. The workaround is here (I am sure there is some faster/proper way to do it directly in NumPy, but there is not much time left for study/experiments).

The first test went as always: Heidi took off, detected a strip, started to turn, missed the transition to the straight segment and continued in the circle … damn.

The second test went fine. This scenario has already repeated about 5(?) times … and the magic is … height. We added extra time to reach the 1.5 m height, and since then (knock, knock, knock) it works fine. Here is the next changeset.

Left/right tilt compensation is implemented now. Why? We had added strip filtering: strips not only have to be spaced by 40 cm with a similar angle, their Y-coordinate offset must also be smaller than 25 cm. But then we saw the drone following the line, tilted to the right on one image and to the left on the next one (a second later). The difference was only 6 degrees, but at 1.5 m height this means about 15 cm (1.5 m × tan 6° ≈ 0.16 m), and that already matters.
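The 15 cm figure is just height × tan(roll) for a flat floor. A minimal sketch of compensating the observed Y offset before the 25 cm check; the function names, the (observed_y, roll) pairs and the sign convention are assumptions, not the Heidi code:

```python
import math


def tilt_shift_m(height_m, roll_rad):
    """Lateral ground shift of the image center caused by roll (flat floor assumed)."""
    return height_m * math.tan(roll_rad)


def compensated_y(observed_y_m, height_m, roll_rad):
    """Remove the roll-induced shift; the sign convention is an assumption."""
    return observed_y_m - tilt_shift_m(height_m, roll_rad)


def y_offset_ok(prev, curr, height_m, max_dy_m=0.25):
    """prev, curr: hypothetical (observed_y_m, roll_rad) pairs from consecutive images."""
    dy = (compensated_y(curr[0], height_m, curr[1])
          - compensated_y(prev[0], height_m, prev[1]))
    return abs(dy) < max_dy_m
```

With a roll swing from -3 to +3 degrees at 1.5 m, a real 12 cm move shows up as roughly 28 cm in raw image coordinates and would fail the check without compensation.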

Another "breakthrough" (ha ha ha) came to my mind on the bus on the way to CZU. We should not follow the line exactly, but with an offset! That way we increase the chance of seeing the transition line to the circle. Here is the code.

OK, if you have read this far … here is the video from testing (not edited). You can see the whole team there except me, plus one visitor.

Internal notes …

Now it is time to review yesterday's log files. There are 7 of them, plus 500 MB from Jakub. The first two logs are without offset, then come speeds of 0.4 m/s, 0.6 m/s with an increasing step of 0.05 per loop, 0.75 m/s and 0.7 m/s.

I am not able to replay meta_140325_192727.log … my notes are not very good … I see that was the moment when we had speed 0.4 m/s, step 0.05, BUT there was a constant angle correction independent of desiredSpeed. OK, now the replay runs without hitting the assert.

The last log ends nicely:

BATTERY LOW! 8 !DANGER! - video delay 1.097775

… and 5 seconds later it hit the column.

Now, refactoring … I promised to delete all unused code, sigh. It must be done. Now. MCL? Gone. classifyPath? Gone. PATH_UNKNOWN and PATH_CROSSING? Gone.

The diff now looks a bit scary. Hopefully three reference tests were enough for this refactoring. There is one more round of tests tonight, so this was the last chance.

March 27th, 2014 - Sign mutation (1 day remaining)

Are you also sometimes too lazy to think through a slightly more complicated mathematical formula, so you just try it instead? And if it does not work, you randomly change signs? I would call it genetic programming (to complete the evolution, you can also add copy and paste to the process). And sometimes it is not a good idea…

I suppose I used it for navigation along the right-turn curve. It is like the left turn, just with opposite signs, right? Some mutants are quite healthy, and this one was, for a relatively long time! Have a look at the video again and closely watch the right turns (1:01-1:16, 1:41-1:57). It is easier to hear than to see.

What was the problem? We expected some magnetic anomalies, but the truth is that the signs were not quite optimal. I am not sure whether I should be ashamed or happy that we found the bug. Simply put, the right/left angle correction used the wrong side of the circle (imagine a circle and its sides … I mean there is a place where your error is close to zero, and there is also the opposite place where you are close to the math.pi, -math.pi singularity). It actually works (as you can see in the video), except that you steer with maximum left, right, left, right …
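The "wrong side of the circle" effect is easy to reproduce with angle wrapping. In the sketch below, normalize_angle is a standard wrap to (-pi, pi]. heading_error_wrong_side is one hypothetical way such a bug can look, a reconstruction for illustration rather than the actual line from the Heidi code: when the drone is on course, its error sits right next to the +pi/-pi jump, so tiny noise flips it between almost +pi and almost -pi, i.e. full left, full right.

```python
import math


def normalize_angle(angle):
    """Wrap an angle in radians to the interval (-pi, pi]."""
    angle = math.fmod(angle + math.pi, 2.0 * math.pi)
    if angle <= 0.0:
        angle += 2.0 * math.pi
    return angle - math.pi


def heading_error(desired, current):
    """Signed error the short way round the circle: close to 0 when on course."""
    return normalize_angle(desired - current)


def heading_error_wrong_side(desired, current):
    """Hypothetical buggy variant measured from the opposite side of the circle."""
    return normalize_angle(desired - current + math.pi)
```

For example, heading_error(3.1, -3.1) is about -0.08 rad instead of the raw 6.2 rad, while heading_error_wrong_side jumps from about -3.13 to +3.13 when the heading noise changes from +0.01 to -0.01 rad.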

OK, that was a good one. There are two more issues, which I would call nightmares. I know about them, but I am not quite sure what to do about them:

- losing WiFi connection

- video delay

The first one looks like this:

Traceback (most recent call last): File "M:\git\heidi\airrace_drone.py", line 237, inlauncher.launch( sys.argv, AirRaceDrone, competeAirRace ) File "M:\git\heidi\launcher.py", line 82, in launch task( robotFactory( replayLog=replayLog, metaLog=metaLog, console=console )) File "M:\git\heidi\airrace_drone.py", line 215, in competeAirRace drone.moveXYZA( sx, sy, sz, sa ) File "M:\git\heidi\ardrone2.py", line 633, in moveXYZA self.movePCMD( -vy, -vx, vz, -va ) File "M:\git\heidi\ardrone2.py", line 539, in movePCMD self.update("AT*PCMD=%i,1,"+ "%d,%d,%d,%d"%(f2i(leftRight),f2i(frontBack),f2 i(upDown),f2i(turn)) + "\r") File "M:\git\heidi\airrace_drone.py", line 79, in update ARDrone2.update( self, cmd ) File "M:\git\heidi\ardrone2.py", line 258, in update Pdata = self.io.update( cmd % self.lastSeq ) rocess Process-1: Traceback (most recent call last): File "C:\Python27\lib\multiprocessing\process.py", line 258, in _bootstrap File "M:\git\heidi\ardrone2.py", line 113, in update Process Process-2: Traceback (most recent call last): File "C:\Python27\lib\multiprocessing\process.py", line 258, in _bootstrap self.command.sendto(cmd, (HOST, COMMAND_PORT)) socket.error: [Errno 10065] A socket operation was attempted to an unreachable host self.run() File "C:\Python27\lib\multiprocessing\process.py", line 114, in run self.run() File "C:\Python27\lib\multiprocessing\process.py", line 114, in run self._target(*self._args, * *self._kwargs) self._target(*self._args, * *self._kwargs) File "M:\git\heidi\video.py", line 30, in logVideoStream data = s.recv(10240) e File "M:\git\heidi\video.py", line 30, in logVideoStream rror: [Errno 10054] An existing connection was forcibly closed by the remote host data = s.recv(10240) error: [Errno 10054] An existing connection was forcibly closed by the remote host

I wanted to clean up the dump for you somehow, and I did not realize that it is actually two crashing processes with interleaved error output (*). One process handles navdata and sends flight commands, while the other does video processing. Both crashed on a socket operation ("A socket operation was attempted to an unreachable host" and "An existing connection was forcibly closed by the remote host").

This happens after several minutes of flight from my Win7 notebook. The drone stops and hovers at its last position. The network is temporarily unavailable (in the worst case the notebook can switch to another WiFi access point, if you are not careful enough). So what now? Take a break and restart the whole program after 5 seconds? What about details like take-off, trimming the gyros, or the fact that the first segment is no longer PATH_TURN_LEFT?

The second nightmare looks like this:

    NAVI-ON
    !DANGER! - video delay 158.220822
    FRAME 20 [-1.6 1.2]
    TRANS L -> I
    I True 5
    !DANGER! - video delay 158.392327
    FRAME 22 [-1.6 1.2]
    I True 4
    !DANGER! - video delay 158.393941
    FRAME 24 [-1.6 1.2]
    I True 3
    !DANGER! - video delay 158.490557
    FRAME 26 [-1.6 1.2]
    SKIPPED2
    I True 2
    !DANGER! - video delay 158.642409

And here is the story of how it happened: I asked Jakub to read the configuration files from Heidi and Isabelle II, because for some unknown reason Heidi is still faster. Maybe she is more experienced? My old hacked program crashed after the read, and it almost did not matter … except that the recorded video was not transferred, so the drone tried to do that in the next run.

You can see similar reports from my tests last year, i.e. it is expected, but we should be careful about it. It is quite hard to navigate using video that is more than 2 minutes old.

(*) There are actually three failing processes: navdata, video and recorded video.

March 28th, 2014 - WiFi Sentinel (0 days remaining)

I cannot sleep again (the clock says 2:47am now) :-(. Yes, the nightmare(s) again. Yesterday I took a „drone day off”, but Jakub did some more tests with WiFi failures. We had several iterations of wifisentinel.py, a script that guards the WiFi connection. You write the program arguments as usual, except that you put this wrapper first, for example:

python wifisentinel.py python airrace_drone.py test

The sentinel first verifies that you are connected via one of three allowed IP addresses. If so, it starts the drone code; otherwise it waits 3 seconds and checks again. When the drone code crashes, for example due to the unreachable host problem, it again waits until the connection is re-established and runs the code again, and again, and again …
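The sentinel loop described above can be sketched with subprocess.call and an argument list (shell=False). This is an illustration, not wifisentinel.py itself: the connectivity check is passed in as a callable, the guard against wrapping the sentinel itself mirrors the "calling itself again" bug, and stopping after a clean exit code 0 is an assumption.

```python
import os
import subprocess
import time


def run_with_sentinel(cmd, is_connected, run=subprocess.call,
                      wait_s=3.0, sleep=time.sleep, max_runs=None):
    """Start cmd (an argument list, shell=False) only while connected; restart after failures.

    is_connected: callable returning True when one of the allowed IPs is ours.
    Returning on exit code 0 is an assumption, not necessarily the original behavior.
    """
    # Guard against the "calling itself again" bug.
    if any(os.path.basename(arg) == "wifisentinel.py" for arg in cmd):
        raise ValueError("refusing to wrap the sentinel itself")
    runs = 0
    while max_runs is None or runs < max_runs:
        if not is_connected():
            sleep(wait_s)      # not on an allowed network yet, try again later
            continue
        ret = run(cmd)         # blocks until the drone program exits
        runs += 1
        if ret == 0:
            return ret         # clean exit: nothing to recover
    return None
```

From the command line it would be called as run_with_sentinel(sys.argv[1:], is_connected=...), with sys.argv[1:] being e.g. ["python", "airrace_drone.py", "test"]. Injecting run and sleep also makes the loop testable without a drone.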

Probably the funniest bug was this one, where instead of the drone flying code it was calling itself again. Then there were some issues with shell=True … you can always put the shell name in front, so now it is shell=False when using subprocess.call(). Finally, the Python ipconfig code is extremely ugly and fits only my and Jakub's operating systems; I have no idea why he chose Czech Linux, where you get strange console output like:

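… inet adr:10.110.13.102 Všesměr:10.110.15.255 Maska:255.255.248.0 … AKTIVOVÁNO VŠESMĚROVÉ_VYSÍLÁNÍ BĚŽÍ MULTICAST MTU:1500 Metrika:1 …

(That is the Czech-localized form of the usual "inet addr:… Bcast:… Mask:…" and "UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1".)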

If you know how to do this better, on both Linux and Windows and without installing an extra package, let me know. I will change it (not today and not tomorrow).
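One stdlib-only answer that works on both Linux and Windows, offered as a suggestion rather than what the sentinel does: ask the OS which local address it would use to reach the drone. connect() on a UDP socket only selects a route and binds a local address; no packet is sent. 192.168.1.1 and UDP port 5556 are the AR Drone's default address and AT-command port; the function name is hypothetical.

```python
import socket


def local_ip_towards(remote_ip="192.168.1.1", port=5556):
    """Local IPv4 address the OS would use to reach remote_ip, or None if unreachable."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        s.connect((remote_ip, port))   # route selection only, nothing is transmitted
        return s.getsockname()[0]
    except OSError:
        return None                    # e.g. "network is unreachable" while WiFi is down
    finally:
        s.close()
```

The allowed-IP check then becomes local_ip_towards() in ALLOWED_IPS, with no ifconfig/ipconfig parsing and no locale issues. Note that it only tells you which interface would be used, not that the drone actually answers.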

The test was as follows:

- start drone program

- turn WiFi off during flight

- turn WiFi on again (after couple seconds)

The old code would crash immediately at step 2, and the drone would hover in place. Surprisingly, you get the same bad result with the previous revision of the new code too. Yeah, testing is important. In that case the navdata communication channel crashed, but both video processes remained active! At least I learned that there is a daemon flag for multiprocessing too. And then it went fine: Jakub repeated several WiFi failures, and Isabelle always recovered.

So what is the problem? There is still the video delay. Say the drone recovers within 15 seconds. During that period it records the area below it. It is moving slightly, and it is still recording. Then it starts to fly, and the log file looks like this:

    NAVI-ON
    USING VIRTUAL LEFT TURN CIRCLE!
    !DANGER! - video delay 20.909436
    FRAME 71 [-1.7 0.2]
    TRANS L -> I
    I True 4
    !DANGER! - video delay 20.406242
    FRAME 72 [-1.7 0.2]
    I True 3
    !DANGER! - video delay 19.874204
    FRAME 73 [-1.7 0.2]
    I True 2
    !DANGER! - video delay 19.346308
    FRAME 74 [-1.7 0.2]
    I True 1
    !DANGER! - video delay 18.818665
    FRAME 75 [-1.7 0.2]
    I True 0
    !DANGER! - video delay 18.260648

i.e. the delay gets smaller and smaller as the drone downloads the old video from the period without connection. But it also tries to fly based on these video frames!!! Believe it or not, it could be OK for a couple of seconds, until you have to switch from line to circle navigation. It uses only the old video as a reference, but it has no knowledge of how far along the figure-8 loop it is.

So now the dilemma: change otherwise tested code or not? Was it just luck that it worked fine yesterday, or should the drone rather stop and wait another couple of seconds? It seems that it is capable of downloading 2 s of video within 1 s, so it decreases the video delay by a second on every image … no, stupid me. These are different times. The decrease cannot be more than 1 second per image: the images are recorded at fixed times, every second, so if the processing took 0.001 s, the delay would decrease by 0.999 s per image, and that would take only 0.001 s of real time. So maybe it is not that bad.

Let's review this example: FRAME 71 has a 20.9 s delay and FRAME 103 a 1.6 s delay. That is 32 frames and 19.3 seconds, i.e. 0.6 s per frame, so processing needs approximately 0.4 s per frame on Jakub's older notebook. It also means that catching up took 0.4 × 32 = 12.8 s of real time; even at slow speed (0.4 m/s) this means more than 5 m! OK, I will change the code NOW!!
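The same arithmetic as a small sketch: frames are recorded every second, and processing one takes p seconds, so the delay shrinks by (1 - p) per frame. The function names are hypothetical; the 2 s limit is the maximum allowed video delay the team settled on in the March 29th entry.

```python
def processing_time_per_frame(delay_first_s, delay_last_s, frames, frame_period_s=1.0):
    """Frames are recorded every frame_period_s; if processing one takes p seconds,
    the delay shrinks by (frame_period_s - p) per processed frame."""
    shrink_per_frame = (delay_first_s - delay_last_s) / frames
    return frame_period_s - shrink_per_frame


def catch_up_distance_m(delay_first_s, delay_last_s, frames, speed_mps):
    """Distance flown in real time while working through the stale frames."""
    p = processing_time_per_frame(delay_first_s, delay_last_s, frames)
    return frames * p * speed_mps


MAX_VIDEO_DELAY_S = 2.0  # limit later used in Vienna


def video_fresh_enough(delay_s, max_delay_s=MAX_VIDEO_DELAY_S):
    """Navigate from a frame only if it is not too old."""
    return delay_s <= max_delay_s
```

For FRAME 71 to FRAME 103 this gives about 0.397 s per frame and about 5.1 m flown on stale video at 0.4 m/s.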

I am looking at the logs in detail, and it is scary; see this picture:

The default configuration is to look forward, and one of the first steps is to switch to the down-pointing camera. But it will navigate based on detected strips for a while … I am not going to change this part anyway. So you can tell even from the images that the drone was restarted.

Code for The Last Prague Test is ready, and I can go to sleep again … it is 4:46am, so I can at least try.

p.s. Yesterday very important info came from Karim (Robot Challenge organization). The schedule is updated, and for Air Race: "Each robot has to pass the homologation in order to be allowed to participate in the competition. Please don't wait till the last minute during the homologation time. In the competition, each robot has multiple attempts - the best one counts. Please don't wait till the last minute with your runs." I am waiting for confirmation, but in this case we could test the STABLE (current) version and the RISKY version (to be prepared during the drive to Vienna). And we have two drones to crash! Now I have a reason to take the spare indoor protective hull. Keep your fingers crossed for us!

March 29th, 2014 - Falling Down (11 rounds)

It is over now. Do you know the movie Falling Down? Somehow it is the first association I have now. But let's start from the beginning …

… I just realized why the robot died. As always, an originally good idea killed it …

Yesterday evening we have studied the last Friday tests, including speed

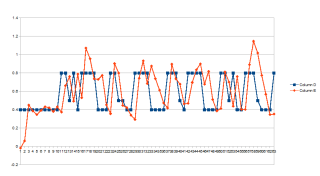

profiles. Here is the graph for max desired speed set to 0.8m/s, dropping to

0.5m/s when no strip was detected and slowing down to 0.4m/s for transitions

between turns and straight lines:
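The speed profile in the graph can be written as a small selection function. The three speeds come from the text; the function name and the rule that a transition takes priority over a missing strip are assumptions.

```python
def desired_speed(strip_detected, in_transition,
                  max_speed=0.8, no_strip_speed=0.5, transition_speed=0.4):
    """Speed in m/s: 0.8 normally, 0.5 without a strip, 0.4 in line/turn transitions.

    Giving transitions priority over a missing strip is an assumption.
    """
    if in_transition:
        return min(max_speed, transition_speed)
    if not strip_detected:
        return min(max_speed, no_strip_speed)
    return max_speed
```

The min() calls keep the slow-down speeds from ever exceeding a lower max_speed setting, e.g. during the 0.4 m/s tests.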

In the end the robot failed due to large shadows wrongly detected as a strip, so we also added a maxWidth limit. That was all we changed … we were too afraid and tired to make any other change.

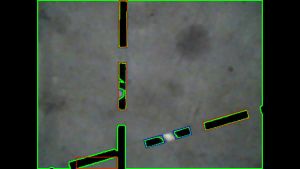

This morning we made some more changes. First of all, we set the radius to 1.25 m, because it was smaller in Vienna than in Prague. We did a first test, and there were problems with video delay, the crossing, and Isabelle's height. So we decided to increase desiredHeight to 1.7 m, limited the maximum allowed video delay to 2 s and implemented crossing detection. The crossing at Robot Challenge 2014 looked like this:

And that was it. That was the final code.

How it went I will leave for tomorrow morning … the hint why the robot crashed after completing 11 loops is already mentioned … but maybe not, as Jakub just showed me the latest images.

p.s. The Air Race 2014 - Isabelle CULS video is finally uploaded … again it is without censorship or editor's cuts, so sorry if you hear some rude words …

March 31st, 2014 - 3rd place

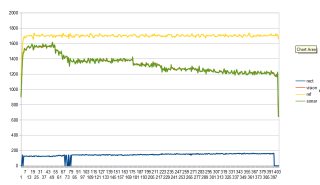

April 1st, 2014 - Altitude, Pressure and Temperature

Yesterday I tried to investigate „the falling down” problem, which caused navigation problems and Isabelle's crash after the 11th loop was completed. Here are some data for NAVDATA_ALTITUDE_TAG:

It is a little bit hard to see, but the sonar (green) and vision (red) graphs are almost identical. These are raw values in millimeters, if I remember correctly. The deadly reference is yellow. Finally, you can also see the blue graph of the maximal rectangle size (0 when no rectangle is detected); it was slowly growing but did not reach the limit of 200. So Jakub was right, and we did not break it with the maxWidth filter set to 200.

So why is the yellow reference so wrong? Well, first of all, using the altitude (drone.coord[2]) was not a good idea. It is integrated over time, also from PWM and the pressure sensor, and it has to compensate for terrain changes and detected obstacles (I know that the floor was flat, but maybe Isabelle did not know it). There was also a small breeze in the hall, which could have caused the first bigger drop (??).

The temperature was slowly increasing from 291 to 424. I have no idea about the resolution and units, so I would guess it is in tenths of a degree. Maybe it was getting hot due to the heavier battery??

Anyway, temperature and pressure data are parsed now, so you can use them in the ARDrone2 class if you want.

I just replayed an older test (meta_140326_201259.log), and the temperature there was 515, dropping to 510 and then rising to 525 … so I am not sure whether it means 52 degrees Celsius?! Could it be that the estimate is not very reliable while the drone is cold, and we usually tested several times, so the drone had warmed up?? Now I am looking at test meta_140325_180914.log, where the reference estimate is lower than the sonar and vision readings … so I would conclude: for Air Race, do not use the altitude, only the sonar and/or vision estimate of the distance from the ground.

p.s. If there is a next time, we will have to look forward: the video footage would be much more interesting than looking down.

April 11th, 2014 - Conclusion

Originally, I wanted to write more about the logs, WiFi failures, competitors and plans for Air Race 2015. There is no time left and priorities have shifted again … so I would like to thank the CZU team (mainly Jakub, who did many, many tests for several weeks: 9 GB of log files).

Did we beat last year's best semi-autonomous score? No, we did not. But the winning team Sirin, with a navigation strategy only above the cross, could have done it. They had times around 20 s per loop, i.e. 30 loops per 10 minutes. But they also had some problems … like everybody.

And what about Air Race 2015? Two Russian teams mentioned that they plan to build/use a new drone; in particular, Alex mentioned the PX4 platform. There is also a plan to modify the rules; we will see. I am definitely voting for multiple 10-minute attempts. That way we will have a chance to test a stable and a risky version, and to speed up if other competitors speed up too.

p.s. If you would like to compete with your drone in a maze, here is another contest organized by a Russian company … but there is an age limit set to 18 … never mind … but it is the lower limit.