Katarina

Parrot drone Bebop

There is another toy from Parrot: a drone called Bebop. My task is to prepare simple tracking of the Parrot Hat (the old AR Drone 2 could do that, so why not its successor?). The Bebop looks more professional at first sight: an extra battery is part of the basic package, along with spare propellers, GPS, a full HD camera, an 8 times more powerful computer, and a 14 megapixel fisheye camera with user-selectable ROI … so I am quite curious how it will work from Python. Blog update: 30/4, Paparazzi?!

This is a blog about my experience with the Parrot Bebop drone. She received the "working name" Katarina … I expected that it would roar and originally wanted to name it Katrina, but I changed my mind as there was too much destruction behind that name.

At the moment I am not sure if I will write this blog in Czech or English. We will see if the world is more interested than the Czech community … for now it will be a mix of both.

The drone is a loan from the Czech Parrot distributor (last time it was the minidrone Jessica). Thanks!

Links

- Czech Parrot distributor http://www.icornerhightech.cz/

- Parrot Bebop

- Parrot Bebop Drone Hacking

- ARDroneSDK3

- Katarina github

Content

- First talk to "dragon"

- Parsing Navdata

- generateLibARCommands.py

- Commands required

- libARNetworkAL

- PING and PONG

- ACK of ACK?

- Low latency video stream

- video.py

- Camera Tilt/Pan and Emergency

- First Crash

- ManualControlException and FlatTrim

- Fandorama

- Status quo

- PCMD @40Hz

- Terminated.

- Remote distributed debugging

- Paparazzi?!

Blog

31st January 2015 — First talk to "dragon"

I would like to write some notes in parallel to the github development, similar to other projects. It helps me to remember some details and it could better explain my steps to a random reader/developer.

Today I unpacked the Bebop and powered it up. It was quite noisy even though I did not take off … there is probably a fan for the processor (?). My first task was to take a picture, but for now I am happy that the drone started to talk to me.

I would recommend the document Parrot Bebop Drone Hacking. But the hint on how to establish communication with the drone is from here. It is necessary to do a „discovery step” before you can use the ports c2d_port and d2c_port. TCP is used on 192.168.42.1, port 44444, and you first have to send JSON to the drone with controller_type, controller_name and d2c_port. See the diff for details.

The Python script sent:

{"controller_type":"computer", "controller_name":"katarina", "d2c_port":"43210"}

and received:

{ "status": 0, "c2d_port": 54321, "arstream_fragment_size": 1000,

"arstream_fragment_maximum_number": 128, "arstream_max_ack_interval": 0,

"c2d_update_port": 51, "c2d_user_port": 21 }

To be continued.
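The discovery step from this post can be sketched roughly as follows. This is only a minimal illustration written for Python 3 (the blog itself uses Python 2.7), not the code from the Katarina repository. The helper names are made up for the example, and both the handling of a trailing NUL byte and the meaning of a non-zero status are my assumptions.

```python
import json
import socket

DRONE_IP = "192.168.42.1"   # address mentioned in the text
DISCOVERY_PORT = 44444      # TCP discovery port mentioned in the text


def build_discovery_request(d2c_port, name="katarina"):
    """Return the JSON bytes telling the drone which UDP port we listen on."""
    request = {
        "controller_type": "computer",
        "controller_name": name,
        "d2c_port": str(d2c_port),
    }
    return json.dumps(request).encode("ascii")


def parse_discovery_reply(raw):
    """Decode the JSON reply of the drone.

    ASSUMPTION: the reply may end with a NUL byte, so it is stripped, and
    a non-zero "status" is treated as a refused connection.
    """
    reply = json.loads(raw.decode("ascii").rstrip("\x00").strip())
    if reply["status"] != 0:
        raise RuntimeError("discovery refused: %r" % (reply,))
    return reply


def discover(d2c_port=43210, timeout=3.0):
    """Run the TCP handshake against a real drone and return the reply."""
    sock = socket.create_connection((DRONE_IP, DISCOVERY_PORT), timeout=timeout)
    try:
        sock.sendall(build_discovery_request(d2c_port))
        return parse_discovery_reply(sock.recv(4096))
    finally:
        sock.close()
```

With the drone powered on, `discover()["c2d_port"]` would give the UDP port for commands, while the drone starts sending data to `d2c_port`.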

2nd February 2015 — Parsing Navdata

First I would like to thank Darryl for his supportive mail. It pushed me to get some data from d2c_port. I started with copy and paste from the Heidi code (UDP sockets), so I again call them NAVDATA_PORT and COMMAND_PORT.

The code was simple:

robot = Bebop()
for i in xrange(100):
    robot.update( cmd=None )

and because it already generated logs with date/time, I have tests for 1, 10 and 100 messages (see the related diff).

The structure looks similar to Jessica (Rolling Spider): code 2, then the type of queue (?), an increasing index and the length of the whole data packet. There is now navdata.py for separate parsing, and if you run it on logged data you will see something like:

m:\git\ARDroneSDK3\katarina>navdata.py navdata_150201_211239.bin
127 1 size 35
127 2 size 23
127 3 size 23
127 4 size 19
127 5 size 23
127 6 size 23
127 7 size 19
127 8 size 23
127 9 size 23
127 10 size 19
0 1 size 15
127 11 size 23
127 12 size 23
127 13 size 19
127 14 size 23
127 15 size 23
127 16 size 19
…

The counters are separate, and so far it looks like the 0-queue contains only 15-byte messages.

This is the content:

02 00 01 0F 00 00 00 5D 01 00 00 99 56 57 0C
02 00 02 0F 00 00 00 5E 01 00 00 87 C8 93 0D
02 00 03 0F 00 00 00 5F 01 00 00 A7 9E A7 0D
02 00 04 0F 00 00 00 60 01 00 00 CF 39 B7 0D
02 00 05 0F 00 00 00 61 01 00 00 0D EB F7 0E
02 00 06 0F 00 00 00 62 01 00 00 A2 85 0E 0F

So again some counter and checksum or time??

In the 127 (0x7F) "queue" the 19-byte messages look the same all the time (except for the increasing counter):

02 7F 04 13 00 00 00 01 04 08 00 00 00 00 00 00 00 00 00

There are two types of 23-byte messages:

02 7F 02 17 00 00 00 01 04 06 00 E8 6D E6 3C F5 7D 20 BC AA 4D 85 3B
02 7F 03 17 00 00 00 01 04 05 00 00 00 00 00 00 00 00 00 00 00 00 00
02 7F 05 17 00 00 00 01 04 06 00 5B 48 E8 3C 08 D0 1E BC 28 F0 84 3B
02 7F 06 17 00 00 00 01 04 05 00 00 00 00 00 00 00 00 00 00 00 00 00

And finally the 35-byte message is so far always the same:

02 7F 01 23 00 00 00 01 04 04 00 00 00 00 00 00 40 7F 40 00 00 00 00 00 40 7F 40 00 00 00 00 00 40 7F 40
02 7F 11 23 00 00 00 01 04 04 00 00 00 00 00 00 40 7F 40 00 00 00 00 00 40 7F 40 00 00 00 00 00 40 7F 40

Well, it may take a while to find the corresponding file in ARDroneSDK3 …

3rd February 2015 — generateLibARCommands.py

You will not find a useful file; you have to generate it first! In particular you will need libARCommands and probably also the main ARSDKBuildUtils. Then you need to locate generateLibARCommands.py and run it with the parameter -projects ARDrone3 (you will not find the code name "Bebop").

m:\git\ARDroneSDK3\libARCommands\Xml>generateLibARCommands.py -projects ARDrone3

Then you can find desired files: ARCOMMANDS_Ids.h, ARCOMMANDS_Filter.c

and ARCOMMANDS_Decoder.c. Interesting lines from ARCOMMANDS_Filter.c

are:

commandProject = ARCOMMANDS_ReadWrite_Read8FromBuffer (…);
commandClass = ARCOMMANDS_ReadWrite_Read8FromBuffer (…);
commandId = ARCOMMANDS_ReadWrite_Read16FromBuffer (…);

So if you look for the byte commandProject = 1 (ARCOMMANDS_ID_PROJECT_ARDRONE3), you will find combinations such as 01 04 04 00 in the 35-byte messages from the previous post: commandClass = 4 (ARCOMMANDS_ID_ARDRONE3_CLASS_PILOTINGSTATE) and commandId = 4 (ARCOMMANDS_ID_ARDRONE3_PILOTINGSTATE_CMD_POSITIONCHANGED). Now you can look up the decoding routine in ARCOMMANDS_Decoder.c:

_latitude = ARCOMMANDS_ReadWrite_ReadDoubleFromBuffer (…);
_longitude = ARCOMMANDS_ReadWrite_ReadDoubleFromBuffer (…);
_altitude = ARCOMMANDS_ReadWrite_ReadDoubleFromBuffer (…);

All three 8bytes values are 00 00 00 00 00 40 7F 40.
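To see what those 8 bytes mean, here is a small sketch of the same decoding in Python 3 with the struct module. It assumes little-endian byte order (which matches commandId being stored as 04 00) and simply skips the 7 bytes that precede the command header; the function name is only illustrative.

```python
import struct

# One of the 35-byte messages from the previous post.
MESSAGE = bytes.fromhex(
    "02 7F 01 23 00 00 00 01 04 04 00 "
    "00 00 00 00 00 40 7F 40 "
    "00 00 00 00 00 40 7F 40 "
    "00 00 00 00 00 40 7F 40"
)


def decode_position_changed(message):
    """Return (latitude, longitude, altitude) from a PositionChanged message."""
    payload = message[7:]  # skip the 7 bytes in front of the command header
    # uint8 project, uint8 class, uint16 command id (ASSUMPTION: little-endian)
    project, cls, cmd = struct.unpack_from("<BBH", payload, 0)
    if (project, cls, cmd) != (1, 4, 4):
        raise ValueError("not an ARDRONE3 PILOTINGSTATE POSITIONCHANGED message")
    # three 8-byte doubles follow the 4-byte command header
    return struct.unpack_from("<ddd", payload, 4)


print(decode_position_changed(MESSAGE))  # (500.0, 500.0, 500.0)
```

So all three values decode to 500.0. That is not a valid latitude, so it is presumably just a placeholder reported while there is no GPS fix (my guess, the post does not say).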

- ARCOMMANDS_ID_ARDRONE3_PILOTINGSTATE_CMD_SPEEDCHANGED = 5 … float _speedX, _speedY, _speedZ;

- ARCOMMANDS_ID_ARDRONE3_PILOTINGSTATE_CMD_ATTITUDECHANGED = 6 … float _roll, _pitch, _yaw;

- ARCOMMANDS_ID_ARDRONE3_PILOTINGSTATE_CMD_ALTITUDECHANGED = 8 … double _altitude;

That could be enough to get started. See the related diff.

p.s. I expected a copy and paste error, but the names really are different: "ATTITUDE" vs. "ALTITUDE".

4th February 2015 — Commands required

I asked Darryl to repeat my first step and in the meantime I also did it myself this morning. And it did not work :-(. At least 100 messages did not go through. When I set the limit to 10 or even 50 it was OK. So there is some time limit within which you have to send some command to the drone.

I did not mention this, but just for reference I am using Windows 7 and Python 2.7. On the other hand it should so far work on other OSs too (at the moment there is no dependency, but that will probably soon change and the OpenCV2 and NumPy libraries will be required).

So how to send a command, and which command would not be too dangerous? It is surely not a good idea to send takeoff first. There are commands like SetHome and ResetHome which should generate some drone response, and without flying they should not be harmful.

The encoding scheme is similar to decoding:

ARCOMMANDS_ReadWrite_AddU8ToBuffer(…ARCOMMANDS_ID_PROJECT_ARDRONE3…);
ARCOMMANDS_ReadWrite_AddU8ToBuffer(…ARCOMMANDS_ID_ARDRONE3_CLASS_GPSSETTINGS…);
ARCOMMANDS_ReadWrite_AddU16ToBuffer(…ARCOMMANDS_ID_ARDRONE3_GPSSETTINGS_CMD_RESETHOME…);

and

ARCOMMANDS_ReadWrite_AddU8ToBuffer(…ARCOMMANDS_ID_PROJECT_ARDRONE3…);
ARCOMMANDS_ReadWrite_AddU8ToBuffer(…ARCOMMANDS_ID_ARDRONE3_CLASS_GPSSETTINGS…);
ARCOMMANDS_ReadWrite_AddU16ToBuffer(…ARCOMMANDS_ID_ARDRONE3_GPSSETTINGS_CMD_SETHOME…);
ARCOMMANDS_ReadWrite_AddDoubleToBuffer(…_latitude…);
ARCOMMANDS_ReadWrite_AddDoubleToBuffer(…_longitude…);
ARCOMMANDS_ReadWrite_AddDoubleToBuffer(…_altitude…);

The question is what it is necessary to send before these encoded bytes??

I will at least answer one of Darryl's questions: How could you tell about counter, checksum, and time?? Well, I was probably wrong about the checksum, and the time, or better the timestamp, I guessed because the 4-byte number was increasing (and I may be wrong). But I am pretty sure about the counter. Parrot used that in ARDrone2 (absolute increasing uint32) and also for Rolling Spider (uint8). Packets can be lost in UDP communication and one way to recognize this is to index them. The packets also do not have to arrive in the original order, although that is probably not the case with a direct WiFi connection.
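Detecting lost packets from such a counter takes only a few lines. The following Python 3 sketch is my illustration, not code from the repository; it assumes a one-byte counter that wraps around from 255 to 0 and packets that arrive in order.

```python
def lost_between(previous_seq, current_seq, modulo=256):
    """Number of packets missing between two consecutively received packets.

    The counter is assumed to wrap around at `modulo` (256 for uint8).
    """
    return (current_seq - previous_seq - 1) % modulo


def count_lost(sequence_numbers, modulo=256):
    """Sum the gaps over a list of received sequence numbers."""
    lost = 0
    for previous_seq, current_seq in zip(sequence_numbers, sequence_numbers[1:]):
        lost += lost_between(previous_seq, current_seq, modulo)
    return lost


print(count_lost([1, 2, 3, 5, 6]))  # 1, packet 4 never arrived
print(lost_between(254, 1))         # 2, packets 255 and 0 are missing
```

Note the limitation: a duplicated or reordered packet would show up here as a huge gap (255 for a duplicate), so real code would have to treat those cases separately.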

5th February 2015 — libARNetworkAL

Lesson learned: if you want to play with ARDrone3 download ALL 14

repositories!!! Do not be lazy like me! Otherwise you may omit for example

libARNetworkAL. It is the place where you will find this structure:

typedef struct
{
    uint8_t type; /* frame type eARNETWORK_FRAME_TYPE */
    uint8_t id; /* identifier of the buffer sending the frame */
    uint8_t seq; /* sequence number of the frame */
    uint32_t size; /* size of the frame */
    uint8_t *dataPtr; /* pointer on the data of the frame */
}

Not interested? Well, review the collected data now. 02 is type and it corresponds to ARNETWORKAL_FRAME_TYPE_DATA: Data type. Main type for data that does not require an acknowledge.

The next item was 00 or 7F and it is id. The seq was guessed correctly, but size is not a single byte but a whole uint32, which explains the three zeros. And the rest we were already able to decode (see diff).
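Mapped to Python 3, the header described above is just seven bytes: three uint8 values followed by a uint32. The sketch below assumes little-endian byte order (consistent with 0F 00 00 00 meaning 15); the Frame tuple is my own naming, not something from the SDK.

```python
import struct
from collections import namedtuple

Frame = namedtuple("Frame", "type id seq size payload")

HEADER_SIZE = 7  # uint8 type + uint8 id + uint8 seq + uint32 size


def parse_frame(data, offset=0):
    """Parse one frame starting at `offset`; `size` includes the header."""
    frame_type, frame_id, seq, size = struct.unpack_from("<BBBI", data, offset)
    payload = data[offset + HEADER_SIZE:offset + size]
    return Frame(frame_type, frame_id, seq, size, payload)


frame = parse_frame(bytes.fromhex(
    "02 7F 04 13 00 00 00 01 04 08 00 00 00 00 00 00 00 00 00"))
print(frame.type, frame.id, frame.seq, frame.size)  # 2 127 4 19
```

The payload then starts with the project/class/command bytes (here 01 04 08 00) discussed in the previous post.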

So now, what to do with the problem from yesterday? How to encode the whole command packet? Based on ARNETWORKAL_WifiNetwork.c and the function ARNETWORKAL_WifiNetwork_PushFrame it should be the same frame as we just decoded. I would guess that, as for Jessica, type=2, but I am not sure about id. According to ARNETWORK_IOBufferParam.h in libARNetwork: ID, Identifier used to find the IOBuffer in a list - Valid range : 10-127.

Here is my first attempt to send a command: unsuccessful :-(. But I received new messages. In particular

04 7E 01 23 00 00 00 01 18 00 00 00 00 00 00 00 40 7F C0

00 00 00 00 00 40 7F C0 00 00 00 00 00 40 7F C0

where 04 is a message with required acknowledgement (ARNETWORKAL_FRAME_TYPE_DATA_WITH_ACK), and 01 18 00 00 is ARCOMMANDS_ID_PROJECT_ARDRONE3 = 1, ARCOMMANDS_ID_ARDRONE3_CLASS_GPSSETTINGSSTATE = 24, ARCOMMANDS_ID_ARDRONE3_GPSSETTINGSSTATE_CMD_HOMECHANGED = 0 … so maybe I was successful in the end! This worked for id=10, but did not work for 0x7F and 0x40.

Confirmed. So one more small diff. Also for frameId=10 I received all 10+100 messages, while for the other two "random" channels it stopped before 100.

OK, so the next step will be confirmation of the received message (type 4).
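For illustration, the command frame that got through (type 2, frameId 10) could be packed like this in Python 3. It is only a sketch: the numeric ids for the GPSSETTINGS class (23) and the RESETHOME command (1) are my assumption and should be checked against the generated ARCOMMANDS_Ids.h, and the function names are invented for the example.

```python
import struct

FRAME_TYPE_DATA = 2   # ARNETWORKAL_FRAME_TYPE_DATA
COMMAND_FRAME_ID = 10  # the id that worked in the experiment above


def pack_frame(frame_type, frame_id, seq, payload):
    """Prefix the payload with the 7-byte header; size includes the header."""
    size = 7 + len(payload)
    return struct.pack("<BBBI", frame_type, frame_id, seq, size) + payload


def reset_home_payload():
    """Project, class and command id of ResetHome (no arguments).

    ASSUMPTION: ARDRONE3 = 1, CLASS_GPSSETTINGS = 23, CMD_RESETHOME = 1.
    """
    return struct.pack("<BBH", 1, 23, 1)


packet = pack_frame(FRAME_TYPE_DATA, COMMAND_FRAME_ID, 1, reset_home_payload())
print(packet.hex())  # 020a010b000000011701 followed by 00
```

The seq byte has to increase with every frame sent on the same frameId, so the caller should keep one counter per id.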

15th February 2015 — PING and PONG

Well, nothing really exciting yet. I tried to confirm (acknowledge) the message about GPS home changed, and I did not succeed. But in that particular piece of code (see the original ARNETWORK_Receiver.c:191) there is an extra switch for handling frame.id ARNETWORK_MANAGER_INTERNAL_BUFFER_ID_PONG. If you look this ID up you will find that it is 0. And what is it?

It looks like these are the 15-byte messages starting with the 02 00 XX 0F prefix. The payload is struct timespec, which should be 8 bytes (time_t and long), probably 4 bytes each (seconds, nanoseconds).

Time 176.160592961
Time 177.181624819
Time 178.202618948
Time 179.362471135
Time 180.38329366

So it looks like a PING every second, and because it is not PONGed the connection is lost after a while (I guess).

There was a small surprise regarding UDP packets. One packet can contain several messages and thus simple logging (see diff) is not sufficient. So far I have seen only the combination of standard messages with these PING messages, but who knows …
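Because the size field covers the whole frame, one UDP packet can be walked frame by frame. Here is a rough Python 3 sketch of that splitting, together with decoding of the PING time under the guess from above (two little-endian uint32 values, seconds and nanoseconds). The function names are mine.

```python
import struct


def split_packet(packet):
    """Split one UDP packet into (type, id, seq, payload) tuples."""
    frames = []
    offset = 0
    while offset + 7 <= len(packet):
        frame_type, frame_id, seq, size = struct.unpack_from("<BBBI", packet, offset)
        if size < 7 or offset + size > len(packet):
            raise ValueError("corrupted frame at offset %d" % offset)
        frames.append((frame_type, frame_id, seq, packet[offset + 7:offset + size]))
        offset += size
    if offset != len(packet):
        raise ValueError("trailing bytes after the last frame")
    return frames


def ping_time(payload):
    """Decode the 8-byte PING payload as seconds + nanoseconds (a guess)."""
    seconds, nanoseconds = struct.unpack("<II", payload)
    return seconds + nanoseconds / 1e9


# A standard 19-byte message followed by a 15-byte PING in the same packet.
packet = bytes.fromhex(
    "02 7F 04 13 00 00 00 01 04 08 00 00 00 00 00 00 00 00 00 "
    "02 00 01 0F 00 00 00 5D 01 00 00 99 56 57 0C"
)
for frame_type, frame_id, seq, payload in split_packet(packet):
    if frame_id == 0:
        print("Time", ping_time(payload))
```

For this packet the PING decodes to 349 seconds and 207050393 nanoseconds.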

Update:

- the UDP packets are now split for reliable record and replay + asserts On/Off (diff)

- I got ping-pong working and tested it on 1000 messages (diff)

- thanks to the Jumping Sumo sample I know what the configuration looks like … frame.id = 10/127 for normal messages, 11/126 for acknowledged

- I received new message 04 7E 02 0C 00 00 00 00 05 01 00 38 which should mean ARCOMMANDS_ID_PROJECT_COMMON = 0, ARCOMMANDS_ID_COMMON_CLASS_COMMONSTATE = 5, ARCOMMANDS_ID_COMMON_COMMONSTATE_CMD_BATTERYSTATECHANGED = 1, value 0x38=56% … so it is probably OK, that I did not receive this always

16th February 2015 — ACK of ACK?

There was a big mistake in the code yesterday (yes, I wrote that code). It is necessary to pack the payload for a normal command, but special cases like ACK or PING are already prepared to be sent as-they-are (see fix). This means that ping-pong was probably not working, only the communication kept the drone busy. After the change I received strange 8-byte messages. They had frameType 0x1 (ARNETWORKAL_FRAME_TYPE_ACK) and they were coming with frameId 0x8B?! In HEX code it is more visible. Now I would guess that it was an acknowledge of my (wrongly composed) acknowledge of the original home changed or battery status changed, sent from frameId 11 = 0xB.

I am convinced now that frameId 10 is for sending standard messages without acknowledgement and 11 for any (?) messages for which I would like to get an acknowledgement. I probably did not succeed in sending the ACK, because the message from the drone was still repeated. The plan is to try (again) frameType 0x1 (ARNETWORKAL_FRAME_TYPE_ACK: Acknowledgment type. Internal use only … but I am probably working on "internal" now), and frameId = 0x7E + 0x80 … I wonder what is the chance of success :-(.

p.s. yesterday I saw the drone die on low battery again. The last message was something like Battery 32%, so either the main computer eats a lot or the auto-shutdown threshold is set relatively high.

p.s.2 it looks like it worked (diff)

…
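A sketch of how such an ACK frame could be composed in Python 3 follows. The frameType 1 and the frameId + 0x80 rule are from the text above. That the one-byte payload carries the sequence number of the acknowledged frame is my assumption; it would at least fit the 8-byte ACK messages (7-byte header plus one byte). The function name is hypothetical.

```python
import struct

FRAME_TYPE_ACK = 1  # ARNETWORKAL_FRAME_TYPE_ACK


def ack_frame(received_id, received_seq, ack_seq):
    """Build an ACK for a type-4 frame received on `received_id`.

    ASSUMPTION: the payload is the seq of the frame being acknowledged,
    and `ack_seq` is our own counter for the ACK channel.
    """
    ack_id = received_id + 0x80  # e.g. 0x7E -> 0xFE
    payload = struct.pack("<B", received_seq)
    header = struct.pack("<BBBI", FRAME_TYPE_ACK, ack_id, ack_seq, 7 + len(payload))
    return header + payload


# Acknowledge the battery message 04 7E 02 ... received from the drone.
print(ack_frame(0x7E, 0x02, ack_seq=1).hex())  # 01fe010800000002
```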

17th February 2015 — Low latency video stream

It is time for the next step. I found

ARCOMMANDS_ID_ARDRONE3_MEDIASTREAMING_CMD_VIDEOENABLE in the list of IDs

and tried to call it (see

diff).

There were many new messages almost 1kB long and they looked like:

03 7D 7D F4 03 00 00 07 00 01 00 1F 00 00 00 01 27 42 E0 28 …
03 7D 7E F4 03 00 00 07 00 01 01 1F DE 11 5A 4E 70 5E BE F6 …
03 7D 7F F4 03 00 00 07 00 01 02 1F 96 C4 44 3E A3 7F 0E 0D …
03 7D 80 F4 03 00 00 07 00 01 03 1F D1 8B 07 A2 57 2A 86 C7 …
…

At first I was happy to see 00 00 00 01, which is used as the start tag in the H.264 video codec. I cut that part out and tried to replay it with the old Heidi code. I saw image patches of reality, but also many errors.

Later I recorded longer communication (instead of 100 messages it was 1000 and 5000) and I was surprised that not all packets arrived and that they were repeating. Why? The answer is simple: they have to be acknowledged.

Note the 03 at the beginning: this is a new type of frame (ARNETWORKAL_FRAME_TYPE_DATA_LOW_LATENCY). Also note that there is a new frameId 7D, and according to the Jumping Sumo Receive Stream sample the acknowledge frameId could be equal to JS_NET_CD_VIDEO_ACK_ID = 13.

Both I/O structures look reasonable (see

libARStream/ARSTREAM_NetworkHeaders.h):

typedef struct {
    uint16_t frameNumber; /** id of the current frame */
    uint8_t frameFlags; /** Infos on the current frame */
    uint8_t fragmentNumber; /** Index of the current fragment in current frame */
    uint8_t fragmentsPerFrame; /** Number of fragments in current frame */
} __attribute__ ((packed)) ARSTREAM_NetworkHeaders_DataHeader_t;

So in my example 07 00 01 00 1F, frameNumber = 7, frameFlags is so far always 1 (FLUSH FRAME), fragmentNumber is increasing and 1F is fragmentsPerFrame.

Now it is time to implement video packet acknowledgement:

typedef struct {
    uint16_t frameNumber; /** id of the current frame */
    uint64_t highPacketsAck; /** Upper 64 packets bitfield */
    uint64_t lowPacketsAck; /** Lower 64 packets bitfield */
} __attribute__ ((packed)) ARSTREAM_NetworkHeaders_AckPacket_t;

i.e. there are up to 128 frame fragments, each of which has its own confirmation bit.
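Both structures map directly to struct format strings. The Python 3 sketch below parses the data header and collects the confirmation bits for one video frame. It assumes little-endian packing and that fragment n corresponds to bit n (bits 0-63 in lowPacketsAck, 64-127 in highPacketsAck); the class and function names are only illustrative.

```python
import struct


def parse_stream_header(payload):
    """Return (frameNumber, frameFlags, fragmentNumber, fragmentsPerFrame, data)."""
    header = struct.unpack_from("<HBBB", payload, 0)
    return header + (payload[5:],)


class FrameAck:
    """Collects which fragments of one video frame have arrived."""

    def __init__(self, frame_number):
        self.frame_number = frame_number
        self.bits = 0  # 128-bit field, bit n set when fragment n arrived

    def mark(self, fragment_number):
        if not 0 <= fragment_number < 128:
            raise ValueError("fragment number out of range")
        self.bits |= 1 << fragment_number

    def complete(self, fragments_per_frame):
        return self.bits == (1 << fragments_per_frame) - 1

    def pack(self):
        """Pack as frameNumber, highPacketsAck, lowPacketsAck (18 bytes)."""
        low = self.bits & 0xFFFFFFFFFFFFFFFF
        high = self.bits >> 64
        return struct.pack("<HQQ", self.frame_number, high, low)


number, flags, fragment, total, data = parse_stream_header(
    bytes.fromhex("07 00 01 00 1F 00 00 00 01 27"))
ack = FrameAck(number)
ack.mark(fragment)
print(number, flags, fragment, total, ack.pack().hex())
```

The packed ACK would then be sent as the payload of a frame on the video acknowledge id.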

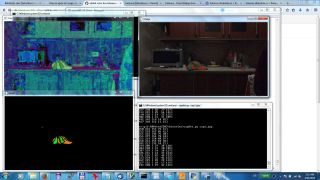

18th February 2015 — video.py

It looks like the video packet confirmation works (see the code diff). Note that the frame fragments can arrive in random order and there can also be some duplicates. Because I wanted to see the video and I was not patient enough, I wrote a simple brute force utility which sorts the fragments in memory and creates a video file (see video.py). And it works.
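The idea of such a brute force reassembly can be sketched in a few lines of Python 3. This is not the actual video.py, just an illustration; in particular, skipping frames with missing fragments is my own choice here.

```python
def assemble_frames(fragments):
    """Join video fragments into one byte string.

    `fragments` is an iterable of tuples
    (frame_number, fragment_number, fragments_per_frame, data)
    in arrival order, possibly shuffled and with duplicates.
    """
    parts = {}     # frame_number -> {fragment_number: data}
    expected = {}  # frame_number -> fragments_per_frame
    order = []     # frame numbers in order of first appearance
    for frame_number, fragment_number, per_frame, data in fragments:
        if frame_number not in parts:
            parts[frame_number] = {}
            expected[frame_number] = per_frame
            order.append(frame_number)
        # keep the first copy, ignore duplicates
        parts[frame_number].setdefault(fragment_number, data)

    video = b""
    for frame_number in order:
        frame = parts[frame_number]
        count = expected[frame_number]
        if all(i in frame for i in range(count)):
            video += b"".join(frame[i] for i in range(count))
        # incomplete frames are skipped (a design choice of this sketch)
    return video


received = [(7, 1, 2, b"B"), (7, 0, 2, b"A"), (7, 1, 2, b"B"), (8, 0, 2, b"C")]
print(assemble_frames(received))  # b'AB', frame 8 is incomplete
```

Frames are emitted in order of first appearance rather than sorted by number, because the 16-bit frameNumber eventually wraps around.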

I am using code from Heidi to replay the video file (rr_drone.py or directly airrace.py). But because the file does not contain PaVE headers any more, you can load it directly with OpenCV:

import cv2
cap = cv2.VideoCapture( "video.bin" )
ret, frame = cap.read()
cv2.imwrite( "first-video.jpg", frame )

and this is my first result:

first successfully transferred video frame

I know that the picture of the side view of our fridge is not very exciting.

If you want just to see the frame(s), use:

cv2.imshow('image', frame)

cv2.waitKey(0)

with the wait in milliseconds, or 0 to pause until the user presses any key. And finally, if you want your code to be as clean as it should be, release everything at the end:

cap.release()
cv2.destroyAllWindows()

Yesterday I finally took off. It was only in the Free Flight 3 application but still it was exciting. The bad news is that Bebop has the same problem as Rolling Spider: you cannot land until you complete the takeoff sequence :-(. That means trouble and I hope Parrot will change this soon in a future firmware.

The second observation was related to image stabilization: I did not notice it before, or there is some setting to turn it on/off, but if you tilt your drone the image does not tilt. The same holds if you point it up and down, the image remains stable. The application asked for magnetometer calibration before (which I did not do because I was afraid that it would take off), and yesterday we calibrated it. So maybe that is why it is now working?

What to do next? I plan to try moving the image ROI (region of interest), switch to HD video resolution (from the picture you can see that it was 640x368), take a 14 megapixel picture … and probably finally take off, move and land autonomously.

20th February 2015 — Camera Tilt/Pan and Emergency

Yesterday I experimented with camera tilt and pan (diff). It was fun, because it worked immediately. Yes, except for the detail that the byte for setting the camera angle is signed, so packing failed once, but the fix was trivial.
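The signed byte issue is easy to reproduce with the struct module in Python 3. In the sketch below the numeric ids (class CAMERA = 1, command ORIENTATION = 0) are my assumption and should be checked against the generated ARCOMMANDS_Ids.h; the function name is hypothetical.

```python
import struct


def camera_orientation_payload(tilt, pan):
    """Pack the camera orientation command with signed 8-bit angles.

    ASSUMPTION: project ARDRONE3 = 1, class CAMERA = 1, cmd ORIENTATION = 0.
    Format "b" is a signed byte (-128..127), "B" would be unsigned.
    """
    return struct.pack("<BBHbb", 1, 1, 0, tilt, pan)


print(camera_orientation_payload(-10, 5).hex())  # 01010000f605

try:
    struct.pack("<B", -10)  # unsigned byte cannot hold a negative angle
except struct.error as error:
    print("packing failed:", error)
```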

I also implemented the utility play.py, basically a short piece of code for playing video as I described it in the previous report. Now, as soon as you finish your test, you can replay your video directly from the navdata log file.

This morning I tried the first takeoff(). It is always exciting, a kind of adrenalin fun, because you do not know what will happen. I was holding Katarina tight, and called robot.takeoff() followed by robot.emergency() from a Python script, i.e. cut the motors as soon as you start them. What would you expect to happen? The propellers were still turning after the script terminated!

You can turn Bebop upside down and that stops the motors, but still … it was a surprise even though I was ready for everything.

Here is the code plus extra lines for parsing new messages: calibration info, flight number info and piloting state.

I suppose that there will be the same problem as with Rolling Spider, i.e. I will have to wait until the takeoff sequence is completed before I can land :-(. BTW this is one of the reasons I am a bit afraid to take off in this narrow space.

21st February 2015 — First Crash

„I told you there will be a problem!” … and yes, there was. I moved part of the furniture in the living room to have enough space for the first takeoff test (code diff). The first test was over quickly: battery low, battery critical and stop, so the propellers hardly turned.

I swapped batteries (Bebop has a second battery in the standard package, which is very nice), took off, and during hover the drone slid a little bit to the left, hit the clothes dryer and landed. The video is not very exciting, so I picked at least one video frame recorded by the drone while it is falling/landing and sees its own protective hull:

Later that day I also did an outdoor test with the Free Flight 3 application. And that looked even more scary. There was a light breeze and at one moment the drone was completely uncontrollable (WiFi connection lost?). I wonder how the black box works and if any data are stored? I am also curious whether Flight No XXX was recorded by default?

Sigh. Nothing. Based on the Bebop hacking page I would have had to enable Black Box in /etc/debug.conf. During the tests in the underground garage we probably turned off automatic video recording, so there is nothing from yesterday. Sigh. On the other hand the quality of the recorded garage video is great (full HD, stable image).

22nd February 2015 — ManualControlException and FlatTrim

Katarina is finally slowly learning the same commands as Heidi. Today I added ManualControlException, something like the red Emergency STOP button on every robot. For the flying drone this means land as soon as you hit any key on the keyboard. The bad news is that there is no platform-independent kbhit, so at the moment it works only under Windows. There was an implementation with pygame in the Linux version (Isabelle), but I have not tested it yet and it is necessary to create some window and initialize the pygame library. An alternative is Joris's Linux version: TODO (let me know if you would like to use Katarina's code on Linux or Mac). Here is the slightly bigger diff. Note that apyros/sourcelogger.py is a copy from the Heidi code.

Any exciting moments? … well, at least two. First of all the drone was always moving to the left as soon as it switched to hover mode. So yesterday it was not necessarily the breeze, but probably the missing flat trim. Fixed. Now it stays in place.

The second funny moment was that I forgot the land() command in the normal run. When I hit the emergency button everything was fine, but then I let that be; actually I hit the button outside the try..except block and the drone was still flying and flying. The new lesson is that you can grab the Bebop with the same trick as ARDrone2: one hand from the top, so you do not disturb the down-pointing camera and sonar, then the second hand from the bottom, and twist it upside down.

The last step I did today was some cleaning (see diff), so now the commands are in a separate file, trim() is finished as soon as the drone confirms it, and takeoff() and land() are terminated by the flying state change.

What next? Redirect incoming video frames to an extra working thread and look for the two-color cap:

23rd February 2015 — Fandorama

I will switch this blog to the Czech language for a while. I did not expect it, but in the end the Fandorama fundraising project was successful. There will surely be updates on github, and I will probably write up major changes/discoveries in both languages, but … let me know if you find this article/blog interesting, and if I should explain or translate some parts.

20th March 2015 — Status quo

It is hard to believe that it is almost a month since I switched to Czech „blogging”. There is some progress but it is still far from perfect. In particular I am now fighting with the streamed video, which sometimes stops, and I have to power down the drone to restart it. No idea why. On the other hand on-board video recording works fine, so I have an „internal” reference, but that is useless for autonomous navigation. It is somehow related to flying … maybe I have to send ping messages myself to keep the drone going??

I did some experiments in our garden and it turned out that the grass is not well suited for landing (it was good in one crash case, but now I mean „standard successful automatic landing”). That was an old task for ARDrone2, when the detection was done by Parrot's code. This is no longer true, so I have to do it myself:

There is a new folder behaviors, where you can find experimental navigation to a box, navbox.py. Image multiprocessing is prepared but not fully integrated yet. It should be fun as soon as the video stream is reliable.

What else? I did some experiments with navdata and black box according to the forum thread Hacking the Bebop. Both collect data on-board, including sonar and EKF estimates, but they are not streamed over the network :-( … see Nicolas's note on GitHub.

There is a new Parrot Bebop Protocol document. The motivation to write it down came from a British forum, but I have no idea if it was really useful for anybody …

Yeah, and some fun from an American forum … a fracking genius … can read hex like Neo reads the Matrix! … ha ha ha. No, not really. But speaking of HEX … I did some (more) experiments with H.264 codec decoding for ARDrone3 (in Czech, H264 Drone Vision). And surprise, surprise … the codec is different. While ARDrone2 was sending only 16x16 macroblocks, you get finer compression on Bebop with sub-blocks down to 4x4. This means that the H264 navigation code needs some work to port it to the 3rd generation of Parrot's drones.

22nd March 2015 — PCMD @40Hz

This one is worth mentioning immediately. Now I know why the video streaming fails once in a while! The reason is the timing of PCMD messages … but let's start from the beginning.

On Saturday I finally created an example where the video streaming fails without the need to fly (see diff). Later I wrote an even simpler example (diff), i.e. as soon as I sent the first PCMD the video stopped and was not restored until the next power down and power up.

So what to do? I am glad I wrote to ARDroneSDK3/libARCommands/issues. And surprise, surprise, I got an answer today!

When using the PCMDs, you should make sure that your send them with a fixed

frequency (we use 40Hz / 25ms in FreeFlight), even if you're sending the same

command every 25ms.

A change in the PCMD frequency is considered by the Bebop to be a "bad wifi"

indicator. In this case, the bebop will decrease the video bandwidth (up to a

"no video" condition if the indicator is really bad) in order to save the

bandwidth for the piloting commands. When the PCMD frequency become stable

again, the video bandwidth will rise again.

This explains a lot, in particular why it sometimes worked. If it was a fixed loop, like flying to a requested altitude, it was OK, but as soon as I switched to another task the timing was broken.

Here is a small hack confirming Nicolas's answer … it works, even for the second run. But … yes, there is still a but: it works only while the Thread sending PCMD at 40Hz is running, so I am asking now if it is necessary to send PCMD all the time, or if there is a way to say „I do not want to send more PCMD!”.
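The idea of a separate thread sending the latest PCMD at a fixed rate can be sketched in Python 3 as follows. This is my illustration of the approach, not the hack from the repository; the class name and the `send` callback (for example a function writing to the command UDP socket) are assumptions.

```python
import threading
import time


class PeriodicSender(threading.Thread):
    """Repeat the latest command every `period` seconds (25 ms = 40 Hz)."""

    def __init__(self, send, period=0.025):
        super().__init__(daemon=True)
        self._send = send  # callable taking the packed command bytes
        self._period = period
        self._lock = threading.Lock()
        self._command = None
        self._stop_event = threading.Event()

    def set_command(self, command):
        """Replace the command which is repeated by the thread."""
        with self._lock:
            self._command = command

    def run(self):
        next_time = time.monotonic()
        while not self._stop_event.is_set():
            with self._lock:
                command = self._command
            if command is not None:
                self._send(command)
            # schedule against absolute time so that delays do not accumulate
            next_time += self._period
            self._stop_event.wait(max(0.0, next_time - time.monotonic()))

    def stop(self):
        self._stop_event.set()
        self.join()
```

The main loop then only calls `set_command()` with a new PCMD whenever the requested motion changes, and the thread keeps the frequency stable regardless of how long image processing or other tasks take.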

8th April 2015 — Terminated.

Well, I knew that The Day would come, but still it caught me unprepared. The distributor asked me to return the drone because of some presentation. OK, what can I do. Katarina is not mine. I could buy one, but do I want to??

Interestingly enough, it was after the Easter weekend that I decided that if I want to move forward I will have to write the firmware myself or use/learn from others. Yesterday I downloaded the ardupilot-mpng and paparazzi repositories … and then I received that mail.

Somehow I lost the motivation to practise landing yesterday and do the cap tracking with multiprocessing today. I deleted videos and thumbnails from the drone and I will return it in two hours … Goodbye Katarina!

14th April 2015 — Remote distributed debugging

Thanks Charles and others for mails, queries and issues on GitHub. I am not giving up yet. I do not have a Bebop now, but that will probably change in May 2015. So now I am debugging remotely, as with dropping incomplete video frames (diff). This one I did from old log files, but I can reproduce the problems even from your new log files. Now I am waiting for Charles's confirmation that the fix also worked for him.

At the moment I am studying the paparazzi code for ARDrone2, but note that there is also Bebop board code. The goal is to stream missing navdata like raw sonar readings … and maybe more. We will see.

30th April 2015 — Paparazzi?!

I had to smile yesterday when I received a notification from GitHub (regarding the Mavlink seems not to work issue): If you really need to do it before the next firmware release (in the coming weeks), you can maybe look at Paparazzi. I can't provide support on it, but I think they support the bebop. It is not very easy, so maybe a small wait is better

It was hard to believe that this is Parrot's answer, but yes, it was. So I am probably on the right track learning Paparazzi for ARDrone2 at the moment, and I can confirm that reading navdata onboard Heidi works.

Regarding Katarina, I merged DEVELOP back to master. The issue with navigation Home is not solved (see Issue #7) and it is probably related to a minimal height above the ground. Aldo did some experiments at 10 meters with a string, but what he would need is rather MavLink. If nothing else, then at least the battery status and include paths in Linux are fixed, and that was the main reason why I merged it.