Husky

experiments with commercial platform

Husky is commercial platform manufactured by Clearpath Robotics. It is planned to be an active helper on a small farm, where it will cut the grass near electric fence and assist with material handling. Blog update: 3/6 — dnipola.sk/Encoders

Our robot Husky was tested for the first time on competition

Robotem rovně. Here is

short video of robot in motion.

The source code was

very simple (basically set 30% power to both motors), but still it was success

— I had the robot for couple hours only.

It looks like that Czech public is not very interested in this project (see

results of this fandorama

project) so I will try to write my experiences and test results here in

English. If you have any question or comment please do not hesitate to ask

(contact form is at the end of this article).

Links:

- Website of manufacture: http://www.clearpathrobotics.com/husky/

Content

2014

- Github

- IMU

- End of IMU mystery

- USB devices

- ROS without ROS?

- Subscriber client

- Hello Robot

- ROS /imu/data

- Hello ROS Family

- node.py

- replay metalog

- Heartbeat

- Joyride

- Quaternions and loose wheel/axis

- Goodbye Husky!

2015

- Husky at CZU

- Simple Depth Viewer

- To the Wall

- depth_stream.start()

- followme.py ver0

- go.py into sun

- Husky & Heidi (first test)

- 2 days to RR2015

- H+H Network (failure)

- The Contest Robotem Rovně 2015

- USB Ghost

- OS Upgrade

- ssh tunnel

- Compass calibration

- imu.py

- dnipola.sk/Encoders

Blog

29th July 2014 — Github

Last couple of years I prefer to program in Python. So I was very happy that

Husky comes with Python wrapper. Unfortunately it looks like the focus of the

company is mainly on ROS (The Robot Operating

System) which we will probably use at the end too, but somehow I trust more

Python. Yeah, and I do not know ROS much …

The official wrapper (for registered users only) is fine, but I miss

communication logging and simple way of testing from recorded log files.

Because of that I started alternative wrapper which is available on

github. I still use the official wrapper

as reference and it helped me yesterday to solve three bugs in generation of

command packets:

- length byte in message header should be smaller

- there was a mistake with alignment (probably also causing the first mistake)

- I was using protocol version 0 instead of version 1, which is described in the documentation

The good news is that Husky moves now and all its sensor data are properly

logged and can be replayed any time after the trial. I could already observe

current rising when the robot was pushing against the wall and I saw couple

millimeters readings from encoders.

Well, I did not mention important „detail” that the whole platform can be

controlled via serial line with command packets. You can read sensor data as

response to query commands or you can set frequency how often they should be

reported automatically. It reminds me CAN bus on Eduro robot,

so I am quite happy with this setup .

31st July 2014 — IMU

Odometry with encoders looks fine so next step is to get compass/magnetometer

working. I expected that it will be easy and that I will just request data via

0x4600 or 0x4606 message (code

diff), but … no, something is wrong .

While I get response that MAX_SPEED is set to 1 m/s and MAX_ACCEL to 4 m/s2

confirmation of Magneto messages fails with response ['0x2', '0x0'], which

means [1] Type not supported - The platform configuration being used does not

support this command or request.?!

IMU (looks like UM6-CHR) is obviously

mounted inside Husky. I wrote to CPR (Clearpath Robotics) support and got

response how to test presence of IMU, but in ROS:

administrator@cpr-sylv-01:~$ rostopic list /clearpath/announce/robots /diagnostics /diagnostics_agg /diagnostics_toplevel_state /encoder /husky/cmd_freq /husky/cmd_vel /husky/data/differential_output /husky/data/differential_speed /husky/data/encoders /husky/data/power_status /husky/data/safety_status /husky/data/system_status /husky/robot /imu/data /imu/mag /imu/rpy /imu/temperature /inertial_ekf/odom /joint_states /joy /plan_cmd_vel /rosout /rosout_agg /tf /tf_static

So you see there /imu/data and yes, if you query them via rostopic echo

/imu/data you get readings like:

header:

seq: 6366

stamp:

secs: 1406748289

nsecs: 74127

frame_id: imu_link

orientation:

x: 0.5765191582

y: 0.4495936349

z: 0.4151515331

w: 0.5414056704

orientation_covariance: [0.03235951438546181, -0.022549884393811226,

-0.028850223869085312, -0.02254987321794033, 0.019496535882353783,

0.02177048847079277, -0.028850214555859566, 0.021770477294921875,

0.03033282235264778]

angular_velocity:

x: -0.00532632599807

y: -0.013848447595

z: -0.00213053039923

angular_velocity_covariance: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

linear_acceleration:

x: 0.013000455

y: 1.05505101

z: -0.095031495

linear_acceleration_covariance: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

So IMU is connected and sends some data. So what is the problem and how it

could be fixed? Now I am going to fall back to original Python wrapper if it

also fails. Here is modified horizon snippet:

def platform_magnetometer(code, payload, timestamp): print(payload.print_format()) horizon.add_handler(platform_magnetometer, request = 'platform_magnetometer') horizon.request_platform_magnetometer(subscription = 1)

Time to power on Husky in bedroom …

administrator@cpr-sylv-01:~/md/hacking$ Traceback (most recent call last): File "./mag.py", line 20, inhorizon.request_platform_magnetometer(subscription = 1) File "/home/administrator/md/hacking/clearpath/horizon/__init__.py", line 449, in request_platform_magnetometer return self._protocol.request('platform_magnetometer', locals()) File "/home/administrator/md/hacking/clearpath/horizon/protocol.py", line 297, in request self.send_message(message) File "/home/administrator/md/hacking/clearpath/horizon/protocol.py", line 346, in send_message raise utils.UnsupportedCodeError("Acknowledgment says Bad Code.") clearpath.utils.UnsupportedCodeError: Acknowledgment says Bad Code.

OK, so it is not necessary my fault.

1st August 2014 — End of IMU mystery

How is it possible that I cannot see IMU on serial port when it is working in

ROS?! I was going to sniff USB communication to get the answer … but I would

not found it there . Any idea?

Hint:

administrator@cpr-sylv-01:~$ ls /dev/ttyUSB* /dev/ttyUSB0 /dev/ttyUSB1

Stupid me — sometimes, even for programmer, it is better to open the box

instead of looking for the bug in software.

Thanks to Martin C. from CPR support:

I have gotten word back from one of our software engineers. He said

that since Husky doesn't have a built in IMU, only the motor and encoder

messages are supported. To interface with the UM6 IMU that you have in your

Husky, you can use this legacy Python module:

http://aptima-ros-pkg.googlecode.com/svn/trunk/imu_um6/src/um6/driver.py

So IMU is separate module connected to separate USB port!

I still did not get nice data from IMU:

administrator@cpr-sylv-01:~/md$ python driver.py Read Pkt: BAD CHECKSUM CMD 172 Success: True CMD 176 Success: True CMD 175 Success: True rx pkt Read Pkt: BAD CHECKSUM rx pkt Read Pkt: BAD CHECKSUM rx pkt Read Pkt: BAD CHECKSUM rx pkt

… but I still consider it as success, for the moment.

19th August 2014 — USB devices

There are four USB devices plugged into Husky robot:

- USB-serial converter for Husky platform (motors, encoders, …)

- IMU unit

- Logitech joystick receiver

- Prime Sense Sensor (a'la Kinect)

Originally I wanted unified data source, similar to CAN bus messages, test and

log each device separately and then integrate them together. Current status is

not very encouraging. I can talk to Husky platform, but that is the only good

news.

I can read data from joystick via pygame on Win7 (I

tried also Eduro

joy.py but my

impression is that I am getting random buffered results from

/dev/input/js0). There is a possibility to install pygame on Husky,

but is it right direction?

I do not have any success talking directly to IMU. It should be

/dev/clearpath/imu (symbolic link to /dev/ttyUSB0) running at 115200,

but again I am getting rather some random bytes and checksums are not valid.

Regarding Prime Sense Sensor — it works on Win7 with open source drivers and

I did not test it on Husky yet. That would be 2nd phase. The first task is to

record and replay (drive again) recorded path.

So what to do?! One possibility is to give up low level access (I am not very

comfortable with that) and use ROS as middleware. ROS seems to be working — I

see data from IMU (rostopic echo imu/data) and from Joystick (rostopic

echo joy). It can handle multiplex of all sources. It can also log them,

probably … it is just such a monster that I still hesitate to cross that

line. Again I would like small "hard coded" client, which does not need ROS

library and connect to something, request data, read and parse them into Python

class. Is then rospy "must have"?

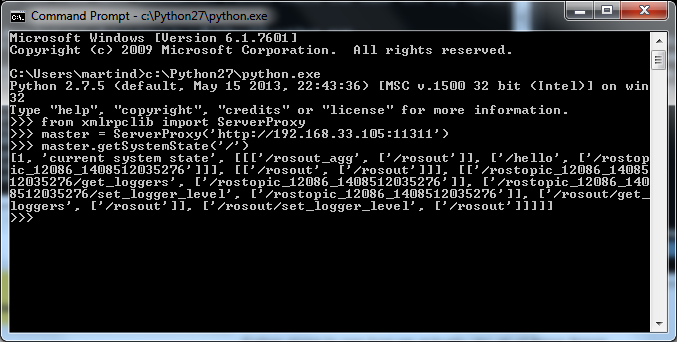

20th August 2014 — ROS without ROS?

There is a light at the end of the tunnel. Maybe . I am trying to get data

from existing ROS nodes, but I would like to avoid ROS installation (for

Windows). I start to learn it — I had some idea, but there are tons of texts

you do not really want to read … until I found nice article (short with

images) on robohub.org:

And then simple usage in

I did not fully understand why they provide readers images for vitrual machines

until I tried to install it on older machine with Ubuntu. There is no general

installation it depends even on which particular version of Ubuntu you have.

And the one I have is the last supported by hydro.

Recommended installation would take more than 2GB(!), which I do not have

available on that old machine. So I went for Bare Bones, approx 100MB of

packages, followed instructions on

ROS wiki and it went fine.

I tried Hello Robot example

and it worked (avoid copy and paste, or make sure you have corrected quotation

marks):

martind@martind-ThinkPad-R60:~$ rostopic pub /hello std_msgs/String “Hello Robot” ERROR: Too many arguments: * Given: [u'\u201cHello', u'Robot\u201d'] * Expected: ['data'] Args are: [data]

And then I came over ROS

Technical Overview. It was rather "lucky accident", but as soon as I read

Most ROS users do not need to know these details... I know this is for me

. This is the piece I was looking for. After simple test on Ubuntu I could

connect also from Win7. See the „proof”:

Python test talking to ROS |

So there is a chance …

21st August 2014 — Subscriber client

A long time ago I was thinking how to split processing power on

robot Eduro. I asked my robo-friends if it is a good idea to

use XMLRPC (Remote Procedure Call) to

put it all together. Their answer was hard to forget: Well, then you will

have TWO problems!

ROS is using XMLRPC. I am trying to create simple Subscriber client, i.e.

piece of code which can receive IMU, data for example. The begging was fine —

the client has to talk to the ROS Master via XMLRPC if there is any

Publisher providing such information (see

ROS Master API). In particular it is

necessary to call registerSubscriber(caller_id, topic, topic_type,

caller_api).

What are the parameters? caller_id is string ROS caller ID. Here I

would guess that it can be any string describing my client node (I picked

"hello_test_client"). Then there is the topic and topic_type.

Because I want to test it on

Hello Robot example it

should be "/hello" and ""std_msgs/String", I hope. And then

troublemaker caller_api. It is supposed to be string API URI of

subscriber to register. Will be used for new publisher notifications.. I was

surely coding this wrong, because I am getting:

[-1, 'ERROR: parameter [caller_api] is not an XMLRPC URI', 0]

Do I really have to create server in order to receive publisherUpdate

remote procedure call? I am trying to write simple client, and from most (all?)

examples I saw so far the clients subscribe and then wait until there is any

publisher available. Is not there an option to drop this part completely? I

would not mind to have there parameter blocking or timeout that if

there is no publisher within given period it will fail. Why my poor client has

to build also XMLRPC server just for service it does not care of?

OK, solved … see

github.

I am sorry for hard coded IP addresses — it is just test between PC running

Ubuntu and Win7 laptop. There is

hello.py which

tries to subscribe to ROS Master and

hello_server.py

which would accept remote calls (copy from Python documentation). Note, that

server does not call it immediately. But you are not allowed to fill there

empty string. On the other hand "http://blablabla:123" worked too:

m:\git\husky\ros>hello.py Publishers: ['/rosout_agg', ['/rosout']] ['/hello', ['/rostopic_2157_1408558967708']] [1, 'Subscribed to [/hello]', ['http://martind-ThinkPad-R60:48116/']]

Now it is time to talk to the node 'http://martind-ThinkPad-R60:48116/' and

convince it to open connection for me …

p.s. I did not receive any publisherUpdate even when I stopped /hello

and added some sleep() … so hello_server.py side is not working

properly yet.

22nd August 2014 — Hello Robot

Done. I finally convinced the other ROS node to send me (Win7 Python client)

Hello Robot!

m:\git\husky\ros>hello.py Publishers: ['/rosout_agg', ['/rosout']] ['/hello', ['/rostopic_2157_1408640226737']] [1, 'ready on martind-ThinkPad-R60:49065', ['TCPROS', 'martind-ThinkPad-R60', 49065]] - - - - - - - SIZE 174 message_definition=string data callerid=/rostopic_2157_1408640226737 latching=1 md5sum=992ce8a1687cec8c8bd883ec73ca41d1 topic=/hello type=std_msgs/String - - - - - - - SIZE 15 Hello Robot - - - - - - - Traceback (most recent call last): File "M:\git\husky\ros\hello.py", line 77, indata = soc.recv(4) socket.timeout: timed out

Here is

the

code diff — very ugly, nor parsing what I got from the node but rather

patching of patches until I finally got answer. It was not that trivial as it

may sound now, so let's review where I made mistakes …

The very first problem was in specification of the communication protocol —

the last parameter in publisher.requestTopic(). I was using

ROS Slave API as documentation and it was

supposed to be: List of desired protocols for communication in order of

preference.. Somewhere else I read that there are actually only two

(TCPROS and

UDPROS) so it took me while to realize why

it is list of lists?! Never mind, at the end the documentation was correct,

i.e. the last parameter is [ ["TCPROS"] ].

Great, after several unsuccessful attempt I finally got [1, 'ready on

martind-ThinkPad-R60:49065', ['TCPROS', 'martind-ThinkPad-R60', 49065]], so

my /hello node can be accessed via port 49065. I opened socket, connected

to it, but I did not receive any data. I supposed that it is because of buffering

so I played (probably wrong) with socket.TCP_NODELAY, timeouts etc. I tried

to get 4 bytes of message length, guided by this

nice descriptive example.

Nothing.

From Technical Overview 6.1

Example it looked like client has to only connect. I should rather read

TCPROS page, where there was an important

information: A TCPROS subscriber is required to send the following

fields.... So client has to talk to node first.

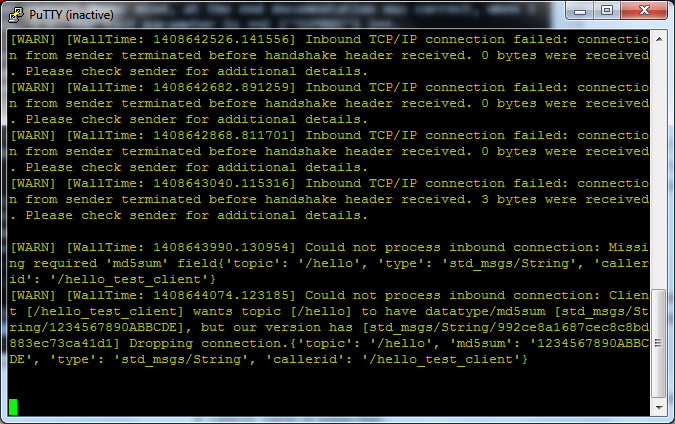

After a while I realized that there are tons of warnings in "/hello" node

terminal:

Warnings from /hello node |

So I added several key=value pairs with 4 bytes length prefix, random

md5sum and then from warnings I got correct md5sum … yeah, very dirty way. At

the end I received what I sent but still not the data . So the answer from

node starts with message description (again) and the second "packet" is the

real data Hello Robot string.

What I currently do not understand is why client has to specify message

structure, when it first talks to the server, why it has to repeat it when it

talks to publisher and finally why publisher repeats that when it talks back to

subscriber.

I could not resist to do another test: instead of providing std_msgs/String

I tried to send number. If you need to know how to specify number

this std_msgs wiki should help. Note, that it

is case sensitive, so in my case I tried rostopic pub /hello std_msgs/Int32

123. Strange thing is that when I asked server, which node provides /hello

std_msgs/String I got both. I stopped the one with string and this is what I

got from node:

error=topic types do not match: [std_msgs/String] vs. [std_msgs/Int32]

So I really do not understand why I have to say to ROS master what type of

message I need …

TIMEOUT i.e. time to run to work …

25th August 2014 — ROS /imu/data

As soon as I got Hello Robot from /hello node I tried to receive

/imu/data from Husky. I

hacked

hello.py and recorded received data. Now I understand that there is a big

difference between std_msgs/String and message_definition=string data.

The first is only a name while the other is message description. It was quite

clear for /imu/data node, which provides message (topic type)

std_msgs/Imu, and has many sub-elements.

So when you talk to ROS master you give it topic and only topic type (I am

still not sure why), but once you start to talk to publisher you have to

specify correct MD5 of the message structure. The good news if that if you do

not know it the publisher responds with something like your md5 is not

matching and that publisher has this md5. So you can start to talk to

publisher again, this time with its MD5 … and then you get complete message

description, including comment!

For std_msgs/Imu I received:

SIZE 2329 callerid=/um6_driver latching=0 md5sum=6a62c6daae103f4ff57a132d6f95cec2 message_definition=# This is a message to hold data from an IMU (Inertial Measur ement Unit) # # Accelerations should be in m/s^2 (not in g's), and rotational velocity should be in rad/sec # # If the covariance of the measurement is known, it should be filled in (if all you know is the variance of each measurement, e.g. from the datasheet, just put those along the diagonal) # A covariance matrix of all zeros will be interpreted as "covariance unknown", and to use the data a covariance will have to be assumed or gotten from some oth er source # # If you have no estimate for one of the data elements (e.g. your IMU doesn't pr oduce an orientation estimate), please set element 0 of the associated covarianc e matrix to -1 # If you are interpreting this message, please check for a value of -1 in the fi rst element of each covariance matrix, and disregard the associated estimate. Header header geometry_msgs/Quaternion orientation float64[9] orientation_covariance # Row major about x, y, z axes geometry_msgs/Vector3 angular_velocity float64[9] angular_velocity_covariance # Row major about x, y, z axes geometry_msgs/Vector3 linear_acceleration float64[9] linear_acceleration_covariance # Row major x, y z ================ MSG: std_msgs/Header # Standard metadata for higher-level stamped data type----SIZE 587869811 s is generally used to communicate timestamped data # in a particular coordinate frame. # # sequence ID: consecutively increasing ID uint32 seq #Two-integer timestamp that is expressed as: # * stamp.secs: seconds (stamp_secs) since epoch # * stamp.nsecs: nanoseconds since stamp_secs # time-handling sugar is provided by the client library time stamp #Frame this data is associated with # 0: no frame # 1: global frame string frame_id ================ MSG: geometry_msgs/Quaternion # This represents an orientation in free space in quaternion form. float64 x float64 y float64 z float64 w ================ MSG: geometry_msgs/Vector3 # This represents a vector in free space. float64 x float64 y float64 z

What is surely wrong is SIZE 587869811 … but it is probably my mistake —

I did not read all SIZE 2329 bytes … I guess. It should be clear from

replay of log file.

Confirmed = in the log file it looks OK.

Here

is parsing IMU data (hard coded, for now). And here some outputs:

22227 1408728589 709250449 8 imu_link (-0.007217399499999999, -0.7315086163, -0.6816246364999999, -0.015408308699999998) 22228 1408728589 760128957 8 imu_link (-0.0073516766999999995, -0.7315086163, -0.6816246364999999, -0.015475447299999999) 22229 1408728589 811034145 8 imu_link (-0.007586661799999999, -0.7314414776999999, -0.6816582057999999, -0.015676863099999997)

It is nice that every message contains sequential number and timestamp. What I

am not sure is frame_id in particular with comment 0: no frame, 1: global

frame when I received string imu_link …

27th August 2014 — Hello ROS Family

OK, now I have hacked whole ROS family: subscriber, publisher and XMLRPC server

(for details see

github).

It is still quite dirty, but I can receive hello string and send it. The next

step would be to finish parsing of other Husky messages, and try to publish

command to move. To be honest I am not very happy about it — I am thinking

about crazy hybrid, where sensors would be taken from ROS while I would talk to

the platform directly, logging all currently unnecessary data, like

currents and temperatures on both motors.

p.s. I did not found overall ROS Husky messages documentation … so

probably the simplest thing is to look for description files directly on the

platform.

p.s.2 Husky moved! with pseudo-ROS-Python code . I searched for

*.msg in /org/ros/hydro directory. There are plenty of them and the one

I needed was geometry_msgs/Twist which contains two vectors: one for linear

and one for angular velocity. I am not sure if it is good to commit another

IP-comment-hacking patch … but without that it would not be clear what I did

… so here is another

tunnel

digging to Husky ROS code.

29th August 2014 — node.py

I am currently trying to put all bits I learned from /hello experiments

together and create ROS node which will subscribe to several topics and

publish at least one (send drive command) — see

node.py.

At

the moment it is logging couple Husky ROS topics, each communication into

separate file, and it creates also metalog recording order of all received

messages.

I decided to use stupid single-threaded solution (based on

KISS principle). Note, that

there is in reality second thread handling XMLRPC server, but I would ignore it

for the moment. The timeouts are set to 0.0 so it is actively pulling all TCP

connections — not nice, but the machine has to do something anyway. I may

change it later to couple milliseconds. If you read Python documentation you

would hope for socket.timeout exception. Well, I was also naive … so you

get socket.error instead and it is different on Windows and Linux. It is this code:

try:

data = self.readFn( 4096 )

except socket.timeout as e:

print e # it should contain partial data

except socket.error as (errno, errStr):

assert errno in [10035,11], (errno, errStr)

# Windows 'A non-blocking socket operation could not be completed immediately'

# Linux (11, 'Resource temporarily unavailable')

data = ""

As I see it now it is surely wrong as socket.timeout does not handle

received data. For timeout exception may already read couple bytes and there is

no other way how to pass them to data. But I did not get timeout yet (OK,

to be sure I added assert

there).

Now I would guess that you either get some bytes i.e. no timeout or there is

nothing ready and thus you get socket.error.

Other than that it looks fine, except there is a partially expected issue with

buffering: when I run node.py remotely from Win7 the metalog looks

something like this:

… /husky/data/encoders /husky/data/encoders /husky/data/safety_status /husky/data/safety_status /imu/data /imu/data /imu/data /imu/data /husky/data/power_status /husky/data/encoders /husky/data/encoders /husky/data/safety_status /husky/data/safety_status /imu/data /imu/data …

but on Husky it is fine:

… /imu/data /husky/data/encoders /imu/data /husky/data/safety_status /husky/data/power_status /imu/data /husky/data/encoders /imu/data /husky/data/safety_status /imu/data /husky/data/encoders /imu/data …

2nd September 2014 — replay metalog

Finally! Last week I made a stupid mistake and could not find it — I forgot

to call self.master.registerPublisher in order to get contacts to clients.

Now this is fixed and node.py now also provides simple topic publishing

(see

gihub

diff).

There is also a

newer

version, where node.py accepts name of metalog (instead of master and

node IPs) and then you can replay whole run. This morning it was tested only on

publish /hello and subscribe /hello, so I am looking forward to do it

on Husky (with publishing velocity command).

3rd September 2014 — Heartbeat

Today I moved with Husky couple centimeters using NodeROS.update() (see

code diff).

Sure enough there were some bugs, and one was related to failure of 100%

reproducibility, i.e. major bug (later found in update() function).

The older version of update() checks all subscribers and repeats that in

cycle until at least one sends data. The problem is that sometimes only one

subscriber sends the data while next time it can be three subscribers, for

example. And it is not clear from metalog which update got which topics

updated. It is now fixed with extra '—' separator … not very scientific,

but clear what is going on. The metalog now looks like this:

_imu_data140903_064426.log _husky_data_encoders140903_064426.log _husky_data_power_status140903_064426.log _husky_data_safety_status140903_064426.log _joy140903_064426.log _husky_data_system_status140903_064427.log _husky_cmd_vel140903_064427.log /husky/cmd_vel /imu/data /husky/data/encoders /husky/data/power_status — /husky/cmd_vel /imu/data /husky/data/safety_status /husky/data/encoders — /husky/cmd_vel /imu/data /husky/data/safety_status /husky/data/encoders — …

And what is the heartbeat from today's title? Well, not all topics are

equal and not all of them are frequent and regular. I need one, which is

regular and which will dictate robot frequency of control. In the case of

Husky it is /husky/data/encoders — update() is not finished until

there is at least one update of encoders is received.

4th September 2014 — Joyride

I started new class

HuskyROS to

experiment how difficult it is to use my „node”. One of the first experiments

was to drive forward when you hold GREEN button and terminate program when you

press RED (see

code).

It moved but it was shaking a lot. Why? Well, on boot up there is another

colliding process husky_joystick … so the first step was to kill it.

Now I was seriously moving — instead of 10cm test in bedroom it moved 2m

in the entrance hall . But it was not turning … so I changed the ROS

message

# This expresses velocity in free space broken into its linear and angular parts. Vector3 linear Vector3 angular

to this:

return struct.pack("dddddd", speed,0,0, angularSpeed,angularSpeed,angularSpeed)

… then it turned for sure. The correct solution is on

github.

7th September 2014 — Quaternions and loose wheel/axis

What I like on robotics is that it is quite varied. One moment you try to learn

quaternions and the very next moment you have to deal with broken wheel. It is

not computer science only and it is also not mechanics only — it is a bit

of everything. So let's start from the beginning …

This weekend I took Husky for outdoor testing, finally. I started with two

simple functions goStraight() and turn(). And I ended there too. For

the moment I ignore signs so it is always going forward and always turning

left. The data from encoders, when going straight, were reasonable — I took

average of left and right traveled distance, and moved slowly (0.1 m/s) so I

ignored speed ramps … and maybe the system takes care of that??

The troubles come as soon as I was turning. I did not try that much at home,

you know carpet, and even in the garden it made "nice" holes in the lawn. At

first I tried to turn 90 degrees based on odometry data. I was quite naive. It

is basically the difference of left and right traveled distance, but to get

the angle you also need robot width … or better distance between left and

right steering wheel. Husky has 4 wheels, 2+2 connected with a chain, so that

have to skid. And they do. So once I set WIDTH to measured 55cm it turned

only approximately 40 degrees (instead of 90) [it was probably skidding

diagonally so the distance could be 1m instead].

Then there was much more serious problem: the left front wheel looked a bit

(approximately 1cm) pulled off and you could wiggle it a bit. I was not so big

deal when going straight, but it was stopper for turning. There was no other

way than to open the robot.

I must say, that the Husky robot is very nice done. Extra plus was that all I

needed was a set of hexagonal wrenches. And the problem? It looks like there

was missing lock on that particular wheel axis and thus it was no longer in the

bearing. It is possible that I did something wrong when loading/unloading

the robot to/from a car, but … it should be a bit industrial and

survive. There was no lock laying around so it is possible that it is missing

for a long time …

Quaternions — it is quite a while when I worked with them, and I forgot

everything. Why do I need them? Well, the robot orientation in /imu/data is

defined by Quaternion. If you do not know what it is then have a look at

wikipedia: Quaterinion and

conversion

to Euler angles. After some messing around (you can tell from commented

lines in IMU code) I found the magic formula:

self.imu = math.atan2(2*(q0*q1+q2*q3), 1-2*(q1*q1+q2*q2)) # along X axis

After that Husky turned close to 90 degrees once, but like 130 degrees next

time. It is probably integration of compass, gyros and accelerators … but I

am not sure.

My last experiment was with /imu/mag, which is really the direct source I

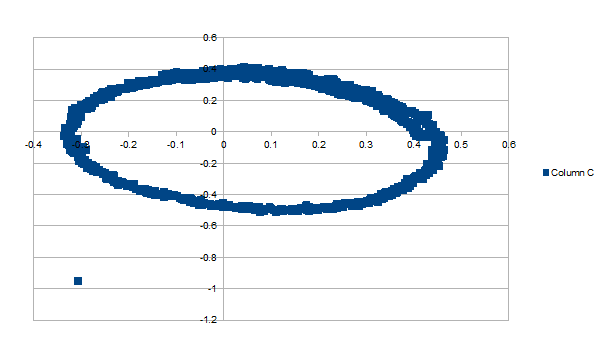

want.

Compass readings for more than one complete rotation |

There is one outlier reading but other than that it looks almost circular

and center is close to (0,0). Some calibration/transformation would improve it,

but it could be good for the first record & replay.

After that Husky stopped to talk to me. The weather was very nice today, so

maybe the processor case was getting too hot?? Maybe there is another ROS topic

describing it??

One more note, that setting velocity command (0,0) actually switches to neutral

and robot will start to move if it is on a small slope. So is there some

command for active braking?

11th September 2014 — Goodbye Husky!

Husky went home yesterday night, back to France. There are still some

unresolved issues, like the snap ring for the front left wheel, but there was

some progress anyway. I was in touch with Martin from Clearpath Robotics and I

learned that there is a jumper on motor controller which defines default

behavior: "coast" mode or "brake" mode. Our Husky had it in "coast" mode, so

when I send command (0,0) robot would move downhill by itself.

It is necessary to open both front and back cover in order to change the

jumpers. I did that and find out that one connector for temperature

measurements (my guess, 3-PIN connector with resistor loop) was loose. I did

not know how to put it back, so I started to open front cover again (it is 8

screws + 2 big ones for the bumper). In the middle of the operation I realized

that I took a picture of the motor controller before I changed the jumper, so I

could copy that:

The bad news is that there is no way how to control braking from the software.

You have to choose. If you are in the terrain braking is surely the choice —

I rather carried Husky uphill because without the brake it could be fatal

disaster. On the other hand if you want to just push the robot around, like

when you load it to the car, you want "coast" mode. Note, that pushing will

generate enough power that robot will start braking (even if you removed the

battery and it is in E-stop mode).

What else? Probably the PrimeSense sensor. Sylvio bought it a year ago, but

then the company was bought by Apple (see

the

discussion of upset users). The websites www.primesense.com and

openni.org are no longer working. There is a

source code on github, but it probably

won't be supported any more (click on the title link, for example).

ROS was supposed to support Kinect and PrimeSense, and after reading several

websites and almost corrupting robot installation I gave up. No ROS. An

alternative seems to be new OpenNI 2.0 driver available in beta stage:

http://structure.io/openni. When I downloaded it for windows it work

immediately. Also on Linux I could use NiViewer, but Husky does not have

monitor so you get freeglut (./NiViewer): failed to open display … note,

that this was already good news! The others were like

/opt/ros/hydro/lib/openni_camera/openni_node: symbol lookup error:

/opt/ros/hydro/lib/libnodeletlib.so: undefined symbol:

_ZN3ros7console5printEPNS0_10FilterBaseEPvNS0_6levels5LevelEPKciS7_S7_z or

Open failed: USB interface is not supported!. Note important choice is 32

vs. 64bit installation — the other will not work (Husky required 64bit,

Sylvio notebook 32bit).

OK, so I was able to theoretically run some program, but how to get the images

and depth data? It turned out that there is small Python wrapper already

available: https://pypi.python.org/pypi/primesense and although the short

example had a small mistake (get_sensor_info() requires some parameter,

probably sensor index), I got first Husky depth data . If you need image use

dev.create_color_stream(). Now I have two raw arrays as last memory of

Husky …

Is this „The End”? No, this is just the beginning! A friend of mine added ssh

tunnel in start-up script, so whenever is Husky connected to the internet I

could work on it. [Yes, I did not mention this detail — you can plug in

Ethernet cable in the WiFi router and then you get both: old Access Point and

robot connected to the Internet]. So that was the motivation to get the

PrimeSense working. Now I could get at least some reference pictures what

is/was going on 1000km away … could be fun … or nightmare .

3rd February 2015 — Husky at CZU

Today I finally spent the afternoon playing with Sylvio's robot Husky. It is

parked now at the university (CZU — Czech University of

Life Sciences Prague) for the winter (?). The hardest part was to recover mine

almost half a year old memories, even with the help of this blog .

Question: where is the starting point, what was the last used script?

Answer: the best version worked with ROS, i.e.

ros/huskyros.py.

It has two parameters (IP of the ROS master and ROS client).

Question: how to get images/depth from PrimeSense?

Answer: this part was not in github but was still left on Husky. There is

python wrapper and I was able to get the data with initial version of

openni2/getpic.py.

Note, that there is a bug in current getpic.py script. I wanted to use

gziped data, but if you write array with 16bit values the write() works for

normal file but not for gzip. TODO. Now I have collected data but reader reject

them that the file size does not match the real size:

File "C:\Python27\lib\gzip.py", line 349, in _read_eof

raise IOError, "Incorrect length of data produced"

IOError: Incorrect length of data produced

And how was Husky driving? Well, not much and very shaky. Why? I realized that

when I was going home … there was another controller running since bootup and

I forgot to kill it. Next time, hopefully with processing of depth data.

10th February 2015 — Simple Depth Viewer

Yesterday I completed simple depth

openni2/viewer.py.

In particular I fixed reading 16bit distance array. This simple script displays

the row data from PrimeSense. The decision if the file contains depth data or

color data is based on its size only. Expected is 320x240 array and depth has 2

bytes per pixel while color has 3 bytes. There was necessary some mirroring and

swapping colors. Also note, that depth in millimeters is divided by 20 and

uint8 value is used in gray scale image (i.e. resolution 2cm and max distance

5m). Finally value 0 probably means missing information, so it is ignored for

minimal distance.

Depth auto-portrait |

There is also a fix of writing gzipped data — I could repeat the bug (?) with

numpy 16bit array (see

diff),

and then fix it with array of bytes

(diff).

I hope that this will help today .

The next step is to integrate this distance sensor with driving. Prepared is

sketch of

followme.py

for collection of some real data. This robot behaviour was very popular on

robot Eduro laser scanner — see

video or source code

eduro/followme.py.

11th February 2015 — To the Wall

Thare was a big contrast between the afternoon and the evening. I exchanged few

mails with Ruud about 100 nodes ROS system and later that day I was trying to

get Husky to move with PrimeSense sensor (few ROS nodes). While the OpenNI2

driver with a viewer is working fine and fast on Win7, I was not able to get

ROS node working half year ago. I could try upgrade, but I would not be sure

that it would work after that at all. At the end I was glad for at least

slowly working sample in Python. Sigh.

So where are we now? Husky moves and collects pictures and depth data from the

PrimeSense sensor (see

diff).

If there is an obstacle within 1 meter it stops. The motion is very slow

(0.1m/s) because also the data collection is extremely slow. I hacked logging

of two threads communication (in this sense if Ruud quite right, that Python is

not robotic middleware and I do not have clean ready-to-go classes for

communication, logging, replay etc.) and it takes in average 30 cycles = 3

seconds!? Well, but at least we started.

Surprises? If I ignore my stupidity with typo of Husky IP address at the

beginning — it has to be on fixed network 192.168.1.x and I wrote 192.162.1.x

so no wonder it did not want to connect. There was another IP issue: If I run

HuskyROS with parameters localhost localhost it worked but not for

followme.py!? Desperately I tried 127.0.0.1 and that worked in both

cases — no idea why :-(.

Finally when I was driving in the school corridor the distance estimation

sometimes crashed:

Exception in thread Thread-2:

Traceback (most recent call last):

File "/usr/lib/python2.7/threading.py", line 551, in __bootstrap_inner

self.run()

File "./followme.py", line 32, in run

self.minDist = centerArea[mask].min()/1000.0

ValueError: zero-size array to minimum.reduce without identity

This was easy to fix. I used mask for non-zero numpy elements (I was glad that

numpy is installed on Husky machine) and if there was too much free space it

was looking for minimum of empty array. The same fix was also necessary in the

viewer

(diff).

Jakub via PrimeSense |

Distance image |

TODO list:

- sync depth and color data collection

- try Process instead of Thread

- detect human and floor

- navigate to free space

- follow human

- outdoor test

12th February 2015 — depth_stream.start()

I feel little bit stupid now. I was convinced that 32-bit OpenNI2 driver did

not work on my laptop, but … did I really tried that? Was not I dealing with

Linux on Husky?? Python 2.7 32bit did not work with 64bit DLL, which is not

surprising. When I run 64bit Python 2.7 the

getpic.py

worked without any modification.

Now I installed also 32bit version from

structure.io/openni and it still did not work

… for a while. And now it works. Both 32bit as well as 64bit version. I

believe that Windows did not release the OpenNI2.dll from the memory

(???) so I was still using 64bit version even the file was already replaced.

And it works as slow as on Linux .

So my apologize to Linux driver — it is the depth_stream.start() which is

very slow. Once you start it and only grab data with

depth_stream.read_frame() then it is much faster (approx 10Hz). Here is the

diff.

If you would like to read a bit about the sensor technology have a look

at this older article.

24th February 2015 — followme.py ver0

There is version 0 of

followme.py

(hash):

The code is relatively simple. It scans depth image approximately at 3Hz,

selects the nearest point in given ROI and turns that direction with speed

proportional to the distance.

There is TODO for version 1 — narrow search area centered around previously

detected nearest object (similar to Eduro version). You can see that the red

circle (nearest point) sometimes jumps to wall or some other nearby objects.

p.s. this video was created by

viewer.py.

23rd April 2015 — go.py into sun

I has been a while again. There were school lectures and I was playing with

Katarina so hardly any progress with Husky :-(. The weather

was great two days ago, so I finally did some outdoor tests with PrimeSense

sensor. I wrote very simple

go.py script: if

there is an obstacle on the left, turn right. If the obstacle is on the right,

turn left. If it is very close STOP and otherwise go slowly forward. I see that

Here is the code core:

if prev is None or prev < safeDist:

robot.setSpeedPxPa( 0, 0 )

elif prev < safeDist*2:

# turn in place

if obstacleDir > 0: # i.e. on the left

robot.setSpeedPxPa( 0.0, math.radians(-10) )

else:

robot.setSpeedPxPa( 0.0, math.radians(10) )

else:

robot.setSpeedPxPa( maxSpeed, 0 )

It was so simple that it worked within 10 minutes (yes, it was mostly copy and

paste from folowme.py) with logging so that I could travel back and forth

the corridor and then I could go outside. I expected that it will not work at

all in the sun, but it was not that bad. But the sun light is surely limiting

which you can see from this short video log file:

The red circle is the nearest obstacle and when approaching the corner you get

nice oscillations. But other than that it moved fine.

The logs from outside in the bright sun are mostly black = no depth

information at all. On the other hand it was enough if somebody walked close,

cast a shadow and in that the distance was measured.

13th May 2015 — Husky & Heidi (first test)

I am preparing demo for the competition Robot go

Straight!, which will take place in Písek this weekend (16th May 2015). This

time I would like to show combination with ARDrone2 Heidi.

The idea is that Heidi would fly approximately 1 meter above the Husky robot

and stream video as navigation data for the ground platform. The plan is to

reuse code

heidi/rr_drone.py

from last year.

Yesterday I was finally happy with navigation above oriented roundel — see

guide/lesson7.py.

I plan to change the configuration from down pointing camera to front

(ARDrone2 API supports detection of oriented roundel even if you do not use the

down pointing camera for video streaming). Heidi's part is ready for

„integration testing”.

The troubles were with Husky. I repeated go.py test (using emergency STOP

button to pause the robot) and 3 times it rebooted. The battery was probably on

the edge, but … I added battery status

(diff)

so now in robot.power is fraction of charge based on ROS

/husky/data/power_status. This value started at 0.37, with

occasional drop to 0.25, but the first computer restart happened at 0.30. In

the second run it rebooted at 0.22 where I expected that it is still safe to

use it. At the moment I am not sure if it was battery issue — if yes, I may

have troubles because the battery does not charge quickly if no then I do not

know :-(.

My next plan is to properly handle Emergency STOP. It is OK for stopping, but

if you unblock it and there was some former move command Husky „jumps” …

yes, that could be another reason for reboot even in the second case I made

sure that there was an obstacle in front of the robot so it would first send

(0,0) motion command.

The first and only video is not very exiting (Husky did not move), so at least

one picture from Heidi's down pointing camera after takeoff:

14th May 2015 — 2 days to RR2015

Well, it will be hard.

Robotem

Rovne 2015 is going to start in 48hours and I do not have properly working

version 0. Moreover Husky was making some bad jokes yesterday: when I started

the go.py with eStop pressed after unblocking it reboot the internal PC?!

Repeatably (3x). It worked when eStop was not pressed, but then it „jumped”

after release. I added small pause in the code to send 0 velocity command even

after eStop was released

(diff),

but it is hard to tell if that helped (I have Husky at home and there is really

no space to move around). I was so desperate that I wrote to CPR support and I

would like to thank Martin C. for his quick response . If nothing else it

was good that there is somebody I can talk to about the issue …

...the commands received during estop are not buffered, and Husky will always

act on the latest commands received. The timeout still applies. This means that

if the commands are stopped before the e-stop is released, the Husky shouldn't

move. If the commands are continued, the Husky will move at the full speed

commanded once the e-stop is released."

… which is something I am doing now, so we will see during further testing.

It could be related to some other issues — I am putting landing zone as lid

of the Husky, maybe the PrimeSense is taking too much power, or the battery??

We will see.

This morning I tried to install OpenCV via

Install-OpenCV.git but it

failed due to:

The following packages will be upgraded: libglib2.0-0 libjpeg-turbo8 libpixman-1-0 3 upgraded, 137 newly installed, 0 to remove and 243 not upgraded.

… at least I suppose that this was the problem and not

WARNING: The following packages cannot be authenticated! libglib2.0-0 libatk1.0-data libatk1.0-0 libavutil51 libgsm1 liborc-0.4-0 …

So I tried to upgrade, which is surely not good idea in such a short remaining

time, and hit another „wall” (at least for me, unexperienced Linux/Ubuntu

user):

$ sudo apt-get upgrade Reading package lists… Done Building dependency tree Reading state information… Done You might want to run 'apt-get -f install' to correct these. The following packages have unmet dependencies: ros-hydro-roscpp : Depends: ros-hydro-cpp-common (>= 0.3.17) but 0.3.16-0precise-20130919-0111-+0000 is installed Depends: ros-hydro-roscpp-traits (>= 0.3.17) but 0.3.16-0precise-20130919-0231-+0000 is installed E: Unmet dependencies. Try using -f.

Using force does not sound good to me, so there will be no OpenCV for

RR2015 and I will probably run drone part from laptop.

TO BE CONTINUED

15th May 2015 — H+H Network (failure)

Last night and this morning I tried to setup Heidi+Husky network, and I did not

succeeded yet. According to document From The Desk of The Robotsmiths: The

Husky may be connected to the Internet in two ways. The first is by connecting

the WAN port on the Microhard radio to a live Internet connection. The second

is by logging in to the Microhard via wifi, and changing its SSID and wifi

password to match that of an existing wifi network in your location.

Year ago I used the first case and it worked, although Sylvio had problems

probably due to colliding IP addresses. The ARDrone2 talks over Wi-Fi, so this

time I need to use the second option. I found

manual for similar router

(in Husky is VIP2-2400) and there I found what I needed, i.e. that I have

to change Access Point (An Access Point may provide a wireless data

connection to many clients, such as stations, repeaters, or other supported

wireless devices such as laptops etc.) to Station/Client (A Station may

sustain one wireless connection, i.e. to an Access Point.). I could fill SSID

of our home Wi-Fi with password and the connection was established. I could see

nice colors with signal bar, but … I could not ping outside (Destination

Net Unreachable).

With the drone it was similar or rather worse. Router IP 192.168.1.1 is

colliding with the drone IP, so first I changed that to 192.168.1.13. I also

tried to change Gateway to Router, but in my case it is like monkey

trying to do some programming :-(. So far no luck.

18th May 2015 — The Contest Robotem Rovně 2015

There is an hour for robot homologation (8am-9am) followed by four competition

runs. The task is simple — just go straight 314 meters without leaving the

park road (see interview

about the competition history in English). This year there were 25 competing

robots. Moreover new „specials” sub-category was created where flying,

walking, omni-directional and other non-standard robots were separately

evaluated.

What a pain! We arrived with both robots (Husky + Heidi)

shortly after the big tent for participants was build. I started go.py,

which I was using already for several weeks, and:

administrator@cpr-sylv-01:~/md/ros$ ./go.py 127.0.0.1 127.0.0.1 Go straight … METALOG: logs/meta_150516_020733.log STARTING /node_test_ros at http://127.0.0.1:8000 Listening on port 8000 … Traceback (most recent call last): File "./go.py", line 157, ingo( metalog, assertWrite, ipPair ) File "./go.py", line 80, in go robot = HuskyROS( filename=metalog.getLog("node"), replay=metalog.replay, ipPair=ipPair ) File "/home/administrator/md/ros/huskyros.py", line 33, in __init__ filename=filename, replay=replay, assertWrite=assertWrite ) File "/home/administrator/md/ros/node.py", line 90, in __init__ logStream = LoggedStream( self.requestTopic( topic ).recv, prefix=topic.replace('/','_') ) File "/home/administrator/md/ros/node.py", line 111, in requestTopic assert len(publishers) == 1, publishers # i.e. fails if publisher is not ready now AssertionError: []

What?! This never happened to me so I started again, and again … still the

same result. I was bit in panic mode, rebooted the Husky … the same again.

When I calmed down I modified the assert

(diff)

so I know what publisher is failing.

AssertionError: ('/imu/data', [])

OK, I can live without IMU, at least for the homologation. Then I remembered

that I can read ROS data directly from console, so

administrator@cpr-sylv-01:~/git/husky/ros$ rostopic echo /imu/data ERROR: Cannot load message class for [std_msgs/Imu]. Are your messages built?

??? The command is maybe wrong, but it does not matter now. It did not help.

After reboot I got:

administrator@cpr-sylv-01:~$ rostopic echo /imu/data WARNING: topic [/imu/data] does not appear to be published yet [WARN] [WallTime: 1431759925.748272] Inbound TCP/IP connection failed: connection from sender terminated before handshake header received. 0 bytes were received. Please check sender for additional details. [WARN] [WallTime: 1431759926.051634] Inbound TCP/IP connection failed: connection from sender terminated before handshake header received. 0 bytes were received. Please check sender for additional details. [WARN] [WallTime: 1431759926.361188] Inbound TCP/IP connection failed: connection from sender terminated before handshake header received. 0 bytes were received. Please check sender for additional details. …

Nice. I removed IMU from my code. Guess what? Another assert with publisher

failure. This time with motor controller and there is no way to drive Husky

without motor controller. Dead End.

I tried simple Python code (without ROS) from last year:

administrator@cpr-sylv-01:~/md$ ./rr.py

Exception in thread clearpath.horizon.transports.Serial.Receiver:

Traceback (most recent call last):

File "/usr/lib/python2.7/threading.py", line 551, in __bootstrap_inner

self.run()

File "/opt/ros/hydro/lib/python2.7/dist-packages/clearpath/horizon/transports.py",

line 465, in run

message = self._get_message()

File "/opt/ros/hydro/lib/python2.7/dist-packages/clearpath/horizon/transports.py",

line 536, in _get_message

chars = self._serial.read(1000)

File "/usr/lib/python2.7/dist-packages/serial/serialposix.py", line 449, in read

buf = os.read(self.fd, size-len(read))

OSError: [Errno 11] Resource temporarily unavailable

Traceback (most recent call last):

File "./rr.py", line 28, in

horizon.set_differential_output(30, 30)

File "/opt/ros/hydro/lib/python2.7/dist-packages/clearpath/horizon/__init__.py",

line 244, in set_differential_output

self._protocol.command('differential_output', locals())

File "/opt/ros/hydro/lib/python2.7/dist-packages/clearpath/horizon/protocol.py",

line 287, in command

self.send_message(messages.Message.command(name, args, self.timestamp(),

no_ack=(not self.acks)))

File "/opt/ros/hydro/lib/python2.7/dist-packages/clearpath/horizon/protocol.py",

line 372, in send_message

raise utils.TimeoutError("Message Timeout Occurred!")

clearpath.utils.TimeoutError: Message Timeout Occurred!

I expected that some other running process is using /dev/ttyUSB1 so my

program cannot open it, but … surprise there was no /dev/ttyUSB1!!! I had

like 5 minutes remaining for the homologation so I took Heidi, tried

rr_drone.py from

last year, left the road and hit the lamp in 2 meters from start.

Never-the-less I was listed at the end, after all homologated robots, so I

could still compete …

So where is /dev/ttyUSB1?! I unplugged and plugged IMU and motor controller

USB cables and that was the major breakthrough. My luck was back

administrator@cpr-sylv-01:~$ ls /dev/ttyU* ls: cannot access /dev/ttyU*: No such file or directory administrator@cpr-sylv-01:~$ ls /dev/ttyU* /dev/ttyUSB0 /dev/ttyUSB1

I do not know how to restart all failing ROS nodes and after reboot the USB

devices disappeared again so I focused on direct COM and my former program

(diff)

and again I was getting strange reports:

0x8010 Traceback (most recent call last): File "./husky.py", line 180, intestRR2015( com ) File "./husky.py", line 154, in testRR2015 robot.update() File "./husky.py", line 83, in update timestamp, msgType, data = self.readPacket() File "./husky.py", line 57, in readPacket length = ord(self.com.read(1)) File "/home/administrator/md/logit.py", line 32, in read s = self._com.read( numChars ) File "/usr/lib/python2.7/dist-packages/serial/serialposix.py", line 449, in read buf = os.read(self.fd, size-len(read)) OSError: [Errno 11] Resource temporarily unavailable

or

0x8200 0x8202 0x8800 Traceback (most recent call last): File "./husky.py", line 180, intestRR2015( com ) File "./husky.py", line 154, in testRR2015 robot.update() File "./husky.py", line 83, in update timestamp, msgType, data = self.readPacket() File "./husky.py", line 59, in readPacket assert length+notLength == 0xFF, (length, notLength) AssertionError: (242, 83)

The Clearpath Python wrapper probably hides this, because ./rr.py worked.

Husky was announced for the first run. I was so thrown off by the Husky behavior

that I did not give Heidi enough attention. I started the drone from my

notebook, then unblocked eStop to run old code on Husky and then the show began. It

was exciting until Heidi landed between moving Husky wheels! I know immediately

what it was — the time was 60s after start … yes, I had still testing

version for Heidi

(diff

also with changes after 2nd run) with timeout set to 60s. Pity, but I hit eStop

fast enough so Heidi was still as one part.

In the second run Heidi already had much longer time for the

hoverAboveRoundel() task. It only went bit too high and after approximately

10m (on the first crossing) lost Husky. Yes, there was no coordination of both

robots (I may describe dropping off functionality list at the end of this).

After that I increased the speed and aggression of corrections. I also changed

camera view to front camera as it may collect more interesting images.

In the third round I warned the spectators about parameter changes (without any

chance to test them) and yes, the drone was much more aggressive. But it was

also much more interesting to watch. As in previous runs if the target (landing

platform with oriented roundel) was not detected the drone moved slowly up.

When detection worked for last 100 pictures (the bottom camera runs at 60Hz)

then it was moving down to 1 meter above the roundel. As far as I remember both

robots cooperated in this run and it was terminated due to Husky leaving

road.

For the final round I modified Husky code. Yeah, it was big change

(diff)

… instead of horizon.set_differential_output(30, 30) I called

horizon.set_differential_output(30, 33) and the robot moved almost

straight. I had to stop it not because of the leaving the road but because of

queue of people in front of one kiosk. I was not complaining, because I lost

Heidi somewhere in the middle of the way so 90 meters/points was more than what

I could hope for.

Timeout - so here is at least video from the first run. Note, that the drone

starts with front video and then switches to bottom view.

19th May 2015 — USB Ghost

Last night I tried to read something about USB devices and how they can be

virtually unplugged. The situation was the same as during the contest —

missing both /dev/ttyUSB0 and /dev/ttyUSB1. It turned out that I was

actually lucky, because index 0 and 1 depends on the order how you plug-in the

cables and motor controller was expected on /dev/ttyUSB1.

In particular I was looking for use cases of /sys/bus/usb/drivers/usb/bind and /sys/bus/usb/drivers/usb/unbind.

administrator@cpr-sylv-01:~$ ls /dev/ttyU*

ls: cannot access /dev/ttyU*: No such file or directory

administrator@cpr-sylv-01:~$ lsusb -t

6-1:1.0: No such file or directory

6-2:1.0: No such file or directory

/: Bus 09.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/2p, 5000M

/: Bus 08.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/2p, 480M

/: Bus 07.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/2p, 5000M

/: Bus 06.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/2p, 480M

|__ Port 1: Dev 2, If 0, Class=vend., Driver=, 12M

|__ Port 2: Dev 3, If 0, Class=vend., Driver=, 12M

/: Bus 05.Port 1: Dev 1, Class=root_hub, Driver=ohci_hcd/2p, 12M

/: Bus 04.Port 1: Dev 1, Class=root_hub, Driver=ohci_hcd/5p, 12M

/: Bus 03.Port 1: Dev 1, Class=root_hub, Driver=ohci_hcd/5p, 12M

|__ Port 5: Dev 3, If 0, Class=vend., Driver=xpad, 12M

/: Bus 02.Port 1: Dev 1, Class=root_hub, Driver=ehci_hcd/5p, 480M

/: Bus 01.Port 1: Dev 1, Class=root_hub, Driver=ehci_hcd/5p, 480M

administrator@cpr-sylv-01:~$ lsusb

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 002 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 003 Device 001: ID 1d6b:0001 Linux Foundation 1.1 root hub

Bus 004 Device 001: ID 1d6b:0001 Linux Foundation 1.1 root hub

Bus 005 Device 001: ID 1d6b:0001 Linux Foundation 1.1 root hub

Bus 006 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 007 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 008 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 009 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 003 Device 003: ID 046d:c21f Logitech, Inc. F710 Wireless Gamepad [XInput Mode]

Bus 006 Device 002: ID 0403:6001 Future Technology Devices International,

Ltd FT232 USB-Serial (UART) IC

Bus 006 Device 003: ID 067b:2303 Prolific Technology, Inc. PL2303 Serial Port

I also tried to complete the upgrade with -f option, but failed again on

grub-probe: error: cannot find a device for / (is /dev mounted?). Installation finished. No error reported. /usr/sbin/grub-probe: error: cannot find a device for / (is /dev mounted?). dpkg: error processing grub-pc (–configure): subprocess installed post-installation script returned error exit status 1 Processing triggers for libc-bin … ldconfig deferred processing now taking place Processing triggers for initramfs-tools … update-initramfs: Generating /boot/initrd.img-3.2.0-54-generic

I am mentioning this if it could be related to ghost behavior because after

reboot I have seen the USB devices:

administrator@cpr-sylv-01:~$ find /sys/bus/usb/devices/usb*/ -name dev /sys/bus/usb/devices/usb1/dev /sys/bus/usb/devices/usb2/dev /sys/bus/usb/devices/usb3/dev /sys/bus/usb/devices/usb3/3-5/dev /sys/bus/usb/devices/usb3/3-5/3-5:1.0/input/input9/event9/dev /sys/bus/usb/devices/usb3/3-5/3-5:1.0/input/input9/js0/dev /sys/bus/usb/devices/usb4/dev /sys/bus/usb/devices/usb5/dev /sys/bus/usb/devices/usb6/dev /sys/bus/usb/devices/usb6/6-1/dev /sys/bus/usb/devices/usb6/6-1/6-1:1.0/ttyUSB0/tty/ttyUSB0/dev /sys/bus/usb/devices/usb6/6-2/dev /sys/bus/usb/devices/usb6/6-2/6-2:1.0/ttyUSB1/tty/ttyUSB1/dev /sys/bus/usb/devices/usb7/dev /sys/bus/usb/devices/usb8/dev /sys/bus/usb/devices/usb9/dev

I do not know.

22th May 2015 — OS Upgrade

I decided to go ahead with upgrade of Husky OS. Martin from CPR confirmed that:

Our Indigo release is available and stable. There was quite a bit of clean up

and new features, so I would indeed suggest upgrading! Instructions to do so

can be found here. Be sure to back

up your system just in case! Then it was a kind of when if not now and who

if not me question (there are three events at the beginning of next month and

I need Husky working for the demo).

There were couple minor issues like I do not have a keyboard or monitor at home

(we use only laptops and „smart” phones), so I borrowed one at work and used

TV as monitor. Yes, Husky is now our second „dog” and lives in our living

room. And yes, you can imagine how happy is my wife about it.

The second detail was USB disk. Based on the suggested link

http://wiki.ros.org/Robots/Husky I downloaded relatively small (29MB) ISO

image and used Universal-USB-Installer-1.9.5.9.exe to create bootable USB

drive. That worked fine for other disk and other system, except that here I

selected last option Try Unlisted Linux ISO. The format of USB disk for

some unknown reason failed and maybe that was the detail causing problems later

— Husky BIOS did not see it (I could mount it and use it with the system,

but not from BIOS). So I used another USB disk, format it manually and then I

could see two more options in boot list (F7 option). I did not write it down

but one started from T and that worked while the other from U, which

did not work.

There was another „stopper” before this step — it is highly recommended

that you backup whole disk and the first step new installation will do is to

wipe your disk! Do I really want to reinstall all the packages I installed over

the year? Will it work in new system? It is hard to tell and I am sure I forgot

some details like automatic ssh-tunnel for remote access …

The instructions were clear enough, I would maybe only stress a little bit that

the internet is must have and you should have rather fast connection to

download all the packages. Actually I had to fill only IP, mask, gateway and

DNS server and the rest was automatic up to changing the computer name. There

was one moment when I was particularly nervous (586/718) when for several

minutes nothing was happening (based on „progress bar”)?! But now is the

system replaced.

I tried ./rr.py, but it did not work, because Python wrapper

(clearpath.horizon) is not installed. Then I tried my

husky.py, which

worked, but Husky did not move. I suppose that I did not configure sending

necessary messages which was formerly done with ROS on boot up. It was 1am so I

rather went to sleep and starting now at 5am … is it really so much fun?

As my grandfather would say: „it is done, just to finish it”.

27th May 2015 — ssh tunnel

This note is rather for myself if I ever have to reinstall Husky again. I had

working ssh tunnel in start up script so whenever was Husky connected to

the internet I was able to connect to it from dedicated server. It worked fine

for me. A friend of mine did it for me and I did not know all the details. And

after reinstall I lost that.

There were two issues: how to start it always on boot and where is home for

root user (for .ssh configuration). The first was solved with

/etc/rc.local file. It was necessary to add line before exit 0 and

redirect input, output and error output, and run it with &.

The second was sorted with hint from

askubuntu.com

in particular line

sudo su - root

and then with echo $HOME I got searched /root. Yes, like total

beginner. Now it establishes tunnel again and I am installing OpenCV (again).

There is still unsolved issue with IMU, which requires calibration. This means

turn in place which I would rather do outside …

p.s.

OpenCV 3.0.0-rc1 ready to be used

28th May 2015 — Compass calibration

It is still Wednesday evening, but … before I finish, it could be already

Thursday. I was bit lazy to carry Husky outside so I decided to do the

calibration at home at the end. This was after I read the source code of

calibrate_compass

where you can see what it will in reality do:

- turn for 60 seconds

- the speed is 0.2 (radians) which corresponds to 12 deg/sec

- in total two slow complete spins

Result:

administrator@cpr-sylv-01:~$ rosrun husky_bringup calibrate_compass rospack: error while loading shared libraries: librospack.so: cannot open shared object file: No such file or directory Traceback (most recent call last): File "/opt/ros/indigo/bin/rostopic", line 35, inrostopic.rostopicmain() File "/opt/ros/indigo/lib/python2.7/dist-packages/rostopic/__init__.py", line 1753, in rostopicmain import rosbag File "/opt/ros/indigo/lib/python2.7/dist-packages/rosbag/__init__.py", line 33, in from .bag import Bag, Compression, ROSBagException, ROSBagFormatException, ROSBagUnindexedException File "/opt/ros/indigo/lib/python2.7/dist-packages/rosbag/bag.py", line 65, in import roslz4 File "/opt/ros/indigo/lib/python2.7/dist-packages/roslz4/__init__.py", line 33, in from ._roslz4 import * ImportError: libroslz4.so: cannot open shared object file: No such file or directory ROS appears not to be running. Please start ROS service: sudo service ros start

Hmm, strange. I found

roscd-and-roslaunch-problem

that this was due to not properly set LD_LIBRARY_PATH. After setting it

export LD_LIBRARY_PATH=/opt/ros/indigo/lib/

it went through

administrator@cpr-sylv-01:~$ rosrun husky_bringup calibrate_compass Started rosbag record, duration 60 seconds, pid [2483] Started motion commands, pid [2484] [ INFO] [1432750402.337673856]: Subscribing to /tf [ INFO] [1432750402.347888180]: Subscribing to /imu/data [ INFO] [1432750402.356517429]: Subscribing to /imu/mag [ INFO] [1432750402.372649791]: Recording to /tmp/calibrate_compass.oXJK/imu_record.bag. Test underway. Time remaining: 0 Shutting down motion command publisher. Waiting for rosbag to shut down. Computing magnetic calibration. Calibration generated in /tmp/calibrate_compass.oXJK/mag_config.yaml. Copy calibration to /opt/ros/indigo/etc/husky_bringup? [Y/n] [sudo] password for administrator: Restart ROS service to begin using saved calibration.

OK fine.

It turned out that the LD_LIBRARY_PATH was actually by default set:

administrator@cpr-sylv-01:~/git/husky/ros$ env | grep LD LD_LIBRARY_PATH=/opt/ros/indigo/lib:/opt/ros/indigo/lib/x86_64-linux-gnu OLDPWD=/home/administrator

but the problem was with my favorite tool screen (I use it for the case

the connection is broken).

On the other hand /imu/rpy still does not work (it is in rostopic

list, but:

administrator@cpr-sylv-01:~$ rostopic echo /imu/rpy WARNING: topic [/imu/rpy] does not appear to be published yet

The same for /imu/temperature or /husky/data/encoders. The

/imu/data works fine.

p.s. in meantime I started to work on alternative backup solution. I picked

several years old board alix3d2 from

„intelligent home” project, changed static IP to 192.168.1.12, set gateway to

192.168.1.1 and dns-namespaces to 8.8.8.8, plugged Ethernet cable into Husky

switch, installed git and python-serial, downloaded

husky.git, inserted USB for motion

control and IMU, and was almost ready to go. Minimal dependences, much lower

power consumption (I know for other projects we may need stronger PC). I had to

change the code a little

(diff)

to request basic info from motion controller (originally pre-requested by ROS

master), and that was it. Husky moved and logged all data.

29th May 2015 — imu.py

You'll learn to love ROS, just you wait ;) … this sentence is repeated in

my mind again and again (the author is Martin from CPR). I do not know. Maybe

if everything works, but it usually does not. It is not ROS failure, it is

just complex system under development. The problem can be in the cable, bad

configuration, burned capacitor or who knows? For me it is still extra level

which sometimes hides the problems and makes the whole thing even more complex.

Friends at Robotem Rovne 2015 said „we

use ROS, we have to, but we do not like it”. They told me stories about

colliding libraries and dedicated systems only for ROS. On the other hand

roboauto is using ROS for their autonomous

car and also commercial mobile platforms

mir-robotics is using ROS. Also

apple harvesting

robot was using ROS. I do not know.

At the moment I do not have working ROS system. I believe that it is just some

simple configuration somewhere, but somehow I prefer to learn and understand

the foundations on which are the ROS nodes based. I had problems with IMU year

ago, so this time I started from scratch (see

imu.py today's

history). It looks OK, it is

well documented and I am

starting to be confident to build demo based on it for next Tuesday

(http://dnipola.sk/). At the moment

imu.py is parsing only

temperature, but I also see what messages it is sending:

UM6_GYRO_RAW_XY = 0x56 UM6_GYRO_PROC_XY = 0x5e UM6_MAG_PROC_XY = 0x60 UM6_EULER_PHI_THETA = 0x62 UM6_TEMPERATURE = 0x76

And the driver expects Euler angles and quaternions … so maybe the

calibration actually broke the IMU node??? But it was not sending the

/imu/rpy before, so probably not. Note, that now I am using another board,

not the mini PC with ROS, so it could be interesting if the ROS node is

changing UM6 configuration?? I am just running out of time, so „another day”.

3rd June 2015 — dnipola.sk/Encoders

It is early in the morning and I am preparing Husky for today's show. Yesterday

it went fine — I was using only

rr.py code from

Robotem rovně and I was flying with the

drone above the heliport sign. So far so good. Note, that I installed

clearpath_py_r920.tar.gz on both computer boards in order to run rr.py.

I made two observations:

- the battery was really „drained” by the hungry computer — using the alternative board solved the problem. Husky was running all day on one battery charge … yes it was mostly off, or not moving but still 100 times better than when I was watching power bar going to zero before. Also there were no overheating issues even during the hot day.

- the communication once a while fails and it looks like the Husky temporarily stops (very short „blink” of red light)

Today I planned to repeat the yesterday show, maybe with some extra maneuvers,

so that people will see the drone also turning. The simplest solution was to

add extra bits of code into husky.py

(diff)

and I was surprised (again) to see raw data from encoders with status of

emergency STOP button:

True (2, 0, 0, 0, 0) True (2, 0, 0, 0, 0) False (2, 0, 0, 0, 0) False (2, 0, 0, 0, 0) False (2, 1, 0, 25, 20) False (2, 3, 3, 34, 32) False (2, 8, 7, 28, 27) … False (2, 218, 212, 11, 6) False (2, 218, 212, -23, -17) True (2, 215, 210, -32, -24) True (2, 214, 208, -29, -26) True (2, 214, 207, -29, -24) True (2, 214, 207, -29, -24) … True (2, 214, 207, -29, -24) True (2, 214, 207, -29, -24) False (2, 214, 207, -29, -24) False (2, 214, 0, 0, 0) False (2, 216, 2, 35, 49)

So shortly after eStop was released the second encoder was reset to zero while

the first encoder continued from origin value?! I am still convinced that

something like this can case the „jumps” I was observing when using ROS

couple weeks ago … sigh.

Just remark, I would not use eStop for starting/pausing my program but it is

the only button available on Husky :-(.