John Deere X300R

modified garden tractor into autonomous robot

OSGAR is a long-term project which will probably incorporate more than one robotic platform in the future. The project started in 2014, when we decided to modify the school garden tractor John Deere X300R into an autonomous robot. The project is carried out in cooperation with the Czech University of Life Sciences Prague. Blog update: 26/03/2023 (web refactoring)

Team

- Milan Kroulík — project leader (CZU/TF)

- Stanislav Petrásek — mechanics (CZU/TF)

- Tomáš Roubíček — electronics (RobSys)

- Jakub Lev — software/testing (CZU/TF)

- Martin Dlouhý — software (robotika.cz)

2014

The idea is relatively old: our garden needs mowing every second week and I would not mind some „helper”. Yes, in the meantime autonomous lawn mowers have made serious advances, but it is not only about our garden; there are much larger grass areas which require regular maintenance (castle gardens, for example).

In 2014 we also started a school project in which a self-driving tractor should cut grass and regularly monitor trees in an orchard. You could probably use GPS for basic navigation, but there are many obstacles (trees) which have to be avoided. You need sensors for this task, and once you have them you can use them for navigation as well.

This „orchard task” is similar to navigating a row in a maize field at the Field Robot Event contest. We have already gained some experience there (and prizes) with our robot Eduro. The very first experiment was to take Eduro, mount it on top of the tractor and manually drive it through the orchard to collect some data.

14th May, 2016 — Contest „Robot, go straight!”

The Czech season of outdoor competitions for autonomous robots begins in May in Písek. The contest is called Robot, go straight! (in Czech „Robotem rovně”) and the autonomous robots only have to navigate straight along a paved road in the central park. The track is 314 m long and only small electric vehicles are allowed.

In 2015 we asked the organizers if it would be possible to make an exception so that we could participate with our modified garden tractor John Deere X300R. The permission was granted, but we did not use it because the tractor was not ready yet.

In 2016 it looked much more promising because the school tractor was supposed to be at an exhibition in Brno one month before the contest. But there were problems with hydraulic components and this exhibition program had to be canceled.

But we wanted to push the project forward! We joked with Standa and Tomáš that we could short the safety switch under the seat and use it as an emergency stop, then just put a brick on the gas pedal, and version 0 would be ready. Well, what I did not know was that this „joke” would come true and that we would really need it for homologation and the first run:

The contest is great. It runs all day (there are four runs), so we had enough time to continue working on the robot. In the second run the robot was already driven by Python code and the pedal was controlled via CAN bus. We even collected several points, and the „good bye” joke came in the 4th run, when the tractor ran out of gas [we are so used to regularly checking the battery status, but not the gas; a new experience].

For a more detailed story see the Robotem rovně 2016 article.

4th June, 2016 — Contest „RoboOrienteering”

The contest „Robotem rovně” was great because it got us started. Finally! But how to proceed? We were quite exhausted from the contest, so we took a break the following Tuesday (our group meets once a week). And then I realized that contests are the only way to push our project further, i.e. to complete the steering mechanism. The only related contest (as far as I know) was RoboOrienteering, but the deadline was deadly. We had to finish the robot in two weeks (mainly Tomáš and Standa). The deal was that if I could write the navigation software in two weeks, they would have the hardware ready. And so we fought again!

The task for RoboOrienteering 2016 was to navigate to orange cones with different point values (their positions are known only a few minutes before the start, when the teams receive a USB disk with a configuration text file) and to drop golf balls there. There are two radii: 2.5 m for a double score and 5 m for a single score.
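The two radii can be turned into a tiny scoring helper. This is only a sketch of how I read the rule: the function name, the flat x/y coordinates in meters, the inclusive boundaries and the reading of „double” as twice the cone value are my assumptions, not the official contest rules.

```python
import math

DOUBLE_RADIUS_M = 2.5  # from the text: inner radius, double score
SINGLE_RADIUS_M = 5.0  # from the text: outer radius, single score


def ball_score(cone_xy, ball_xy, cone_value):
    """Score one dropped ball (hypothetical helper, not contest code).

    cone_xy and ball_xy are (x, y) positions in meters in a local frame.
    Boundaries are treated as inclusive, which is an assumption.
    """
    distance = math.hypot(ball_xy[0] - cone_xy[0], ball_xy[1] - cone_xy[1])
    if distance <= DOUBLE_RADIUS_M:
        return 2 * cone_value
    if distance <= SINGLE_RADIUS_M:
        return cone_value
    return 0
```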

Version 0, the simplest thing which can possibly work, was much harder here. To score even a single point it was necessary to have an integrated GPS and a functional ball dispenser, and the robot also had to be able to avoid collision with an obstacle (part of homologation).

My part was relatively easy — if you do not have any feedback data (encoders

or position of steering wheel) then your program has to be very simple. My

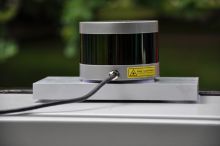

primary goal was to integrate new sensor

Velodyne VLP-16, so the first tests

were simple collision detection and STOP, something we wanted to have already

in Písek.

You can have a look at the video from the first outdoor test. It was not perfect. You can see „gaps” which correspond to dropped UDP packets, and at one point you can see the tractor jump back when an obstacle was detected. There was a non-trivial lag between reality and the processed data.

Yes, there was a bug in (my) software. After the program started, it waited for the start button. The Velodyne socket was already open, but I only started reading it after the button was switched on, so the first data processed were stale packets queued during the wait … well, fixed, not so many things damaged, ready for competition.
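One way to avoid this kind of bug is to throw away everything that queued up on the socket while the program was waiting for the button. The sketch below is not the fix we actually used; the function name and the 2048-byte buffer size are my assumptions.

```python
import socket


def drain_stale_packets(sock, max_packet_size=2048):
    """Discard all datagrams already queued on sock.

    Returns the number of dropped packets. The socket's previous
    timeout setting is restored before returning.
    """
    previous_timeout = sock.gettimeout()
    sock.settimeout(0.0)  # non-blocking: recvfrom raises when the queue is empty
    dropped = 0
    try:
        while True:
            try:
                sock.recvfrom(max_packet_size)
            except BlockingIOError:
                break  # nothing left in the receive queue
            dropped += 1
    finally:
        sock.settimeout(previous_timeout)
    return dropped
```

The idea would be to call it once, right after the start button is pressed and before the main loop starts reading scans, so that the first processed packet describes the world as it is now.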

The tractor first steered autonomously just a few hours before the contest (we arrived in Rychnov at 1am and started testing at 5am in the parking lot). So far so good. Unfortunately none of us is an expert in hydraulics and we did not know that there was too much pressure in the system. It broke during homologation (it was a bit uphill, so I had to increase the gas), but over the day Standa and his colleagues somehow magically managed to fix it even without proper tools. [now it is revised and working fine]

Video from RoboOrienteering 2016

For more info see the article from RO16.

23rd November, 2016 — Hydromotor video

Standa sent me a link to a very nice descriptive video on how the transmission works in our John Deere tractor:

16th May, 2017 — „Robotem rovně” (again) and the first garden test

Last weekend we participated in the contest Robot go straight! in Písek. It was primarily a show of how far we had moved since last year (brick on the pedal to pass homologation). The sensor set was: odometry (encoders on the front wheels with the position angle of the left wheel), GPS used for reference, a SICK LMS100 laser scanner and a dual IP camera with wide-angle lenses. For the first two runs we basically tuned the steering and collected real data of the environment. In the afternoon we added a simple correction from camera data to avoid green areas (yes, this is a bit strange for a lawn mower).

On the second day we tested our newly developed „cones algorithm” in the garden. For version 0 it is sufficient to prove that we can repeat a given pattern 10 times (or better infinitely many times, but we do not want to wait that long). You can see it in the video below. We also tried to turn the cutting mechanism on, but surprise, surprise, John Deere turns it off whenever the mower moves backward. So we switched to a slightly boring oval and did some experiments in higher grass.

Please forgive the quality of the video; it was taken with a 12-year-old digital camera (which is still good for pictures but not for videos).

18th October, 2017 — Mapping cones (ver0)

Yesterday we collected some data with the autonomous John Deere for creating a map with the positions of traffic cones. The traffic cones are used both to define the area where the robot should mow and for robot localization. Currently the LMS-100 laser scanner is still used as the primary measurement sensor, with the camera as a backup.

The navigation algorithm for version 0 was quite simple:

- select cone which is in front of you

- steer wheels towards the cone

- while the distance is more than 2 meters go

- otherwise turn 90 degrees left and repeat
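The four steps above can be sketched as a single decision function. This is an illustration only, not the code from the repository: the function names, the (bearing, distance) representation of detected cones and the "stop" action when no cone is visible are my assumptions.

```python
TURN_DISTANCE_M = 2.0  # from the text: keep going while the cone is farther than this


def select_front_cone(cones):
    """Pick the cone whose bearing is closest to straight ahead.

    cones is a list of (bearing_rad, distance_m) pairs relative to the
    robot heading; returns None when the list is empty.
    """
    if not cones:
        return None
    return min(cones, key=lambda cone: abs(cone[0]))


def navigation_step(cones):
    """Return (action, steering_angle_rad) for one control cycle."""
    cone = select_front_cone(cones)
    if cone is None:
        return ("stop", 0.0)  # assumption: the text does not cover this case
    bearing, distance = cone
    if distance > TURN_DISTANCE_M:
        return ("go", bearing)  # steer the wheels towards the cone and drive
    return ("turn_left_90", 0.0)  # close enough: turn left, then repeat
```

Calling `navigation_step` in a loop with fresh detections from the laser scanner would reproduce the go, turn, repeat pattern.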

Sure enough, we expected that this version would not be perfect, but the basic idea worked quite well. We managed to run only 3 tests before nightfall: one on the street in front of the CZU machinery hall and two in the test field area parking lot. In the first test the cones were placed too close to each other and JD did not manage to turn and align with the next cone in time. We faked it with a „moving cone” to return semi-autonomously back to base.

The other tests on the small parking area were more interesting. In particular, choosing the cone „in front of you”, defined as the one with the smallest angle from the straight-ahead direction, was not very good. A small tree trunk as well as a strong steel cable supporting some construction were, from the laser's point of view, also classified as cones. So the robot moved towards them instead of the original cone. The next version will also have to take into account the distance to the detected "cones" and ideally verify them with the camera.
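One possible way to bring distance into the selection is to combine angle and range into a single cost and to ignore detections that are too far away. This is only a sketch of the idea, not the next version itself: the weights, the maximum range and the function name are made-up values for illustration, and camera verification is left out.

```python
def select_cone(candidates, max_range_m=10.0, angle_weight=1.0, distance_weight=0.2):
    """Pick the candidate with the lowest combined angle/distance cost.

    candidates is a list of (bearing_rad, distance_m) pairs. Detections
    beyond max_range_m are ignored. All parameter values are illustrative
    assumptions and would have to be tuned on real logs.
    """
    in_range = [c for c in candidates if c[1] <= max_range_m]
    if not in_range:
        return None
    return min(
        in_range,
        key=lambda c: angle_weight * abs(c[0]) + distance_weight * c[1],
    )
```

With these weights a nearby cone slightly off-axis wins over a distant object that happens to lie exactly straight ahead, which is the situation that misled version 0.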

The traces as well as the code are publicly available:

- code is in feature/test171017 branch

- traces are described on github wiki

You can replay the logs in the viewer from the command line:

python.exe navpat.py replay --view osgar_171017_155343.zip

8th November, 2017 — Robotour Marathon (attempt 0)

You probably noticed that there is a new contest for outdoor autonomous robots: Robotour Marathon 2017/18. In order to participate, your robot has to be able to navigate autonomously on a paved road mapped in OpenStreetMap. Last week Jakub and Standa recorded the following video:

Note that the video was cut due to human intervention (you can download the whole log file here). For navigation, demo_wall.py from last year was used.

In general, autonomous navigation from point A to point B is also useful for small garden robots, so hopefully you will see some updates there next year …

22nd June, 2018 — John Deere at Field Days

John Deere was presented at Field Days 2018 in Všestary near Hradec Králové. Autonomous navigation within an area defined by four traffic cones was shown.

26th March, 2023 — Web refactoring

Note that there was a "minor" web refactoring as OSGAR was further developed (mainly for the DARPA Subterranean Challenge) and the autonomous tractor John Deere is no longer as active as it used to be five years ago. This may change again soon, but let's keep that track separate.

There are 3 new websites now: