Move Your Robot 2017

3rd year of Naio challenge

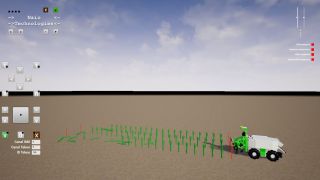

MYR2017 is an outdoor contest for autonomous robots organized by the French company Naio Technologies. Participants first have to demonstrate that they can control the Naio robot in Simulatoz (yes, the Z is correct), a simulator provided by the company. Only the best teams can then run their code on the real robot. The first prize is 1000 EUR in each category (simulation, real navigation and free style). Blog update: 1/12, Summary

Eduro Team Blog

25th November 2017 (5 days remaining)

The competition is part of FIRA (International Forum of Agricultural Robotics) organized by Naio Technologies. Two of my colleagues from the Czech University of Life Sciences in Prague are going there to see the presentations about robots … but not the competition. Three days ago I received an e-mail saying that it is possible to participate in the contest remotely!!!

There is not much time left, so I will focus on „what is going on”. First of

all it is necessary to download Simulatoz.

The simulator is based on Unreal Engine, but

it requires Ubuntu Linux (other ports are not planned). It is also quite

demanding — my first experiments with Virtual Box were not very

successful.

Useful information about the Naio robot communication protocol can be found in Move-Your-Robot-2017-Technical-Note.pdf.

There is a relatively small set of data messages corresponding to the sensors: accelerometer, gyroscope, GPS, Lidar (1 degree resolution, 180 degrees field of view), odometry, and raw stereo camera images. You can control motor PWM and the actuator payload (up/down).

Our source code is growing here: https://github.com/robotika/naio

How far are we? Well, I had to install Ubuntu on another notebook, so now I can

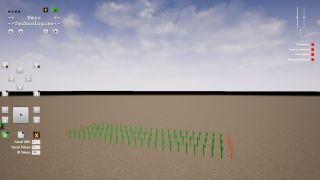

see the three testing worlds (simple one, stones and stones with grass). Our

robot so far just moves only straight, even across the plants … but it moves!

Joan, our remote referee, sent me three log files with the comment: "Going only forward, the robot ran into the leaks :) better fix this before :p" But it was moving in France (around 3 meters according to the encoder data)! At that time I could not get it moving on my machine … until Jakub from the team tested it on his machine with a result of 45cm (total traveled distance)?! It looks like the speed depends on the computer, and then I realized that my robot did move even in VirtualBox, but only 3cm per 1000 messages!

Why am I doing this? It is fun, for sure, but it also fits our long term goals for Robotour Marathon. Next year we would like to add an option to run the code on other robots and vice versa, so the remote Move Your Robot is exactly the first hand experience we need. We will see.

26th November 2017 (4 days remaining) — World1 ver0

OK, there is not much progress in simulated robot Naio driving, but … at

least some bits:

- record and replay is 1:1, including --force parameter to skip output assert

- there is class Robot with parameter functions get and put

- unittests are useful … see test_robot.py and test_logger.py

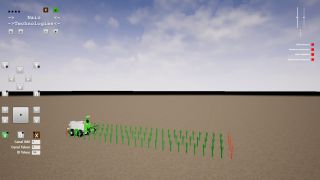

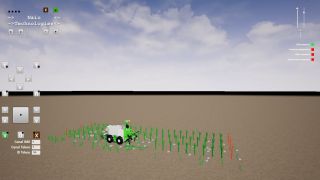

The robot moves and is able to complete World1 (well, it was able to do it

even by just turning motors on, but this looks smarter).

The results for World2 are not satisfactory yet (one quick test only):

There was only one stopper, but it forced me to finish the implementation

of 1:1 replay:

Fix

odometry ID … there was fix in the documentation, so make sure you have the

latest version!

Note, that there is also log file format change (new version starts with

"Pyr\x00"). Now it contains not only Naio messages but also timestamps (4

bytes with microsecond resolution), channel (currently used only for

Input(#1) and Output(#2), but the plan is also to record video channel).
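The exact record layout is not described here, so the following is only a minimal sketch of how such a log could be written and read back. The "Pyr\x00" signature, the 4-byte microsecond timestamp and the channel numbers come from the note above; the byte order, the one-byte channel field and the two-byte payload size are assumptions made for illustration, and the function names are hypothetical.

```python
import io
import struct

HEADER = b'Pyr\x00'          # file signature mentioned in the text
CHANNEL_INPUT = 1            # Input(#1)
CHANNEL_OUTPUT = 2           # Output(#2)
# ASSUMED record layout: uint32 microseconds, uint8 channel, uint16 payload size
RECORD = struct.Struct('<IBH')


def write_record(f, time_us, channel, payload):
    """Append one record; the timestamp wraps around after 2**32 microseconds."""
    f.write(RECORD.pack(time_us & 0xFFFFFFFF, channel, len(payload)))
    f.write(payload)


def read_records(f):
    """Yield (time_us, channel, payload) tuples from a log opened at its start."""
    if f.read(len(HEADER)) != HEADER:
        raise ValueError('not a new-format log file')
    while True:
        head = f.read(RECORD.size)
        if len(head) < RECORD.size:
            return
        time_us, channel, size = RECORD.unpack(head)
        yield time_us, channel, f.read(size)


# round trip through an in-memory file
log = io.BytesIO()
log.write(HEADER)
write_record(log, 2013, CHANNEL_INPUT, b'laser scan bytes')
write_record(log, 2500, CHANNEL_OUTPUT, b'motor command bytes')
log.seek(0)
records = list(read_records(log))
```

Note that a 4-byte microsecond counter wraps around after roughly 71.6 minutes, so a reader of longer recordings would have to detect the wrap.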

27th November 2017 (3 days remaining) — Log annotations

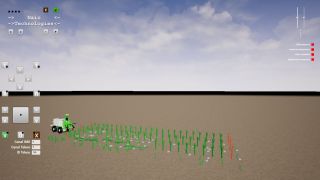

The robot finally managed to navigate to the end of the row, move extra 1.2

meters, turn 90 degrees right, move 0.7 meters, turn 90 degrees right and enter

next row. It could do it 10 times, but only in World1 (see

the

code)
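The geometry of this end-of-row manoeuvre can be checked with simple dead reckoning. This is only an illustration and not the code from the repository; the function names and the sign convention (right turns decrease the heading) are assumptions.

```python
import math


def move(pose, dist):
    """Move straight by dist meters along the current heading."""
    x, y, heading = pose
    return (x + dist * math.cos(heading), y + dist * math.sin(heading), heading)


def turn_right(pose, deg):
    """Turn right on the spot; right turns decrease the heading (assumed convention)."""
    x, y, heading = pose
    return (x, y, heading - math.radians(deg))


pose = (0.0, 0.0, 0.0)         # end of the row, heading along the row
pose = move(pose, 1.2)         # extra 1.2 meters
pose = turn_right(pose, 90)
pose = move(pose, 0.7)         # shift towards the next row
pose = turn_right(pose, 90)
x, y, heading = pose
print('x=%.2f y=%.2f heading=%.0f deg' % (x, y, math.degrees(heading)))
```

The robot ends 0.7 meters to the right of the original row and faces the opposite direction, i.e. ready to enter the next row.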

For World2 it is completely crazy. The laser is positioned near the ground and does not distinguish between grass and the leek. I also tried to log the camera (see PR3), but these are raw (752 x 480) stereo images, i.e. 722kB! I tried to record just 1 meter of motion and I collected only 3 images.

Well, originally I wanted to talk about Stream 0, which was so far used for

debug info and now it also contains tags for turn and straight motion …

but it is too late, so maybe after the contest … sorry.

28th November 2017 (24 hours remaining) — Testing Day

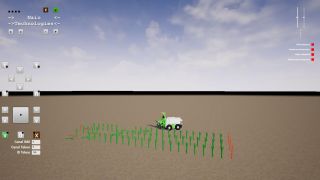

Today is the Testing Day when teams in Toulouse/France test their

algorithms on the contest simulator machine and also on the real field robots.

Our status is not great this morning, but at least I finally understand, what

is the goal, I hope! Yes, I am a bit slow … see

pull request 4 related to outside

field. The robot is supposed to handle all leeks, including the borders from

outside …

Here you can download log

file cutting the corners, with this result:

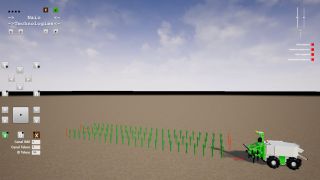

Yes, the code still does not properly handle slightly more complex scenarios

like World2 (you can

download 70MB log file with

stereo video stream).

And the result:

The plan is to ask Joan to do some remote tests on the real robot (there are

new command line parameters like --test 1m), fix the world 1 corners and

try more complex algorithm for simulated worlds 2 and 3.

7pm — testing is over

I must admit that it is not easy to develop, test and run an autonomous robot remotely. I think that Joan had quite a hard time, because I can be quite demanding when robots are involved.

I got first logs in the morning, still from the simulator. Joan wrote me: At

the end of the line, it turns too strong, miss the re-entry, but finally get a

outside line, and follow it, but after it get lost, and start to do crap and i

had to close it :p

In the afternoon was Naio robot finally available so Joan recorded --test

1m, --test 90deg and --test loops. I did not get any comment on

testing 1 meter, but turn did not work properly: test 90deg, on the robot it

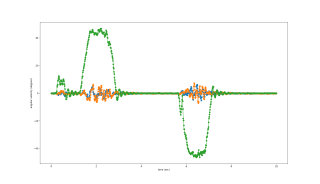

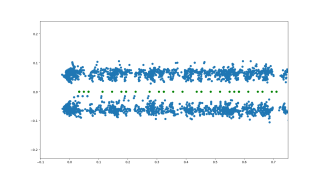

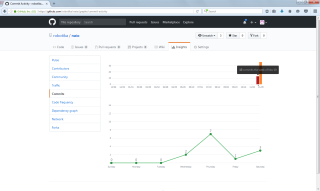

seemed more a 45 to the right and back to the left.. Here is the graph of

gyro angular speed:

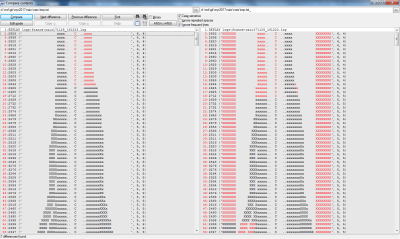

The reason was relatively simple, see the times of laser updates:

0:00:00.002013 0:00:00.053964 0:00:00.105295 0:00:00.157111 0:00:00.208913 0:00:00.261305 0:00:00.313005 0:00:00.364111 0:00:00.416124 0:00:00.467384 …

i.e. laser on the real Naio robot is running at 20Hz, while in simulator it was

only 10Hz. Yes, my fault — I used 0.1s directly

in the

code. Sure enough I forgot to update both copies (yes, you read it correctly!)

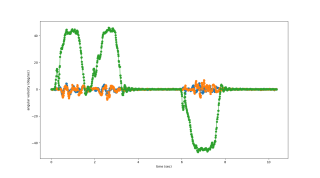

… I must refactor it. So another test finished: almost 90 on the go, but no

way back.. But the log was strange:

At the moment I still do not have any idea what happened there?! The motor

commands were constant for the whole time period. I know that the angle

integration with potentially variable timedelta is not exact, but …

strange.
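The effect of a hard-coded period can be illustrated with a small sketch. It integrates a constant angular speed once with a fixed 0.1s step and once with the measured time differences. The timestamps are the laser update times listed above; the angular speed of 45 deg/s and the function names are made-up values for illustration only.

```python
# laser update times copied from the log excerpt above (seconds)
TIMES = [0.002013, 0.053964, 0.105295, 0.157111, 0.208913,
         0.261305, 0.313005, 0.364111, 0.416124, 0.467384]
ANGULAR_SPEED = 45.0   # deg/s, hypothetical constant turn rate


def integrate_fixed(times, speed, dt=0.1):
    """Integrate assuming every update is dt seconds apart (the hard-coded variant)."""
    return speed * dt * (len(times) - 1)


def integrate_measured(times, speed):
    """Integrate using the real time difference between consecutive updates."""
    return sum(speed * (t1 - t0) for t0, t1 in zip(times, times[1:]))


believed = integrate_fixed(TIMES, ANGULAR_SPEED)
actual = integrate_measured(TIMES, ANGULAR_SPEED)
print('believed %.1f deg, actual %.1f deg' % (believed, actual))
```

With these numbers the code believes it has turned 40.5 degrees while the robot has turned only about 20.9 degrees, which matches the observation that a requested 90 degree turn looked more like 45.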

Joan also confirmed the differences: You are right, on the simulator, we

decreased frequency to improve performance. Note in the robot, you will have

often similar data from one frame to another, so 10hz on the simulator was not

a so bad idea :).

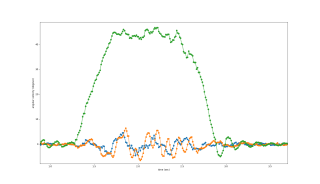

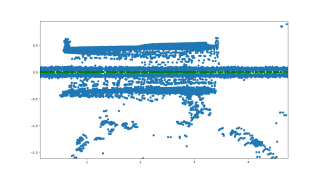

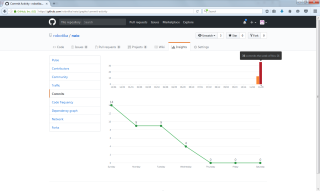

Then the --test loops: now the loops, it ran 6m without stop. … but I

do not know if it should or should not stop. This is the integrated laser

view:

Note ultra near laser reflections — the simple algorithm was basically blind.

Also the distance traveled reported by logparser.py is Total distance

11.90m … so another difference between reality and simulator?

So what now? To focus on better navigation on real robot or simulator?

Joan wrote: Tomorrow morning, we run simulation contest, and afternoon on the

robot, may be we can get some data, but not sure, as other teams will do some

tests on the real robot too.

The schedule for tomorrow (29/11) is:

- 10h-12h: simulator trial

- 14h-16h: open field trial

- 16h-17h: free style trial

- Venue: Quai des Savoirs (Toulouse)

9pm — update laser "dirt"

Well, the „problem” with laser is due to the limited view (which is in

reality mentioned in the PDF). See the ASCII art difference below:

… so the real laser sees the robot itself for angles: (-135deg, -90deg) and

(90deg, 135deg). It does not influence the navigation except recognition of

empty space. Fixed.
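A fix of this kind can be as simple as ignoring the readings where the robot sees itself. The following is a minimal sketch and not the actual fix: it assumes a scan with 1 degree resolution covering -135 to 135 degrees (271 readings) and uses 0 as the "no reflection" value; the function name is hypothetical.

```python
def mask_self_reflections(scan, first_angle_deg=-135, limit_deg=90, no_data=0):
    """Return a copy of scan with readings outside [-limit_deg, limit_deg] set to no_data.

    scan[i] is assumed to be the distance at angle first_angle_deg + i degrees.
    """
    return [dist if abs(first_angle_deg + i) <= limit_deg else no_data
            for i, dist in enumerate(scan)]


# hypothetical 271-point scan: robot body at 50 mm on both sides, open space ahead
scan = [50] * 45 + [3000] * 181 + [50] * 45
cleaned = mask_self_reflections(scan)
```

After masking, a test for empty space only has to look at the remaining 181 readings in front of the robot.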

29th November 2017 — The Competition Day

2am — Night Shift

I woke up to correct the cutting corners: the idea is that if for bigger radius

you get the same result as for smaller one then you are already on the field

edge and you should not correct towards the plants any more. But, as

XP advocates, test first

so I did. Jeeeeez! The fixes for real robot definitely broke everything even

for World1. :-(

Here are the reference tests:

- naio171129_015756.log: it missed the entry to the field, navigated back along the outer edge, then turned to empty space, lost direction and crossed the field at 90 degrees, destroying all plants on the way.

- naio171129_020503.log and naio171129_021127.log: it was too far from the field, so it immediately evaluated the situation as „this is the end of row”, started the U-turn and moved away from the field.

There is no longer need to think „what next?” because it is obvious. (Yeah, I

was thinking that I will give up the simulation league, but that round is at

10am while real robot is in the afternoon … priorities are clear now)

… it is time to implement enter the field.

3am — enter_field ver0

OK, here is the first trivial implementation:

60ac6b499.

I added also new test case called with parameter --test enter. It is not

very sufficient as both experiments naio171129_025616.log and

naio171129_030850.log left the field (robot did not manage to enter the

second row … maybe I should for simulation use hard-coded "0.1s" for time

period???)

3:30am — Refactoring and Joke of the Day

I did the turn right/left 90 degrees refactoring

(d73cebc7a),

but now comes the „joke of the day” or rather „of the night”. I lost my

Internet access! And guess why?

I have used UPC for 5 years basically without any problem, and yesterday I received an e-mail: "Allow us to inform you about planned maintenance of the UPC network in your location (XXX), which will take place from 29.11.2017 00:00 to 29.11.2017 04:00. Our services may be interrupted during this maintenance." … it is in the middle of the night, so who cares, right? I love „Major effects” (in Czech „generálský efekt”). On the other hand I am very happy that git works off-line.

Now even our local network is confused :-(

d:\md\git\myr2017-naio\naio>d:\Python35\python.exe myr2017.py --host 192.168.23.13 --note "test World1 with enter_field - hacked turn (1st run)" --verbose

could not open socket ('192.168.23.13', 5559)

… OK, it is running now, and also the Internet.

Sure enough with

the

0.1s hack World1 works fine … so what to do about it?! I wish Naio robot

sent also the timestamps, maybe NAIO02? Now I see the cut corners I was

going to fix … and worse (naio171129_034825.log).

4am — Cutting corners

It is time for

cutting

corners and get some sleep …

Maybe Alicia from Naio was right at the end: I understand that affraid you to

participe with so little time. … I am getting a bit tired of the view of

destroyed field, sigh. This time it is really „massive destruction”

(naio171129_041525.log).

I need a break … hopefully Jakub will wake up soon (in France), to have a

look at pull-7.

7am — Continuous Integration

… would be nice, i.e. while I was "sleeping" last 2 hours, the computer would

run the code on all worlds as many times as the machine managed to run it,

including final screenshots. … and I would check now. Well, next time,

maybe.

I should also try to run it locally, on Jakub's machine, and mainly on

competition server. They behave differently, which is pity and I will leave it

as comment to my summary. Well, in meantime

naio171129_072415.log was

just perfect — so we have to pray for good luck!

… and time to run to work without breakfast … maybe

7pm — Home, sweet home …

This was crazy day! I was like zombie, but nobody noticed, which is good. It

was also very interesting experience — probably something like the drone

pilots fighting on the other side of the planet Earth (yeah, Toulouse is not

that far).

Until today I still expected some „mud experience” in the soaked field like

the one on the Naio motivation picture. I was like blind, until

Joan recorded and shared with me this video:

What a beast! And what a funny living room setup?! But the behavior seems

somehow familiar … it is my code! Notice destruction after the return …

how could I expect that there are some people sitting just next to „the

field”? There are basically two modes implemented

in the code: navigate in the row of plants

and navigate along the outside boundary (which means at least 1 meter of space

from the robot lidar).

I still have several logs from Joan to review and generate some „interesting”

graphs for you. If I should pick the highlights, it would be:

- the „camel shape” for angular speed is due to stopping the robot (it is actually perfectly clear on the YouTube video). The motors have to receive update every 50ms and I used laser scans for robot.update() running at 20Hz. Do you see any coincidence?

- odometry on simulator is at 100Hz while in reality it is 20Hz

- the option --video-port 5557 completely froze the navigation (so I do not have any „war documentary”)
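The coincidence from the first bullet can be made explicit with the laser timestamps from the Testing Day. A minimal sketch, assuming that the robot stops whenever no motor command arrives within 50ms and that exactly one command is sent per laser scan:

```python
MOTOR_DEADLINE_US = 50000   # motors expect an update every 50ms

# laser update times from the Testing Day log excerpt (microseconds)
LASER_TIMES_US = [2013, 53964, 105295, 157111, 208913,
                  261305, 313005, 364111, 416124, 467384]

# time between consecutive laser scans, i.e. between consecutive motor commands
gaps = [t1 - t0 for t0, t1 in zip(LASER_TIMES_US, LASER_TIMES_US[1:])]
late = [gap for gap in gaps if gap > MOTOR_DEADLINE_US]
mean_gap = sum(gaps) / len(gaps)

print('mean gap %.1f ms (%.1f Hz)' % (mean_gap / 1000, 1e6 / mean_gap))
print('%d of %d commands would arrive after the deadline' % (len(late), len(gaps)))
```

In this excerpt every gap is slightly longer than 50ms (51.7ms on average), so under these assumptions a command sent once per laser scan always comes a little too late. Sending motor commands from a faster stream or from a timer would be one possible way around it.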

Tomorrow I should know more about the other teams and results …

30th November 2017 — The Results??

I do not have any official results yet (well, it is 5am, so no wonder), but I can share with you all the bits I have so far. I would start with the list of mails I got over the day from Joan. According to the original schedule, there was supposed to be Simulation (10am-12pm), Open field (2pm-4pm) and Freestyle (4pm-5pm).

This is still valid screenshot from

https://www.naio-technologies.com/Fira/en/move-your-robot/ so you may

better understand why I expected trials in the field.

Here is the list of all mails from Joan from yesterday (note that I also had a chat open with him later on, so he could share news with me like: "one team drive successfully the robot between the crops with pretty line changing (not on place)"):

- 10:04am --test 90deg of the morning on the robot : the robot turns to right about 45, and come back to its starting direction. naio171129_100319.log

- 10:19am Just tell me when you are ready, if you want we can open a hangout.

- 10:31am No problemo. you can add them into git, and for robot prefill ip/port 10.42.0.243 5555

- 10:38am It runs between crops :) well done. it's running 1m, stope a little, restart 1m..and so on, not very smooth, but operational. do you want i open a gmail hangout ? naio171129_103540.log

- 10:39am we start simulation tests, you have some time, i will pass you the last.

- 12:20pm level 1 and 2 naio171129_114313.log, naio171129_114421.log, naio171129_120150.log

- 1:52pm 1m test on real platform naio171129_135139.log

- 1:54pm now 90deg, not so bad, a little bit too strong on the right, but it turned back on the starting position naio171129_135321.log

- 2:00pm naio171129_135823.log

- 2:47pm i ran your test with 85 instead 90 in the trun90, it works well. on a line, the robot stops every 1 seconds and restart, maybe a timeout motor, you have to send commands every 50ms. i try to send you the video :) naio171129_144334.log, (video)

- 2:56pm gyro at 15ms if i remember

- 5:55pm a complete run. naio171129_174152.log

I uploaded all log files to OSGAR storage if you

would like to see all the details.

I also created two tags on GitHub:

You can replay the code 1:1 with --replay parameter:

python3 myr2017.py --replay logs\france\naio171129_114313.log

REPLAY logs\france\naio171129_114313.log

gyro_sum 30398 0:00:02.496553 23

gyro_sum 29780 0:00:02.467529 23

Exception LogEnd

REPLAY logs\france\naio171129_114313.log

Add --verbose if you want to see ASCII row navigation:

python3 myr2017.py --replay logs\france\naio171129_114313.log --verbose

REPLAY logs\france\naio171129_114313.log

enter_field

3966 (' X xxxx.. C …x x . XX. . ', 5, 5) (3, 2)

3666 (' XX xx xxx. C ..xx xx… X . ', 4, 4) (3, 2)

3640 (' X xx xxx. C ..xxx x .. XX . ', 4, 4) (3, 2)

3617 (' X X xx.. C .xxx XX. . X . ', 5, 4) (3, 3)

3793 (' X XX x x.. C .xxx XX . ..XX ', 5, 4) (3, 3)

3728 (' XX xxxx.. C ..x x . X . . ', 5, 5) (3, 3)

3818 (' XX x xxx. C .xxx x ..XX. . ', 4, 4) (3, 3)

3902 (' XX x xxx. C .x x xx. .XX . ', 4, 4) (3, 3)

3727 (' XX X xxx. C .xxx XX . .XX. ', 4, 4) (3, 3)

3727 (' XX X xxx. C .xxx XX . .XX. ', 4, 4) (3, 3)

If the output commands are not identical to what was used during navigation, you will see something like the traceback below. I see this check as one of the most important features, especially if there is somebody else on the other side of the world and you cannot be 100% sure that he/she used the latest version with proper parameters and configuration.

python3 myr2017.py --replay logs\france\naio171129_100319.log

REPLAY logs\france\naio171129_100319.log

Traceback (most recent call last):

File "myr2017.py", line 402, in <module>

run_robot(robot, test_case=args.test_case, verbose=args.verbose)

File "myr2017.py", line 365, in run_robot

play_game(robot, verbose=verbose)

File "myr2017.py", line 335, in play_game

navigate_row(robot, verbose)

File "myr2017.py", line 179, in navigate_row

enter_field(robot, verbose)

File "myr2017.py", line 161, in enter_field

robot.update()

File "d:\md\git\myr2017-naio\naio\robot.py", line 42, in update

self.put((MOTOR_ID, self.get_motor_cmd()))

File "myr2017.py", line 62, in put

assert naio_msg == ref, (naio_msg, ref)

AssertionError: (b'NAIO01\x01\x00\x00\x00\x02pp\xcd\xcd\xcd\xcd', b'NAIO01\x01\x00\x00\x00\x02p\x90\xcd\xcd\xcd\xcd')

… what is wrong? Open the log file and at the beginning (beware it is binary

file!) are stored command line parameters. In this file it is ['myr2017.py',

'--test', '90deg'] … so just let's double check:

python3 myr2017.py --replay logs\france\naio171129_100319.log --test 90deg

REPLAY logs\france\naio171129_100319.log

gyro_sum 29549 0:00:02.223993 43

gyro_sum -29620 0:00:02.152708 42

REPLAY logs\france\naio171129_100319.log

Yes, now we are sure that Joan ran the simulation code on the real robot (I had to hack it and make a modified copy named real_robot_only_myr2017.py), so it is expected that the robot again turns only 45 degrees. OK? Any questions?
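The replay check itself is simple. The following is a minimal sketch of the idea and not the actual myr2017.py code: only the assert line is taken from the traceback above, while the class name, the constructor arguments and the way the reference outputs are stored are assumptions.

```python
class ReplayOutput:
    """Compare commands produced during replay with the commands stored in the log."""

    def __init__(self, reference_msgs, force=False):
        self.reference = iter(reference_msgs)   # outputs recorded during the real run
        self.force = force                      # mimics --force: count but do not stop
        self.mismatches = 0

    def put(self, naio_msg):
        ref = next(self.reference)
        if self.force:
            if naio_msg != ref:
                self.mismatches += 1
            return
        assert naio_msg == ref, (naio_msg, ref)


# the two messages from the assertion shown above
recorded = [b'NAIO01\x01\x00\x00\x00\x02p\x90\xcd\xcd\xcd\xcd']
replayed = b'NAIO01\x01\x00\x00\x00\x02pp\xcd\xcd\xcd\xcd'

strict = ReplayOutput(recorded)
try:
    strict.put(replayed)
    diverged = False
except AssertionError:
    diverged = True

forced = ReplayOutput(recorded, force=True)
forced.put(replayed)
```

The strict variant stops at the first command that differs from the log, which is exactly the moment when the replay stops being a faithful copy of the original run.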

Note, that you may also ask for help: python3 myr2017.py --help

usage: myr2017.py [-h] [--host HOST] [--port PORT] [--note NOTE] [--verbose]

[--video-port VIDEO_PORT] [--replay REPLAY] [--force]

[--test {1m,90deg,loops,enter}]

Navigate Naio robot in "Move Your Robot" competition

optional arguments:

-h, --help show this help message and exit

--host HOST IP address of the host

--port PORT port number of the robot or simulator

--note NOTE add run description

--verbose show laser output

--video-port VIDEO_PORT

optional video port 5558 for simulator, default "no video"

--replay REPLAY replay existing log file

--force, -F force replay even for failing output asserts

--test {1m,90deg,loops,enter}

test cases
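A help text like this is what argparse generates. Here is a minimal sketch of a parser that would produce roughly the same options; it is a reconstruction from the help output, not a copy of myr2017.py. The default host and port and the argument types are assumptions, and test_case as the destination name is taken from the traceback shown earlier.

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser(
        description='Navigate Naio robot in "Move Your Robot" competition')
    # the default host and port are assumptions, the help text does not show them
    parser.add_argument('--host', default='127.0.0.1', help='IP address of the host')
    parser.add_argument('--port', type=int, default=5559,
                        help='port number of the robot or simulator')
    parser.add_argument('--note', help='add run description')
    parser.add_argument('--verbose', action='store_true', help='show laser output')
    parser.add_argument('--video-port', type=int,
                        help='optional video port 5558 for simulator, default "no video"')
    parser.add_argument('--replay', help='replay existing log file')
    parser.add_argument('--force', '-F', action='store_true',
                        help='force replay even for failing output asserts')
    parser.add_argument('--test', dest='test_case',
                        choices=['1m', '90deg', 'loops', 'enter'], help='test cases')
    return parser


args = build_parser().parse_args(['--replay', 'naio171129_100319.log', '--test', '90deg'])
```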

… you can tell, I was very bored last week:

It would be a lot of fun now to check all the recordings. BTW, it looks like the hack to switch to GYRO_ID instead of LASER_ID was probably not used in the end: git checkout MYR2017_REAL_ROBOT and python3 real_robot_only_myr2017.py --replay logs\france\naio171129_174152.log gives you the error I was speaking about: AssertionError: (b'NAIO01\x01\x00\x00\x00\x02\x00\x00\xcd\xcd\xcd\xcd', b'NAIO01\x01\x00\x00\x00\x02pp\xcd\xcd\xcd\xcd') … so what code was used for my participation in the MYR2017 contest?! Probably the previous one?? git checkout 6619ea4c2924dd50460d25be3a3a69f54517dee4, no, the same failure. Modify here the 90 to 85 … no, it does not work either. Hmm … so I am really wondering … maybe you got my point … note that there does not have to be anybody else involved. Do you remember what parameters and version you used on your robot yesterday at 10:13am? No? Well, better double check that it is 1:1 with what you are debugging now.
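To see what actually differs between the two messages in such an assertion, the frames can be split into fields. The layout used here (6-byte NAIO01 header, 1-byte message ID, 4-byte big-endian payload size, payload and 4 trailing bytes) is only inferred from the bytes printed above and should be checked against the technical note; the function name is hypothetical.

```python
import struct


def split_naio_frame(frame):
    """Split a frame into (msg_id, payload, trailer) using the ASSUMED layout."""
    assert frame[:6] == b'NAIO01', frame[:6]
    msg_id, size = struct.unpack('>BI', frame[6:11])
    payload = frame[11:11 + size]
    trailer = frame[11 + size:]
    return msg_id, payload, trailer


# first item of the assertion tuple is the replayed output, second is the logged one
produced = b'NAIO01\x01\x00\x00\x00\x02\x00\x00\xcd\xcd\xcd\xcd'
expected = b'NAIO01\x01\x00\x00\x00\x02pp\xcd\xcd\xcd\xcd'

for name, frame in (('produced', produced), ('expected', expected)):
    msg_id, payload, trailer = split_naio_frame(frame)
    print(name, msg_id, list(payload))
```

Under this reading both frames carry message ID 1 with a two-byte payload, presumably the two motor commands: the replayed code sends (0, 0) where the log contains (112, 112).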

The last comment for today: Milan, our team leader, called last night around

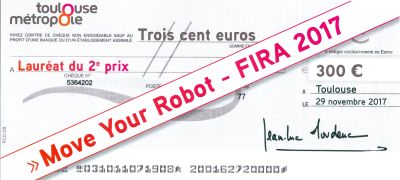

9:30pm from France, that we got **Silver** and there was only 1 point missing

to the winner. The organizers probably decided to merge all categories and

evaluate them as one. … so let's wait for the official results.

1st December 2017 — Summary

It is „funny” how the energy decreases exponentially after the action. The other waiting tasks start to call for attention and there is no longer any time pressure to complete this one. So let's hurry before I leave it open-ended …

One real trace is better than ten simulations

I do not like simulators (yes, and all my friends know it). And the MYR2017 contest just confirmed it. Later I realized that I had already played with robot navigation in a simulated world, see ALife III in the Webots environment. There it was much simpler, and the simulation was rather robot oriented. It was not as nice as Naio SimulatoZ with complete physics, bending sticks and destroyed plants, but it ran in iteration cycles, so the results were the same regardless of the speed of your computer. There was even an option to turn the visualization off (yeah, I like console tools) and then you could only see what the robot sees.

Simulation helps you to get started (if the installation is trivial and „hello world” works immediately). But it should be clear from the beginning „what is the goal?” In my case it is always a working real robot, and for that I see much more value in just turning the motors on and collecting some data. You will see strange artifacts like the lidar selfie from the first log. Also all sensor update frequencies and requirements for control (50ms for motors) should be sorted out within the first few experiments.

In MYR2017 case, the simulation actually slowed me down. Yes, it was dedicated

category, so there was no way around it, but at the end I had two different

code versions (for real robot and for SimulatoZ) and I should actually have

three versions due to my slower computer and fast competition computer in

France.

Naio protocol

I actually liked it. I did not spend any time trying to understand their ApiClient or ApiCodec, because they were written in C/C++ and I planned to use pure Python. So maybe some Hello Naio World! in Python would be nice?

I would also appreciate a table with expected update times for the various sensors. Yes, you can estimate them from the first log, but why not summarize them in a table? In particular when the simulation update rate differs from that of the real robot.

What I did not like were missing timestamps of the real-time (ECU or the

microcontroller) unit. We had this many many years ago (see

communication protocol used in

robot Daisy for example). The main program should not worry

about microseconds and the precise timing and the lower level should provide

that.

I also did not like the encoders/odometry implementation. There is no single

robot, as far as I remember, in our robots portfolio having only

single encoders and not quadrature

encoders (see nice

animation of the

principle). Also resolution 1bit is hard to believe these days. I would hope

for byte handling signed updates, maybe 16-bit to be sure about overflow,

underflow and failures. I know that the resolution is very rough — 6.465 cm,

so one 8-bit overflow is in 16.5 meters (!), but that is another HW issue.
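The overflow distance follows directly from the resolution, and a counter that wraps around is not a problem as long as the reader takes differences modulo the counter size. A minimal sketch, assuming a hypothetical 8-bit tick counter (which is not what the robot sends) and the 6.465 cm per tick quoted above:

```python
TICK_M = 0.06465        # distance per encoder tick, from the text
COUNTER_SIZE = 256      # hypothetical 8-bit counter


def tick_delta(prev, curr, size=COUNTER_SIZE):
    """Signed difference of two wrapping counters, valid for steps smaller than size/2."""
    diff = (curr - prev) % size
    return diff - size if diff >= size // 2 else diff


overflow_distance = COUNTER_SIZE * TICK_M          # meters until the counter wraps
forward = tick_delta(250, 3) * TICK_M              # wrapped forward by 9 ticks
backward = tick_delta(2, 254) * TICK_M             # wrapped backward by 4 ticks
print('overflow every %.2f m' % overflow_distance)
```

With signed differences the counter can wrap any number of times, provided the robot does not travel more than half of the overflow distance (about 8 meters here) between two readings.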

I also missed some speed controller, i.e. I would set the speed instead of

motor PWM duty cycle.

Stereo camera

That could be fun, but unfortunately I did not manage to record a single frame

on the real robot yet. I wonder if Joan would have more time for me to resolve

this issue.

Remote development

It was a crazy idea, but I am really glad I had the opportunity to try it. You realize how difficult even the simplest (you would guess) steps are! Now I see that recording a video of every test case is a must have: the context is important, and without a 360 degree surround camera on the robot it is almost impossible to guess. That first video was a real shock for me … but then everything was clear (or easier to understand).

I am glad that I wrote the simple tests (thanks to Cogito for teaching me their usefulness back in the first Eduro outdoor times). That helped. What I did not prepare was some kind of „test scenarios”, e.g. a traffic cone in front of the robot, so I could measure the distance traveled or the angle turned.

Thanks

I am again running out of time, so it is definitely time for thanks.

First of all I would like to thank Joan Andreu, without whom I would not have been able to participate. I would like to thank him a lot for his patience: it must be hard to organize the contest and at the same time support some crazy outsiders.

Then I would like to thank Alicia Vionot for handling the contest administration and for pushing me with her comment that she understands that I am afraid to participate when there is so little time (a week) left. Nothing else could motivate me more!

Then I would like to thank my friends Honza (Simi) and Jakub for their help in getting some Ubuntu working with SimulatoZ. Although I told them to give up if it did not work immediately, Simi tried to recompile the kernel and graphics card drivers to help. (If I had known, I would have stopped him!) And Jakub was the first to confirm that the robot moved in his setup, so naio171124_121400.log is probably the oldest log in which the simulated robot moved.

Finally I would like to thank my wife for her patience and for lending me her notebook … which I reinstalled from Win8 to Ubuntu. Well, she does not know it yet, and it is dual boot … but … and it was a much harder week with me than usual.

And for sure big thanks to Naio

Technologies for organizing this contest and good luck to them with further

robot development!

Thank you all

m.