Field Robot 2025

Milano, Italy

Field Robot Event 2025 (FRE2025) will take place in Milano, Italy, from June 9 to June 12, 2025, at Agriturismo da Pippo. This competition is open to universities worldwide, offering a unique opportunity to push the boundaries of autonomous farming and agricultural robotics. Blog update: 18/06/2025 — Official results task 3, 4, 5

Official announcement

Competition Challenges FRE2025:

- 🚜 Task 1: Autonomous Maize Field Navigation

- 🌽 Task 2: Autonomous Maize Field Navigation with Cobs Detection

- 🍎 Task 3: Fruit Mapping

- 🍄 Task 4: Bioluminescent Fungi Discovery

- ✨ Task 5: Freestyle

💡 Want to compete? Registration opens on March 3, 2025, and closes on April 25, 2025. The registration fee per team will be announced at the opening of registration.

🤖 Seeking sponsorship? New teams can apply for sponsorship to support their participation, with funding up to €1500 per team by CLAAS-Stiftung. More details and application link: https://www.claas-foundation.com/students/field-robot-event/application.

📢 Have questions about the rules? Join our FRE2025 Discord server now to get updates, connect with teams, and participate in the Q&A discussion: https://discord.gg/56unzZVBqn.

Read the rules now!

🌐 Website Update: Further event details will soon be available on our website: https://fieldrobot.nl/event/

📍 Getting here: The event venue is 12 km from Milano Linate Airport and 17 km from Milano Central Station (Google Maps).

CULS Robotics Team Blog (CRT-B)

CULS Robotics is a new Czech student team for Field Robot 2025! Yes, it took only 10 years to find a group of excited students and „start again”!

The team of 3 students was founded at the Czech University of Life Sciences Prague. The members are all in the last year of their studies, so their priorities collide a bit with details like finishing a diploma thesis or final exams … but their self-motivation is still strong, so hopefully we will see them all competing in Italy in June.

CRT will compete with the robot Matty M02 (the green one); the red one is a backup or will be used for the freestyle discipline. The robot is equipped only with an OAK-D Pro camera and it is programmed in Python using the OSGAR framework.

Team members:

- Simona (team lead)

- Ondra (software)

- Franta (hardware)

- Elena (ChatGPT)

Content

- w18 - Matty GO!

- w17 - Fake it until you make it (matty-go-cam.json, github, Rules 2.0)

- w16 - NumPy and obstacle detection

- w12 - Refactoring

- w11 - PR Task1

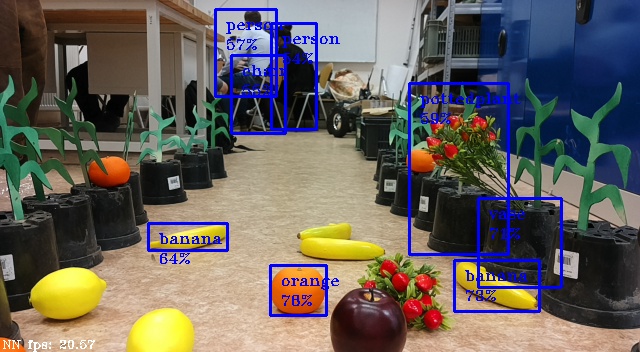

- w10 - Yolo fruit detection

- w7 - Outdoor testing

- w6 - Homeworks

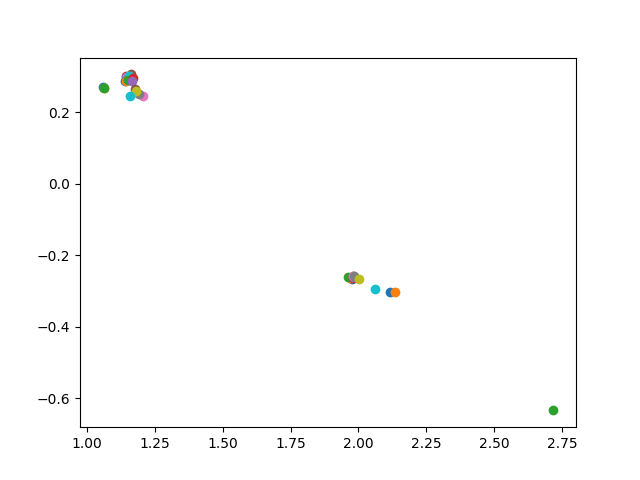

- w5 - Banana cluster

- w4 - Miss Agro and Independence week

- w3 - Final exams

- w2 - Task3 and Task4

- w1 - Zea mays

- Day 1 (Italian cuisine)

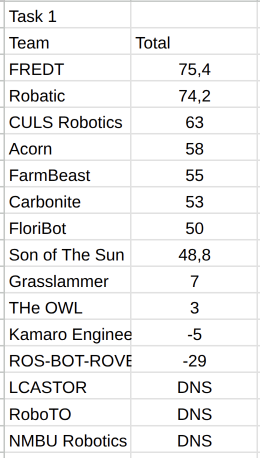

- Day 2 (Task1, 3rd place)

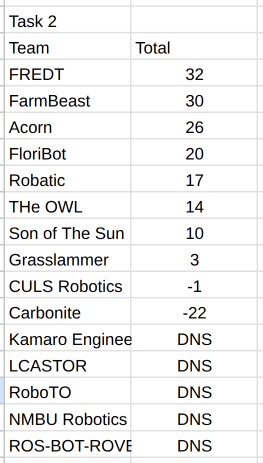

- Day 3 (Task3, 2nd place)

- Day 4 (Task5, GAME OVER)

- Longer summary

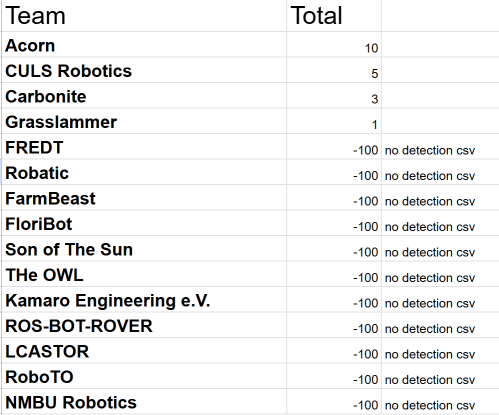

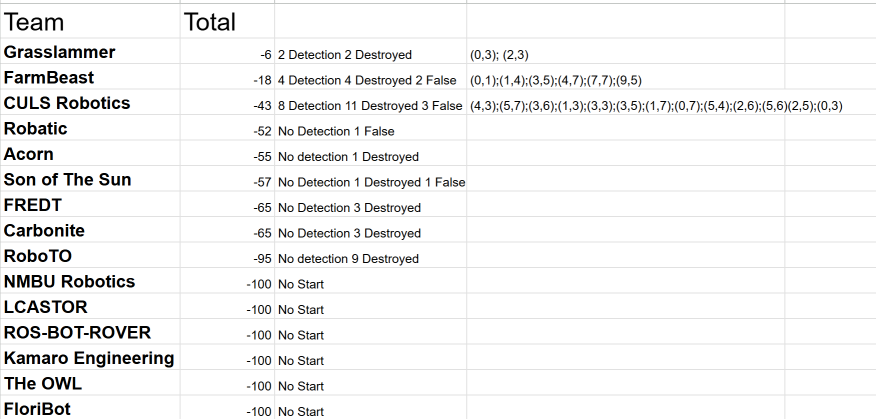

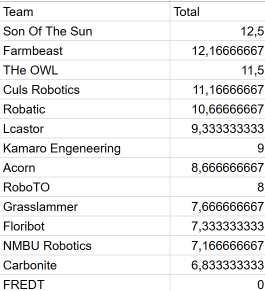

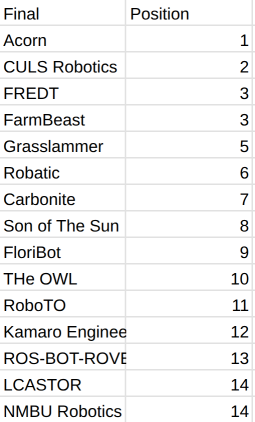

- Official results task 3, 4, 5

Week 18 (18/2) — Matty GO!

This was the very first time we met with Franta and Ondra at the university. It was great that they already had some basic experience with OSGAR and had the development environment prepared. The only thing needed was to update the Matty driver to the latest version.

Two robots for two students looked like a good match.

Unfortunately, Franta's Windows 11 did not recognize the ESP USB-serial device, so only Ondra could connect his laptop directly to the robot via USB. Nevertheless, the goal was to use the onboard ODroid, and for that PuTTY was enough.

The first lesson learned: try screen -dr in case somebody is already using the robot; otherwise:

```shell
screen
workon osgar
cd git/osgar
python -m osgar.record --duration 10 config/matty-go.json --params app.dist=0.5 app.steering_deg=-20
```

… and this will drive 0.5 m with the steering at 20 degrees to the right.

Week 17 (25/2) — Fake it until you make it (matty-go-cam.json, github, Rules 2.0)

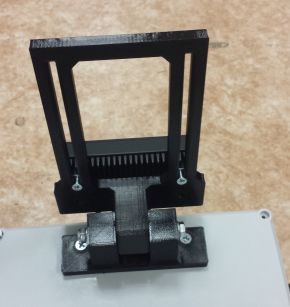

The second robotics session was again together with Franta and Ondra. Franta welded (!) a new holder for the OAK-D camera:

… so it already made sense to collect some basic data. Ondra combined OSGAR configuration files for driving and for collecting depth and image streams from the OAK-D camera. In the end he also managed to push his changes to GitHub, and thus resolved issues with keys etc. Here you can see the proof: matty-go-cam.json.

Finally, Simona shared the latest rules with us, FRE2025_RULES_v2_0.pdf. It is kind of funny that even now (2025-04-11) the old version v1.0 is still on the official website … (even the location is still in Germany and not Italy).

Week 16 (4/3) — NumPy and obstacle detection

This time the whole team finally met: Ondra, Franta and Simona. The goal was to get started with OSGAR programming (see the corresponding Czech notes here). The plan was to stop the robot if there is an obstacle in front of it. The input is depth data from the OAK-D camera, available as a 2D array of uint16 (unsigned 16-bit integers) holding distances in millimeters.

Step one: pick the distance in the middle of the image (a single pixel) and stop if it is smaller than 330 (I do not remember where they got that value). It turned out that 0 is often present, but it rather means I DO NOT KNOW, i.e. the distance is not valid. What to do in such a case? If the previous measurement was a long distance, then it is probably OK to continue moving. If it was a short distance, it would be safer to stop. And if you keep receiving zeros for a long time, then you do not know anything and it is also better to stop. Yes, such complex logic for a single pixel reading!
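The single-pixel rule with the „0 means I DO NOT KNOW” handling fits naturally into a small stateful filter. This is a minimal sketch, not the team's code: the class name ZeroAwareStop and the limit of 5 consecutive zeros (max_zeros) are hypothetical; only the 330 mm threshold comes from the text.

```python
import numpy as np


class ZeroAwareStop:
    """Decide STOP/GO from the center depth pixel, treating 0 as 'unknown'."""

    def __init__(self, stop_mm=330, max_zeros=5):
        self.stop_mm = stop_mm        # threshold from the blog (330 mm)
        self.max_zeros = max_zeros    # hypothetical: how long "unknown" is tolerated
        self.last_valid = None        # last non-zero distance in mm
        self.zeros = 0                # consecutive zero readings

    def should_stop(self, depth):
        height, width = depth.shape
        dist = int(depth[height // 2][width // 2])  # center pixel as a Python int
        if dist != 0:
            self.last_valid = dist
            self.zeros = 0
            return dist < self.stop_mm
        self.zeros += 1
        if self.zeros > self.max_zeros or self.last_valid is None:
            return True               # unknown for too long (or never known): stop
        return self.last_valid < self.stop_mm  # fall back to the previous valid reading
```

Treating "never saw a valid distance" as STOP is a deliberate safe default in this sketch.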

This week's Python lesson was that division with two / characters (//) means integer division, which is needed for indexing an array. So what we were interested in was the value of depth[400//2][640//2].
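A tiny demonstration of why // matters: in Python 3, / always returns a float, and NumPy rejects float indices. The 400x640 shape follows the index expression above; the zero-filled array is only a stand-in for real depth data.

```python
import numpy as np

depth = np.zeros((400, 640), dtype=np.uint16)  # stand-in for a depth frame

print(400 / 2, 400 // 2)  # 200.0 200: "/" gives a float, "//" an int

float_index_failed = False
try:
    depth[400 / 2][640 / 2]          # float index: NumPy raises IndexError
except IndexError as err:
    float_index_failed = True
    print("IndexError:", err)

center = depth[400 // 2][640 // 2]   # integer indices work
```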

Week 15 (11/3) — Camera holder upgrade, osgar.replay, NumPy mask

The most visible change this week is a new camera holder. It is 3D printed and allows two degrees of freedom: the camera can be tilted and/or shifted:

Note that there are also serious changes inside: a properly mounted Odrive, batteries, etc.

This time the coding was up to Ondra, so he learned how to use python -m osgar.replay --module app.

The single pixel in the center of the depth image (data[400//2][640//2]) was replaced by a rectangle. There the zeros (unknown distance) were not as frequent, but they still had to be handled. This was the introduction of NumPy masks:

```python
>>> import numpy as np
>>> a = np.array([[0, 1, 2], [3, 4, 5]])
>>> a
array([[0, 1, 2],
       [3, 4, 5]])
>>> a.shape
(2, 3)
>>> a == 0
array([[ True, False, False],
       [False, False, False]])
>>> a[1]
array([3, 4, 5])
>>> a[1][1:]
array([4, 5])
>>> a[1][1:3]
array([4, 5])
>>> a[1][1:2]
array([4])
```

Yeah, maybe it is time to check the NumPy introduction for absolute beginners.
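Applied to a depth rectangle, a mask like a == 0 (here inverted to != 0) keeps only the valid readings. A minimal illustration with an invented 3x4 patch; the numbers are made up and not from the robot:

```python
import numpy as np

# Invented 3x4 patch of depth readings in mm; 0 = unknown distance
patch = np.array([[0, 850, 900, 0],
                  [780, 0, 910, 1200],
                  [0, 0, 930, 1250]], dtype=np.uint16)

mask = patch != 0            # True where the distance is valid
print(mask.sum())            # 7 valid readings
print(patch.min())           # 0: the unknown values win
print(patch[mask].min())     # 780: the closest *valid* reading
```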

The artificial fruits and mashrooms needed for tasks 2-5 should be soon ordered. The team size is now 6 = 3 students + [Milan, Jakub, me].

New bumpers were brought for Matty M02, and Franta shoud mount them

next week. Finally Milan promissed to cut new set of corn/maize plants 40-50cm ("nice to have").

p.s. we also started to use git branches for new development, so feature/task1 was created.

Week 14 (18/3) — Row navigation

For the first time, the robot Matty M02 autonomously navigated a curved row! Do not expect a super-smart algorithm, but … it worked (video).

Algorithm:

- if the obstacles to the left and to the right are at roughly the same distance, go straight

- otherwise, if the left obstacle is closer, turn right

- otherwise (i.e. the right obstacle is closer), turn left
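The three rules translate almost one-to-one into code. A sketch only: the function name and the 100 mm tolerance for „roughly the same distance” are hypothetical, not values from the team.

```python
def steer_decision(left_mm, right_mm, tolerance_mm=100):
    """Return 'straight', 'right' or 'left' from left/right obstacle distances."""
    diff = int(left_mm) - int(right_mm)  # Python ints: no uint16 wrap-around
    if abs(diff) <= tolerance_mm:
        return "straight"                # roughly the same distance
    if diff < 0:
        return "right"                   # left obstacle is closer: turn right
    return "left"                        # right obstacle is closer: turn left
```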

The NumPy mask code had to be updated, because min/max of an empty array is not defined:

```python
>>> max([1, 2, 3])
3
>>> max([])
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ValueError: max() arg is an empty sequence
```

… and the same situation can happen with the non-zero mask (i.e. only valid depth data):

```python
>>> import numpy as np
>>> arr = np.array([0, 0, 0])
>>> mask = arr != 0
>>> mask
array([False, False, False])
>>> arr[mask].max()
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "C:\SDK\WinPython-64bit-3.6.2.0Qt5\python-3.6.2.amd64\lib\site-packages\numpy\core\_methods.py", line 26, in _amax
    return umr_maximum(a, axis, None, out, keepdims)
ValueError: zero-size array to reduction operation maximum which has no identity
```

Another update: instead of a line in the depth image, we now take bigger rectangles into account: one for the left obstacle, one for the right obstacle, and one just in front of the robot to STOP and avoid a collision.

Also note that to address a rectangle you need to separate the limits with a comma: data[row_begin:row_end, column_begin:column_end]. Chained brackets such as data[row_begin:row_end][column_begin:column_end] would slice the rows twice.
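The difference between the comma form and chained brackets is easy to miss, so the sketch first prints both shapes, then splits the depth image into left, front and right rectangles. The rectangle bounds (middle half of the rows, thirds of the width) and the function names are hypothetical; returning None for "no valid reading" is one way to avoid the empty-array ValueError shown in the sessions above.

```python
import numpy as np

data = np.arange(12).reshape(3, 4)   # 3 rows x 4 columns
print(data[0:2, 1:3].shape)          # (2, 2): rows 0-1, columns 1-2
print(data[0:2][1:3].shape)          # (1, 4): rows 0-1, then row 1 of that result


def closest(depth, rows, cols):
    """Minimum valid depth (mm) in depth[rows, cols], or None if nothing is valid."""
    box = depth[rows, cols]
    valid = box[box != 0]
    return int(valid.min()) if valid.size else None


def obstacle_distances(depth):
    height, width = depth.shape
    rows = slice(height // 4, 3 * height // 4)   # hypothetical vertical band
    return {
        "left": closest(depth, rows, slice(0, width // 3)),
        "front": closest(depth, rows, slice(width // 3, 2 * width // 3)),
        "right": closest(depth, rows, slice(2 * width // 3, width)),
    }
```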

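There was also an issue with numpy.dtype. The depth is an unsigned 16-bit integer, so evaluating „left and right obstacles are almost equal” overflowed in half of the cases: when the value being subtracted is larger, the unsigned difference wraps around to a huge positive number instead of becoming negative.

The easiest solution was to cast the values to normal Python integers (fix).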
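A minimal reproduction with invented readings: the minima of uint16 arrays are uint16 scalars, so their difference stays uint16 and wraps around. Casting to int before subtracting is one way to apply the fix described above.

```python
import numpy as np

left = np.array([900, 1200, 0], dtype=np.uint16)    # invented readings in mm
right = np.array([1000, 1100, 0], dtype=np.uint16)

left_min = left[left != 0].min()     # numpy.uint16 scalar (900)
right_min = right[right != 0].min()  # numpy.uint16 scalar (1000)

with np.errstate(over="ignore"):     # silence NumPy's overflow warning
    wrapped = left_min - right_min   # uint16 arithmetic: wraps to 65436

diff = int(left_min) - int(right_min)  # plain Python ints: -100
print(wrapped, diff)                   # 65436 -100
```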

The newly mounted bumpers were tested with new firmware and they worked fine. We will see whether the new black extenders collide with the plants, but let's leave it for now.

We also accidentally tested the battery alarm. The Odroid battery dropped to 14.5 V, and yes, the sound was clearly audible even from the closed box. The good news is that the PC worked without any problem, although the official minimum is 15 V.

Week 13 (25/3) — Priority override

Number 13 is not a lucky number, so we skipped it. … no, just kidding, but … yes, we skipped this week because all three students had to finish their diploma theses.

Priority override. Robots next week.

p.s. team registered for FRE2025 in Italy as CULS Robotics

p.s.2 the order of artificial fruit is still pending

Week 12 (1/4) — Refactoring Task1

OK, the robot Matty now navigates in the row, but at the end it needs to turn 180 degrees and enter the next row. This task is no longer easy (or nice) to do with callbacks, and a sequence of steps helps readability instead (see /guide/osgar#250407).

The code for Task1 looks like this now:

```python
def run(self):
    try:
        for num in range(10):
            self.navigate_row()
            self.go_straight(1.0)
            self.turn_deg_left(180)
            self.navigate_row()
            self.go_straight(1.0)
            self.turn_deg_right(180)
    except BusShutdownException:
        pass
```

… actually this is what the code above looked like later; in week 12 the robot just stopped at the end of the row.

p.s. we have the artificial fruit and mushrooms now … only strawberries are missing …

Week 11 (8/4) — PR Task1

OK, the robot finally started to move back and forth. You can see it in the video, which also served as data collection for the fruit detection.

I would consider Task1 (version 0) completed, so let's create a pull request and merge it into master. This is actually also the first exercise with GitHub and the "standard development routine". Note that the robot moved at 0.2 m/s, so in 3 minutes it would drive approximately 30 meters (at most 0.2 × 180 = 36 m). And if the rows are 20 m long, then we will do just 1.5 rows. 30 points? That would be OK for ver0.

Once the feature/task1 branch is merged, let's switch to a new branch feature/task2 and create task2.py and config/task2.json. It should basically be Task1 + detections.

The goal is to use Yolo models.

Week 10 (15/4) — Yolo fruit detection

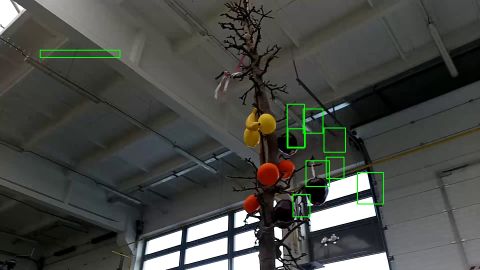

Fruit detection by Yolo

OK, this time it was not that exciting, but at least we got new strawberries and collected some pictures for further annotation. In the picture above you can see the output directly from an available Yolo model: banana works great, orange sometimes, lemon never. Also notice the detected plant and persons.

The attempt to lower the camera resolution and reduce the log file size failed. The OAK-D Pro supports 4K, 2K and 1K resolutions, so we have to be happy with the lowest, 1K. Sigh.

This time we (I mean the students) refreshed their knowledge of trigonometry and properly calculated the steering angle needed for a 75 cm turning radius. Franta also suggested using a single turn even for skipping a row, which could work, but we have not tested it.
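For a car-like robot, the usual trigonometry is the bicycle model: a turning radius R with wheelbase L needs a steering angle of atan(L / R). This is only a sketch under that assumption; Matty's actual steering geometry may differ, and the 0.3 m wheelbase is a hypothetical value, not taken from the blog.

```python
import math


def steering_angle_deg(radius_m, wheelbase_m):
    """Bicycle-model steering angle (degrees) for a given turning radius."""
    return math.degrees(math.atan(wheelbase_m / radius_m))


# hypothetical 0.3 m wheelbase, 0.75 m turning radius from the text
angle = steering_angle_deg(0.75, 0.3)
print(round(angle, 1))  # 21.8
```

With the sign convention of the osgar.record example (negative steering_deg turns right), a right turn would use the negated angle.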

It was a bit hard to get the detector running directly on the camera (OpenCV and Qt without a display), but depthai/examples/tiny_yolo worked. The problem with OpenCV was discussed here, with the solution:

```shell
pip uninstall opencv-python
pip install opencv-python-headless
```

Note that the tiny model is really quite small:

```
(depthai) robot@m02-odroid:~/git/fre2025$ ls -lh models/
total 6,1M
-rw-rw-r-- 1 robot robot 6,1M dub 15 21:30 yolov8n_coco_640x352.blob
```

Week 7 (22/4) — Outdoor testing

There was a bug in the Matrix. Yes, my bug: 24 - 8 is not 18, right? So now we have lost 2 weeks due to this mistake: not 9 weeks to the competition, but only 7!

This time we were testing outside (near the Round Hall) and it was great. I mean the weather. The strawberries were planted and it looked almost like a real run.

The lesson learned (did they really learn it?!) is that the setup is very sensitive to camera tilt. If the camera points up, you do not see the row for navigation. If it points down, you see the ground, which looks like an obstacle. BTW we had to disable obstacle detection because of the grass.

The change of light was very significant when you „looked into the sun” or the other way around. 10 fps was not sufficient for auto-exposure, so we experimentally increased it to 20 fps.

The banana detector (YoloV8) was integrated into task2.py. I know that there will be no bananas on the ground, but this one already worked.

Franta brought an Apple Watch, which could be fun to use for controlling the robot (as a remote start/stop), but … none of us has ever created an application for Apple, and the watch is not able to display web pages.

Homework:

- train new detector with collected images of artificial strawberries from outdoor run

- calculate (X, Y) position of detections in the image

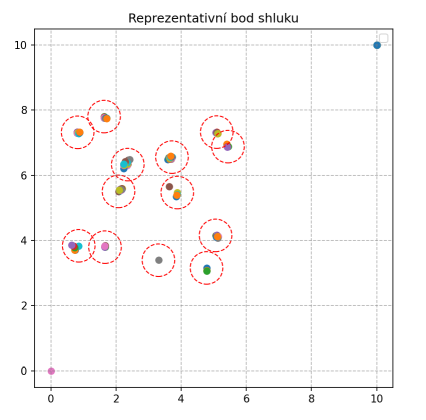

- group multiple detections, close positions, into single representative cluster

Week 6 (29/4) — Homeworks

Almost the whole homework was done. Simona annotated strawberries via Roboflow, which was free of charge, but once she wanted to download a pre-trained model, it asked for 13000 Kč! Yeah, so this is the business plan.

Jakub took the annotations later during our Tuesday session and we got a new model (torch pt-file) in less than an hour …

The computation of the fruit 2D position was correct (estimating the position relative to the robot from the bounding box in the image and the distance taken from the depth image). The only issue was the sign of the angle, and that is always an issue, so let's stick to the OSGAR mathematical convention, where the angle grows to the left (positive X is forward, positive Y is left).
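One way to turn a bounding box and a depth reading into a position in the OSGAR convention (X forward, Y left) is a pinhole camera model. A sketch under stated assumptions: the depth value is taken as the distance along the optical axis (Z depth), the principal point is the image center, and the 69 degree color FOV and 640 pixel width come from elsewhere in this blog. The function names are hypothetical.

```python
import math


def fruit_xy(bbox, depth_mm, image_width=640, hfov_deg=69.0):
    """Relative (x, y) in meters: x forward, y to the left (OSGAR convention)."""
    x_min, _, x_max, _ = bbox                 # pixel columns of the box edges
    u = (x_min + x_max) / 2                   # box center column
    cx = image_width / 2                      # principal point assumed at center
    focal_px = cx / math.tan(math.radians(hfov_deg) / 2)
    x = depth_mm / 1000.0                     # forward distance in meters
    y = x * (cx - u) / focal_px               # left of center -> positive y
    return x, y


def bearing_deg(x, y):
    """Angle grows to the left, like in OSGAR."""
    return math.degrees(math.atan2(y, x))
```

A box left of the image center gives a positive y and a positive bearing, which is exactly the sign that caused trouble.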

The grouping, or by its proper name clustering, is currently TODO.
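Until a proper clustering exists, a greedy distance-threshold grouping is probably the simplest thing that can work: each detection joins the first cluster whose center is within a radius, otherwise it starts a new one. This is a hypothetical sketch (the 0.3 m radius is invented), not a named library algorithm; DBSCAN would be a more standard alternative.

```python
import math


def cluster(points, radius=0.3):
    """Greedy grouping of (x, y) points; returns a list of (center, members)."""
    clusters = []  # each entry: [sum_x, sum_y, members]
    for x, y in points:
        for c in clusters:
            cx, cy = c[0] / len(c[2]), c[1] / len(c[2])
            if math.hypot(x - cx, y - cy) < radius:
                c[0] += x
                c[1] += y
                c[2].append((x, y))
                break
        else:  # no cluster close enough: start a new one
            clusters.append([x, y, [(x, y)]])
    return [((sx / len(m), sy / len(m)), m) for sx, sy, m in clusters]
```

The returned centers are natural candidates for the circles to draw and for the rows of the CSV report.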

The original Yolo detector worked best for bananas, so we continue developing with that. One of the problems is that the OAK-D Pro has color and depth cameras with different fields of view (color 69 degrees, depth 80 degrees). If we use only the overlapping part, we lose the depth field of view (FOV) needed for navigation in the row, so for now we live with a compromise and the alignment is only an approximation.
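The approximate alignment can be illustrated by mapping a color-image column to the depth image through its viewing angle. A sketch under simplifying assumptions: both cameras share one optical center (in reality the sensors are physically offset), both images are 640 pixels wide, and both follow a pinhole model with the 69/80 degree FOVs from the text. Function names are hypothetical.

```python
import math


def column_angle(u, width, hfov_deg):
    """Horizontal angle (rad, positive to the left) of pixel column u."""
    focal = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return math.atan2(width / 2 - u, focal)


def color_to_depth_column(u_color, width=640, color_hfov=69.0, depth_hfov=80.0):
    """Depth-image column that sees the same direction as color column u_color."""
    angle = column_angle(u_color, width, color_hfov)
    focal_depth = (width / 2) / math.tan(math.radians(depth_hfov) / 2)
    return width / 2 - focal_depth * math.tan(angle)
```

Under these assumptions the whole color image covers only roughly depth columns 58 to 582, which shows how much depth FOV would be lost by cropping to the overlap.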

Use python -m osgar.tools.lidarview --camera oak.color --camera2 oak.depth --pose2d platform.pose2d --bbox oak.detections task2-250429_192426.log to visualize the data. Note that different models use different input image sizes (cone detection used input_size 416x416, while the tiny YoloV8 used 640x352). This is currently hacked in the feature/lidarview-bbox-config branch, but it forces lidarview to parse the original config, which is not really nice. It is not merged yet, so detections are displayed incorrectly!

Drawing of the XY positions of detections was newly added to task2.py:

banana detection groups

New homework:

- run clustering and draw circles around detected groups

- save detections into CSV file CULS-Robotics-task2.csv

- convert and integrate the new strawberry model from Jakub (best.pt)

- get mentally ready for 3D — detection of fruit on trees will be more fun (3D geometry)

p.s. Jakub prepared a PR with an experimental, precise (rather offline) depth-color alignment

p.s.2 FRE2025 dates collide with Naše pole in Nabočany, so Milan will not go with us to Italy. :-(

Week 5 (6/5) — Banana cluster

There was some progress, although not as great as I had hoped for. The new strawberry model is not integrated yet, but bananas could be used as dummy detections for testing the clustering. Also, feature/task2 is now merged into master (again there was some issue with merge rights; it seems that GitHub has a policy that you already have to have something merged into master to gain the right to merge (???)).

Also, the CSV file is generated and circles are drawn on the graph.

What was interesting in later experiments was that the best.pt model did not really work, but last.pt was OK?! The only explanation we have now is that the training dataset was so small that best.pt overfitted??? The good news is that we have some torch model we can try to adapt for the camera.

A preliminary list of FRE2025 competitors from Simona:

- Uni of Applied Science, Munster

- Politecnico di Milano

- Uni of Maribor

- Politecnico di Torino

- Wageningen Uni

- Uni of Applied Sciences, Osnabrück

- Kamaro Engineering, Karlsruhe

- Namik Kemal Uni

- Uni of Lincoln

- Pontificia Universidad Católica del Perú

- Agricultural Schools in Żarnowiec - Poland

- Student Research Center, Überlingen

… and some fruit data collection … I would call it Franta's witchcraft with the broom.

… yes, notice the very sharp shadows and the fruit in the background.

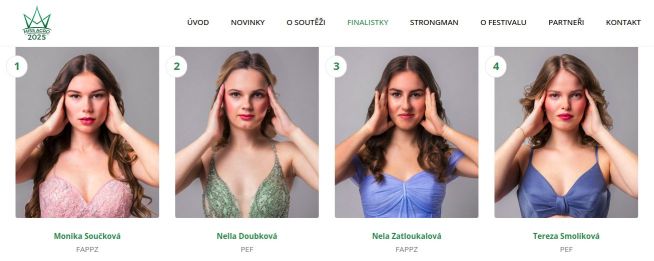

Week 4 (13/5) — Miss Agro and Independence week

We knew in advance that this week would be hard. Miss Agro was planned, which means that the school campus is partially closed with access restrictions, there are many people, noise (I mean music), etc.

… so Simona, Franta and Ondra tried outdoor testing already on Monday (actually the picture of Franta in the previous blog post was from that day). It was necessary to set up an alternative base station, and the practice took place in the parking lot. And it did not work. The sun was very strong and the auto-exposure correction was too slow. Nevertheless, new data were collected and extended the dataset for fruit annotation.

Ondra found the Terminus application for his mobile phone, which was much more convenient for starting the robot than his laptop. One drawback was that the code on Matty M02 was not the latest (maybe even the wrong git branch?), so the video was still logged as JPEG and was huge (the newer configuration uses H264).

We also met on Tuesday, but stayed indoors only. One of the new tasks was preparation for the Robotem Rovně 2025 contest, so that both Matty and the students will already have some experience with outdoor competitions BEFORE the Field Robot Event in Italy. Franta could not join the team, and moreover Milan asked for a live presentation „what are we doing” on Friday … so as a positive outcome, in the end everybody was able to operate the robot on their own. Definitely a major step towards independence!

What is also new is that Ondra prepared a pull request for the 2nd revision of task2, and in parallel there is ongoing development on feature/task3. The lesson could be called: how to live with multiple git branches …

Fixes in Task2:

- new strawberry detector is used

- cutting the bounding box and converting it to integers can create an empty box (zero height or width), which produces NaN values and fails to compute the strawberry position; fixed.

- far-away detections are probably caused by the bounding box in the depth data, so from now on we use only detections up to 2 meters

- the H.264 video codec is now used to reduce the size of log files (yes, the dark side is limited support in osgar.tools.lidarview, which can then only go forward)

- the update rate was experimentally increased to 20 Hz; the log size should double, but as experienced with the robot Pat at the Marathon, only the depth data will double, and we can react more quickly to changes
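The first two fixes can be sketched as one guard function: reject boxes that become empty after integer conversion, ignore unknown zeros, and drop anything beyond 2 m. Assumptions: the detector reports box corners normalized to 0..1 as (x_min, y_min, x_max, y_max), and the median of the valid pixels is a hypothetical choice of "the" distance; this is not the team's code.

```python
import numpy as np

MAX_DIST_MM = 2000  # "only detections up to 2 meters"


def detection_distance(depth, bbox_norm):
    """Median valid depth (mm) under a normalized bbox, or None if unusable."""
    height, width = depth.shape
    x0, y0, x1, y1 = bbox_norm                # values in 0..1
    c0, c1 = int(x0 * width), int(x1 * width)
    r0, r1 = int(y0 * height), int(y1 * height)
    if c1 <= c0 or r1 <= r0:                  # empty box after int()
        return None
    patch = depth[r0:r1, c0:c1]
    valid = patch[patch != 0]
    if valid.size == 0:                       # median of nothing would be NaN
        return None
    dist = float(np.median(valid))
    return dist if dist <= MAX_DIST_MM else None
```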

Yes, I should not forget that we have a new team member! Ondra asked ChatGPT what she would call herself, as she helps us quite often, and the answer was:

If I could really choose one single name, I would probably choose Elena. It sounds gentle and international, and it has a certain elegance and strength. Moreover, it is associated with the meaning „light” or „torch”, and that fits quite well with the role of an assistant who helps „light the way”.

… so here comes Elena. Welcome aboard!

p.s. I forgot Task4 and the info from Franta: the mushroom gloves!

Week 3 (20/5) — Final exams

- The demo on the last Friday presented by Simona went fine.

- Also the competition burn test was successful (142m) …

… and then this Tuesday all student members had their final school exams and thesis defences!

Yes, we skipped the robots for once …

… and all 3 successfully passed the exams. Congratulations!

p.s. … the day after … we have only 13 working days remaining, so back to work!

Franta took apart the old sprayer device:

But the question is: how to control it? Nobody remembers how it was done 15 years ago (I mean with all the details). Another issue is that we have run out of I/O pins on the ESP32: it looks like there are many free slots, but that is not true. One possibility is to use I2C and a board like this. Alternatively, Jakub suggested the Quido USB 4 inputs/4 outputs board he is using. Yes, both options require investigating the communication, implementing, integrating … too many I's.

There are newly updated rules v3.0, still with some unclear details, like how big the field is going to be if Task1 and Task2 should have the same layout, but in one it is 20 m and in the other 15 m … details.

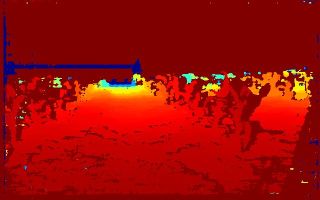

Task3 is progressing too. The new model is able to detect fruit at a distance of 4 m, but OSGAR lidarview has some issues displaying it:

Week 2 (27/5 - 31/5) — Task3 and Task4

Simona prepared a new fruit model and extended the CSV file generation with the fruit name and the z coordinate:

```
fruit_type,x,y,z
lemon,1.278541623686208,-0.0793241230763679,0.38624858655582195
banana,0.7182097598541184,-0.34320655712018333,0.44587716328586763
orange,0.6369713712810688,0.20776783237141128,0.31526377272139744
banana,0.42304297242866434,0.21600843381386822,0.3826214420846664
apple,1.318212421958211,0.2350255530683151,0.2938935457434849
```

Note that the z coordinate is not required, but it gives us a hint of how good or bad the results are.
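Writing the file with Python's csv module keeps separators and line endings right. A minimal sketch: the header matches the sample above and the row values are copied from it, while the function name is hypothetical. It writes to any text stream; for a real file, open it with open(path, "w", newline="").

```python
import csv
import io


def write_fruit_csv(stream, detections):
    """Write (fruit_type, x, y, z) rows with the header used above."""
    writer = csv.writer(stream)
    writer.writerow(["fruit_type", "x", "y", "z"])
    for fruit_type, x, y, z in detections:
        writer.writerow([fruit_type, x, y, z])


buf = io.StringIO()
write_fruit_csv(buf, [("lemon", 1.278541623686208, -0.0793241230763679, 0.38624858655582195)])
print(buf.getvalue())
```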

Franta prepared a mega-holder for the UV light for Task4. The test was done in a dark room and the mushrooms were detected as orange. So this is going to be ver0. It is necessary to cover the whole 10x10 meters with some space-filling curve; a spiral? This is a true agriculture task …
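A square spiral is easy to generate as corner waypoints: after the first edge, the legs come in pairs that shrink by one pass spacing each time, and the robot always turns left. A sketch only; the function name and the pass spacing (how wide the UV light and camera effectively see) are hypothetical, not from the team.

```python
def square_spiral(size, step):
    """Corner waypoints of an inward square spiral over a size x size field.

    Starts at (0, 0) heading along +X and always turns left; neighbouring
    passes are `step` apart.
    """
    headings = [(1, 0), (0, 1), (-1, 0), (0, -1)]  # +X, +Y, -X, -Y (left turns)
    lengths = [size]                                # first edge
    k = 0
    while size - k * step > 1e-9:                   # then pairs of shrinking legs
        lengths += [size - k * step] * 2
        k += 1
    x, y = 0.0, 0.0
    points = [(x, y)]
    for i, length in enumerate(lengths):
        dx, dy = headings[i % 4]
        x, y = x + dx * length, y + dy * length
        points.append((x, y))
    return points
```

For the 10x10 m area with a 1 m spacing, square_spiral(10.0, 1.0) yields 22 waypoints ending near the center.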

There is ongoing recovery and integration of the 15-year-old sprayer. We will use the Quido USB board from Papouch with commands like:

```
*B1OS1H\r
```

- *B1: prefix

- OS: instruction to manipulate relays

- 1: relay index (1-4)

- H: desired state, low/high (L, H)
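Building the command bytes from the format above is a one-liner; keeping the \r as an escape inside a bytes literal is what PyCharm later fought against. A sketch with a hypothetical function name; the resulting bytes could then be written to /dev/sprayer, e.g. with pyserial's Serial.write(), but the serial port settings are not given in this blog, so they are left out.

```python
PREFIX = "*B1"  # prefix from the command format above


def quido_relay_command(relay, on):
    """Build a relay command such as b'*B1OS1H\\r' (OS = relay instruction)."""
    if not 1 <= relay <= 4:
        raise ValueError("relay index must be 1-4")
    state = "H" if on else "L"
    return f"{PREFIX}OS{relay}{state}\r".encode("ascii")
```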

Yeah, the newly added USB device of course broke even the basic driving functionality … so now new udev rules are in place and all configuration files should use /dev/esp32 and /dev/sprayer.

There is finally a remote start/stop switch, made with the help of Elena2. Ondra tested the integration on Task1. The server is running on the robot, and the web page is at the robot's IP address, port 8888.
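A remote switch of this kind needs only the Python standard library. This is a minimal sketch, not the team's (or Elena2's) server: the /start and /stop paths, the plain-text responses and the shared state dictionary are hypothetical, and the robot loop would have to check the flag itself.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

state = {"running": False}  # shared flag the robot code would poll


def handle_path(path):
    """Update the shared state for /start or /stop and return a status text."""
    if path == "/start":
        state["running"] = True
    elif path == "/stop":
        state["running"] = False
    return "RUNNING" if state["running"] else "STOPPED"


class SwitchHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = handle_path(self.path).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8888):
    """Blocking server on all interfaces, port 8888 as mentioned above."""
    HTTPServer(("0.0.0.0", port), SwitchHandler).serve_forever()
```

Starting in the STOPPED state is the safe default: the robot only moves after an explicit /start.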

Week 1 (1/6 - 7/6) — Zea mays

We are already on the way to Italy, so it is time to finish this preparation report. The last week was surely challenging (as expected), and moreover I could not participate physically, so there were several remote sessions. The highlight was testing in the maize (Zea mays) field, which was finally big enough to be detected.

Later in the week it started raining, so it was very hard to test anything without destroying the Matty robot shortly before the competition.

The navigation in the row was not as good as expected, or did we really expect that it would work in reality too? Sometimes the robot saw a gap in the middle of the row; other times there was a problem with the turn at the end of the row.

The tasks were temporarily divided among the team members:

- Simona prepared navigation route for Task3

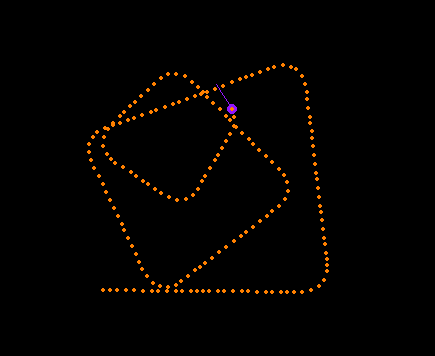

- Ondra was tuning the spiral pattern for Task4

- Franta finished the sprayer integration for the freestyle demo of Task5

Task3

The current navigation algorithm (suggested by Elena) iterates over the list of tree positions, navigates to the center, turns the robot so that its heading is tangential to the circle around the tree, and performs one complete 360-degree scan.
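The geometry behind "heading tangential to the circle" is the direction from the tree to the robot rotated by 90 degrees. A sketch of the geometry only (function names and the 12-point circle are hypothetical, not Elena's code): driving counterclockwise keeps the tree on the robot's left, clockwise on its right.

```python
import math


def tangent_heading(robot_xy, tree_xy, counterclockwise=True):
    """Heading (rad) tangential to the circle around the tree through the robot."""
    dx = robot_xy[0] - tree_xy[0]
    dy = robot_xy[1] - tree_xy[1]
    radial = math.atan2(dy, dx)              # direction from the tree to the robot
    heading = radial + (math.pi / 2 if counterclockwise else -math.pi / 2)
    return math.atan2(math.sin(heading), math.cos(heading))  # wrap to (-pi, pi]


def circle_waypoints(tree_xy, radius, n=12):
    """n points on a circle around the tree for one full 360-degree scan."""
    return [(tree_xy[0] + radius * math.cos(2 * math.pi * i / n),
             tree_xy[1] + radius * math.sin(2 * math.pi * i / n))
            for i in range(n)]
```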

The OAK-D camera is rotated by 90 degrees and tilted up to see fruit at heights between 80 cm and 130 cm (the artificial tree should be 150 cm high).

An indication that something is wrong is visible in the following picture from osgar.tools.lidarview:

… and sometimes there is even a problem decoding H.264 images:

What is going on is clear from the OSGAR log file and the oak.color and oak.detections streams:

```
0:00:05.941811 17 [41, 8332949129]
0:00:06.091140 18 [35, 8332648868]
0:00:06.111237 17 [44, 8333099057]
0:00:06.143906 18 [36, 8332698856]
0:00:06.144782 17 [45, 8333149042]
```

corresponding to

```
17 oak.color_seq       5494 | 437 | 6.9Hz
18 oak.detections_seq  5873 | 466 | 7.4Hz
```

Note that the camera should run at 20 FPS and here we are getting 7 Hz?! Frame drops and delayed detections. Obviously the fruit model is not as optimized as the original tiny Yolo model. Next we tried to switch from H264 back to the default MJPEG, but the results were the same, except that the pictures were not corrupted (and the log was 8 times bigger). Finally we slowed down to 8 Hz and then it was more or less OK … still not perfectly ordered (color and detections), but at least with no missing frames.
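The frame drops and delays can be read out of the log lines mechanically. This sketch parses exactly the five lines quoted above, assuming the list holds [frame sequence number, device timestamp in microseconds]; the microsecond unit is an inference (consecutive frames differ by about 50000, i.e. 20 FPS), not something documented here.

```python
# The five log lines from above: time, stream id, [sequence number, timestamp]
LOG = """\
0:00:05.941811 17 [41, 8332949129]
0:00:06.091140 18 [35, 8332648868]
0:00:06.111237 17 [44, 8333099057]
0:00:06.143906 18 [36, 8332698856]
0:00:06.144782 17 [45, 8333149042]"""

COLOR, DETECTIONS = 17, 18  # stream ids of oak.color_seq and oak.detections_seq


def parse(text):
    records = []
    for line in text.splitlines():
        _, stream, rest = line.split(" ", 2)
        seq, stamp = (int(v) for v in rest.strip("[]").split(","))
        records.append((int(stream), seq, stamp))
    return records


records = parse(LOG)
color = [(seq, stamp) for stream, seq, stamp in records if stream == COLOR]
dets = [(seq, stamp) for stream, seq, stamp in records if stream == DETECTIONS]

seqs = [seq for seq, _ in color]
missing = [s for s in range(seqs[0], seqs[-1] + 1) if s not in seqs]
period_us = (color[-1][1] - color[0][1]) / (color[-1][0] - color[0][0])
lag = color[-1][0] - dets[-1][0]  # frames between newest image and newest detection
print(missing, round(1e6 / period_us, 1), lag)  # [42, 43] 20.0 9
```

So under that assumption the camera really produces about 20 FPS, color frames 42 and 43 never reached the log, and the newest detection is 9 frames behind the newest image.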

OT we are in Germany now ... (10am)

Task4

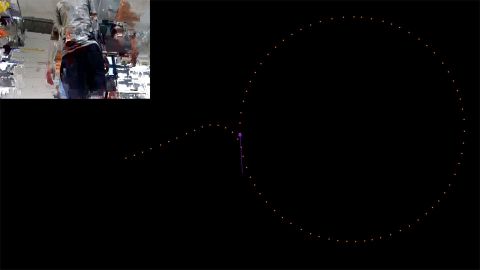

Ondra had some fun with turning „precisely” 90 degrees. The first attempt was funny enough that I would take it as a new logo:

New FRE logo?

The next version calculated the angles precisely, but … there is still some transition period from going straight to the full turn and vice versa.

And the camera stopped working. I hope that it is just temporary, but I am not sure. The USB-C connector is really very bad; no wonder Jakub prefers PoE cameras! I am mentioning the camera because it has an IMU inside, so one solution would be to navigate to the desired azimuth instead of a perfect 90-degree turn.
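A proportional controller on the wrapped heading error is one common way to steer to a desired azimuth. A sketch only: the gain of 1.5 and the 30 degree steering limit are hypothetical, and reading the heading from the camera IMU is not shown (no particular API is assumed).

```python
import math


def wrap_angle(a):
    """Normalize an angle in radians to the range [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def steering_to_azimuth(heading, target, gain=1.5, max_steer=math.radians(30)):
    """Proportional steering command (rad, positive = left) toward a target azimuth."""
    error = wrap_angle(target - heading)  # shortest way around, sign = direction
    return max(-max_steer, min(max_steer, gain * error))
```

The wrapping matters: from a heading of 350 degrees to a target of 10 degrees, the error is +20 degrees (a short left turn), not -340 degrees.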

Task5

In the meantime the old sprayer was completely renovated and integrated into Task5.

And a quick check before packing all the stuff into the car … and yes, we almost burned the pumps. :-( … a copy-and-paste error in the simple test code. We will see in Italy. BTW it was a real fight with PyCharm to convince it that I wanted b'*B1OS1H\r' in my code; sometimes I hate these smart a … s..s….es

Day 1 (Italian cuisine)

The 1st day of Field Robot Event 2025 is over, almost. I mean it is only 11pm, we are back at our home and there is plenty of work to be done.

But it was a nice day. It started with a joke about why Ondra took long pants and was ready for rain, while Simona focused on sunscreen and prepared for high temperatures. It is great that you can check the weather anywhere in the world … but there is Milan vs. Milán. One is obvious, the one in Italy, with really high temperatures expected, blue sky and a lot of dust rather than rain (so far). The other Milán is in Colombia, and it is raining there right now.

Today was supposed to be the testing day, when teams arrive, register, unpack their stuff and do some test drives in the field. The food was supposed to start with dinner, but the reality was slightly different. Agriturismo da Pippo looks like a luxury hotel with a restaurant where you feel that you do not belong. But we do. For starters there was a large table with cakes, pies, fruit, … and it was continuously refilled all day. But that was nothing compared to the dinner at 7:30pm in the restaurant on the 2nd floor. Jesus, how many dishes did we have? Five? I am not sure, but it took 2.5 hours and the food and wine were excellent!

Yeah, that was my highlight, after which it was dark and we could not continue testing … so let's roll back to the beginning, the morning.

FRE2025 schedule

Ondra was improving Task1 while Simona and Franta worked on the Task2 integration with the sprayer and siren. The results are +/-. Originally the robot failed to navigate even 2 meters. The maize is small, with gaps; moreover, the camera needs 15 seconds to adapt (yes, we do not want to switch to fixed exposure now). The check of the left and right areas was replaced by scanning multiple boxes and selecting with a distance limit of 1 meter. Just to be sure, we also replaced data.min() with np.percentile(data, 5). Finally, we increased the speed to 0.5 m/s and slowed down before the turn.
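The „multiple boxes + percentile” change can be sketched as: split the depth image into vertical strips, take the 5th percentile of the valid readings in each, and flag strips closer than 1 meter. The number of boxes (8) and the function names are hypothetical; only the 5th percentile and the 1 m limit come from the text. The test data show why the percentile helps: a single bad pixel dominates min() but not the 5th percentile.

```python
import numpy as np


def box_distances(depth, num_boxes=8, percentile=5):
    """Robust distance (mm) per vertical strip; None where nothing is valid."""
    height, width = depth.shape
    edges = np.linspace(0, width, num_boxes + 1).astype(int)
    distances = []
    for c0, c1 in zip(edges[:-1], edges[1:]):
        box = depth[:, c0:c1]
        valid = box[box != 0]  # drop "unknown" zeros
        if valid.size == 0:
            distances.append(None)
        else:
            distances.append(float(np.percentile(valid, percentile)))
    return distances


def near_boxes(distances, limit_mm=1000):
    """Indices of strips with something closer than the limit (1 m)."""
    return [i for i, d in enumerate(distances) if d is not None and d < limit_mm]
```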

Yes, there is more, like a safety correction to stay rather in the middle, checking 10 times „am I really at the end of the row?”, etc. If you would like to see the details, have a look at the merged pull requests.
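The „check 10 times” idea is a debounce: a single frame without plants is not yet the end of the row. A hypothetical sketch, assuming the checks are consecutive readings; how row_visible is computed (e.g. from the box distances) is left open.

```python
class EndOfRowDetector:
    """Declare 'end of row' only after N consecutive confirming readings."""

    def __init__(self, required=10):
        self.required = required  # "10 times check" from the text
        self.count = 0

    def update(self, row_visible):
        """Feed one observation; return True once the row end is confirmed."""
        self.count = 0 if row_visible else self.count + 1
        return self.count >= self.required
```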

I was not overseeing Task2, but as I see it now, the Rules v3.0 are very different in CSV reporting. The current goal is to demonstrate live detections with the siren, with indication by LED lights, and with the sprayer. But … there was a discussion whether this is the right moment, because this was planned for Task5, so the sprayer should rather be turned off. The final decision was to test the sprayer, and later Franta will solder it out …

But2 … the motors melted the plus and minus together = not good. Now it looks like even the Quido board is fried (port number 2), but hopefully tomorrow morning they will switch the LEDs to ports 3 and 4, and further experiments with the pumps will be postponed until AFTER completion of Task1234.

Oh, midnight was already 15 minutes ago, so I will just add a picture from Jakub, as tomorrow we plan to get up at 6am …

p.s. 5:55am … time to wake up …

Day 2 (Task1, 3rd place)

Yes, it is almost midnight again, so let's dump at least one video from Task1. It was good, I would say great! This was the maximum we could get, and the secret pattern was Matty-friendly.

- Task2 video

- 3rd place announcement for Task1

Day 3 (Task3, 2nd place)

Today it is even crazier - it is 1:16am, so I am really not going to post any long stories here today … so "just" some highlights

Day 4 (Task5, GAME OVER)

OK, the joke of the day was that for Task4 from last night we got minus 43 points, but … it was enough to reach 3rd place! Yes, even the winner got negative points. And yes, there is a much safer solution, which would detect a single mushroom and end … I still laugh once in a while when I think about it.

Task5 was the final exam for the students, working under stress conditions. The idea for our freestyle was that we use Matty M03 (the red one, with UV light) as the main robot searching for weeds, and once it detects any (a strawberry bush), a TCP message is sent over ZeroMQ and Matty M02 (the green one, with siren and sprayer) goes blindly to the received coordinate. And it looked like this. For me a pretty good version 0! Well, the judges did not like it too much, but I did! Thanks again, TEAM!

p.s. the last prize is in the category overall competition winner (2nd place), which is „determined based on cumulative results”.

Longer summary

We are on the way home to Prague, Czech Republic, and the current estimate is 10.5 hours without breaks, so we have time. Well, I mean the non-drivers like me.

Let's summarize the contest and add some more details I omitted last couple of days.

Time

Where to start? I would start with the statement that I am very happy that this new team emerged, and it was a great pleasure to fight all the battles with them to the very end. Almost everybody asked the students how long they had worked on the robot, but that is probably the wrong question. Maybe they meant how much time they devoted to the contest preparations? And even if you spend all your free time on the robot, you may not have that much time.

If I use this blog to find the answer, then it would be since mid-February, or 16 weeks (with the 2-week correction). At the beginning it was Tuesdays only, and at the end even weekends.

Hardware — Matty base platform

The robot used at FRE2025 was Matty M02, with Matty M03 as a full backup. The robot is relatively new: Martin Locker designed and printed his Matty M01 (blue) in November 2024, and it was designed from the very beginning with cloning in mind. Some FRE teams even remarked that it looks like we are in serial production.

Most of the parts are 3D printed, the frame is cut from aluminium profiles, and the boxes are standard items used for electrical installations. Transparent bumpers were added later, when I argued that SW does not always work the way you want it to and that I do not want to see my robots committing suicide.

The platform is still in a prototype phase, as it had not gone through any serious stress test before. FRE2025 was surely one that counts.

To be fair, the robots also took part in Robotem Rovně 2025 (both M02 and M03) and in Tulkákem po krasu (M01 only).

I got the robots as a construction kit for Christmas, and it was great! Very precise, with a complete bill of materials, URLs of e-shops where to buy the servos, boxes, batteries and the ESP32 driver, and a photo manual (yes, there was some text, but the pictures helped a lot with putting it all together).

The original motivation was to build (or print out) 5 identical robots for the DARPA Triage Challenge - Systems 2025.

There was an extra requirement that one person should be able to take 2 robots along as standard luggage. Yeah, the contest takes place at the end of September 2025 in Georgia, USA, and we/I need to get them there cheaply.

As several other teams expressed interest, please feel free to contact us if you would like to buy the platform or cooperate with us on further testing and improvements.

The platform is designed to minimize power consumption, so a relatively small battery can drive it for about 2 hours (estimate). It is controlled by an ESP32 board, which is probably the weakest part so far: although it looks like there are plenty of I/O ports, that is not true, and we basically ran out of them after integrating the bumpers. For any extra peripherals, an add-on board is needed.

Software — OSGAR framework

I mostly forget about it, as we have used OSGAR for many years now, but … it actually is quite valuable. Yes, it is open source like ROS or ROS2, but the community around it is rather small. We also use it as the base for developing commercial projects, so we are motivated to keep it up to date and maintained. Yes, we also know the internals, so if something breaks we should (in theory) be able to debug it and fix it quite quickly. From our talks with other teams, this is not the case with ROS.

The team's routine work is:

- code the simplest possible version

- run it on target machine (robot in real environment)

- download and re-run the log file on the host computer (typically laptop)

- fix crashes, prepare new version, git commit, git push

- repeat
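The key step in this loop is that the log recorded on the robot can be replayed through the same code on the laptop, so a crash in the field can be reproduced at the desk. The sketch below only illustrates that idea and is not OSGAR's API: OSGAR has its own log format and replay tools, while here the log is assumed to be JSON lines of (timestamp, channel, data), and `record`, `replay` and the toy `steering_handler` (with a made-up gain of 0.5) are hypothetical names.

```python
import json
import os
import tempfile


# Hypothetical log format (one JSON object per line). OSGAR has its own
# log format and replay tooling; this only illustrates the idea.
def record(path, messages):
    """Write (timestamp, channel, data) tuples to a JSON-lines log."""
    with open(path, "w", encoding="utf-8") as f:
        for t, channel, data in messages:
            f.write(json.dumps({"t": t, "channel": channel, "data": data}) + "\n")


def replay(path, handler):
    """Feed logged messages, in order, to the same handler used on the robot."""
    outputs = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            msg = json.loads(line)
            outputs.append(handler(msg["t"], msg["channel"], msg["data"]))
    return outputs


def steering_handler(t, channel, data):
    """Toy deterministic node: steer proportionally to the row offset."""
    if channel != "row_offset":
        return None  # ignore other channels
    return round(0.5 * data, 3)  # hypothetical proportional gain


if __name__ == "__main__":
    live = [(0.00, "row_offset", 0.10), (0.05, "row_offset", -0.04),
            (0.10, "bumper", False)]
    # On the robot: the handler runs live and every input is logged.
    live_out = [steering_handler(*m) for m in live]
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "run.log")
        record(path, live)
        # On the laptop: re-run the same handler on the recorded log.
        assert replay(path, steering_handler) == live_out
        print(live_out)
```

This only works if the processing code is deterministic given its inputs, which is a good habit anyway: the replayed run must produce the same outputs as the one in the field.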

What I heard from other teams is that they do not even bother to record ROS bags unless a specific data collection is organized.

Sensors

I wanted to use lightweight sensors for our small robot: the camera is a must-have, while LIDAR is only optional (from the DTC point of view, where the goal is to localize the victims and assess their health status). We are using the RGB-D OAK camera, but we had not tested it in full darkness, for example (as in FRE2025 Task4, and as is also required by DARPA).

The impression from the stress test of the OAK-D Pro camera is kind of mixed. It worked as expected, but the USB-C cable connector is a real nightmare. The night test was also full of stress: once we switched from testing M03 (red) to M02 (green), used for Task3 detecting fruits on the trees, we found out that it does not work with the „cloned” setup?! Yes, we had to interrupt the great Italian dinner several times, and we are still not sure about the ultimate root cause. M02 used an older Luxonis camera, one of the first OAK-D Pro prototypes, M03 used the latest one, and I also had a replacement camera, which did not start at all?! The theory is that it was caused by power consumption, but it is hard to say now.

Team

The team was a lottery: they did not know me, and I did not know them. My judgement was based on a lecture I gave at CZU three days before Christmas. There were only a few students, but they were interested, and the questions they asked confirmed it. Then I wrote the liebesbrief (love letter), as Simona named it … and they agreed. What I learned last night in a restaurant in Milano was that they were also afraid, but they were curious what it would be like if I led them. Yeah, Milan's stories about me … but I have to admit that we have been playing with mobile robots for quite some time already …

The strongest asset was surely the students' energy to go for it, no matter how difficult or confusing it was at the moment. None of them is a programmer, except that Ondra has some experience with C# and web design. I also learned in Italy that they had passed some courses on databases and data analysis. That helped, I guess. Finally, they already had some experience with OSGAR from Jakub's seminars, so it was not the first time they met the Matty robot.

Simona is a natural leader and Franta leans towards HW, so the division of work, where Franta took over all the cabling, mounting, holders and the sprayer while Simona and Ondra pushed the SW forward to a usable version 0, worked well. The only team member I had issues with was Elena. Her code snippets sometimes looked great, but the devil (devel?) was in the details, like which orientation the robot should follow around the tree.

Finally, reaching good results requires a lot of testing. They invested their free time in it, so they definitely earned the rewards.

For me the turning point was the Robotem Rovně contest, where they worked fully on their own, improved the code during the competition day and reached a better score than I did last year. They said it was very stressful and that they were afraid they would not make it at FRE, where this stress would last for several days. But they did it.

Support

The support from the university and the faculty was great. The financial support for the trip and the large school car simplified the logistics a lot.

Thank you CZU, Milan, Jirka and others.

Contest

I should at least flush out here my emotions/impressions from the contest, re-starting after many years. I liked it a lot. The Italians were very friendly; it was like being at home. The food was great and there was plenty of it. I would actually consider the missing internet connection a plus (probably the others would not, but in case of trouble there was always some workaround). And the tasks, scoring, etc.?

- Task1 is the oldest and it is still a must-have. There was an opportunity to continue with the former Advanced Task by skipping some rows or changing direction, but I am glad that we did not even try (and saved extra development and testing time); as seen with other teams, that was the moment when the robot failed and started to collect extra penalty points.

- Task2 was, in the old days, the Professional Task with weed detection and reporting. Using strawberries was a nice/funny idea, just the execution and validation were a bit weak. I liked our siren, but even that was not enough to distinguish between left and right detections. Even now I am not sure whether it was OK to report strawberries randomly as you passed them and then re-map them on the way back? The detector should be improved (yes Simona, you were right, and thanks for preparing the strawberry2.pt model). Also, detailed scoring and drone videos would be nice, but that will/may come later, I guess.

- Task3 was a completely new one. It was a nice place for visitors, although the performance was poor (except for one team, which did a really great job). Again the scoring was a bit unclear: should a robot that scans only one tree, but properly, be scored higher than robots that scan all trees but make several mistakes? Again, evaluating performance online was hard. BTW, why was the 3D, I mean the z coordinate, ignored, with only x and y evaluated? In the end I was happy that the team decided to report the fruit type by the number of honks after mapping the complete tree.

- Task4 was hard, at least for us. We had not really tested on a large square area in true night with only the UV light turned on. The depth camera had many false detections, causing our robot to avoid collisions with the wall, but it was fun. The ver1 mushrooms were much smaller, so we gave up obstacle detection „a long time ago”, I mean it was at the end of the priority list. Again I am not sure about the scoring, or rather the notification detection. Maybe a large TV could be installed to display progress over time, or the mushrooms could be picked, I do not know. We were also quite exhausted competing at midnight (exactly task4-250611_214721.log plus a 2-hour offset from GMT). Again I am not sure whether a robot watching the field from the starting area without moving should be scored higher than an active driver? But it was fun and very challenging for sensing.

- Task5 is again a standard. I missed the official tables, both the summary and the sub-categories. I am glad the students managed to get the networking running (maybe CULS Robotics was the only team with multiple robots in the demonstration field?) and I am glad ver0 worked.
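A small aside on the Task4 "midnight" remark: the start time can be read straight from the log name. A minimal sketch, assuming (as the text implies) that the name ends with a YYMMDD_HHMMSS stamp in GMT/UTC and that the 2-hour offset is Italian summer time in June; `log_start_utc` and `to_local` are hypothetical helper names.

```python
import re
from datetime import datetime, timedelta, timezone

# Assumption: log names end with a YYMMDD_HHMMSS stamp recorded in UTC (GMT).
LOG_STAMP = re.compile(r"(\d{6}_\d{6})\.log$")


def log_start_utc(filename):
    """Extract the recording start time from a log filename (aware, UTC)."""
    m = LOG_STAMP.search(filename)
    if m is None:
        raise ValueError(f"no timestamp in {filename!r}")
    naive = datetime.strptime(m.group(1), "%y%m%d_%H%M%S")
    return naive.replace(tzinfo=timezone.utc)


def to_local(dt_utc, offset_hours=2):
    """Shift to a fixed offset; +2 h matches Italian summer time in June."""
    return dt_utc.astimezone(timezone(timedelta(hours=offset_hours)))


if __name__ == "__main__":
    start = log_start_utc("task4-250611_214721.log")
    print(start.isoformat())            # 21:47:21 UTC on 11 June 2025
    print(to_local(start).isoformat())  # 23:47:21 local time in Milano
```

So 21:47:21 UTC plus two hours is 23:47:21 local time, i.e. the run really did start just before midnight.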

OK, let's close it now … I see we are approaching the border crossing … so a quick cross-check, so that I can push it all at once when I have electricity and internet.

Neplecha ukončena (Mischief managed)