SubT STIX in Ten Weeks

Robotika.cz Team

DARPA organizes training rounds called STIX (SubT Integration Exercise); the first one takes place in April 2019 in Colorado. Although STIX is for the System Track only, we plan to work on the Virtual Track simultaneously. This is an English blog for the next three months … Update 20/5/2019: CERBERUS and CRAS videos from Colorado

Week 1

23rd January 2019 — official announcement

Last night DARPA officially announced the teams qualified for STIX (SubT Integration Exercise):

So now it is a suitable time to start the blog and report our status. Note that there was a relatively long „prelude” period, which you can read about in Rules and Prelude (both in Czech).

The Robotika.cz team is based on several former Robotour teams:

- ND Team

- Eduro Team

- Cogito Team

- Short Circuits Prague

and members of robotika.cz. We expect the team to evolve, as the DARPA SubT Challenge is scheduled to run for several years …

For STIX in Denver, Colorado we plan to use the robots Robík and Eduro:

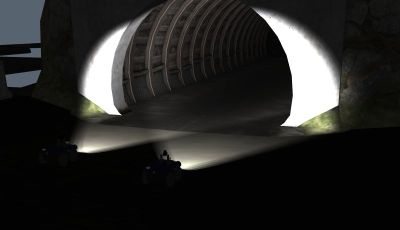

24th January 2019 — Štola Josef

Today we visited Štola Josef (tunnel Josef) as a potential place for testing and preparation for STIX in April. It was a „joint event” with the rival team CRAS (Center for Robotics and Autonomous Systems), which is the second Czech team qualified for the DARPA SubT Challenge STIX. The goal today was to discuss the possibilities and conditions with the tunnel owner.

It was definitely worth it, although there were many failures, starting with a dead battery in the car, a wrong contact number and a dead battery in my camera (I am used to that one, so I had a spare), but also a dead Eduro computer, which I really did not expect and had no backup for. Next time. It was also freezing and the entrance was decorated with jaw-like stalactites.

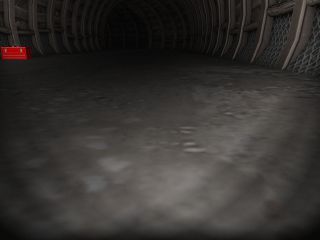

The tunnel was very nice and could surely imitate the Edgar Mine in Colorado. It was actually too nice (during the summer it is open to the public): the rails were below the floor surface or covered by stones, and everything was clean and dry. We learned that dripping water is typical, as are mud and small pools.

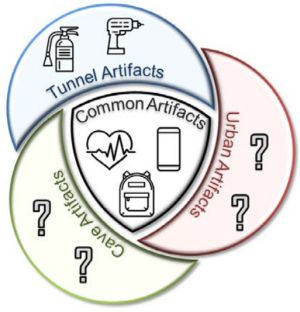

27th January 2019 — STIX Artifacts Specification

The teams for STIX in April 2019 have already received the list of five selected artifacts which will be used. They are grouped into artifacts common to all three events (Tunnel, Urban, Cave) and event-specific ones.

| STIX Artifacts |
|---|
| Drill |
| Fire extinguisher |
| Backpack |
| Cell phone |
| Survivor |

This selection is good for us: our qualification video used a trained network for backpack, person/survivor and fire extinguisher. The processing was relatively slow, so we may start with „detect red pixels” as our first submission to the Virtual Track.

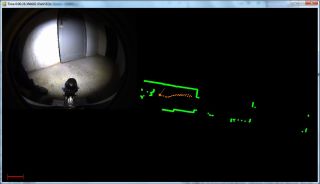

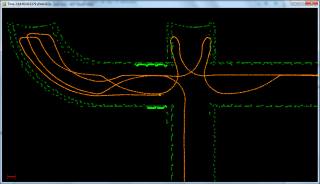

28th January 2019 — Virtual Track - narrow passage

There is a very narrow passage in the virtual qualification world. It is on the left side behind the fire extinguisher (our first successfully reported artifact). Yesterday I placed the X2 robot at -x 158 -y 139 -z -14.8 in order to examine the LIDAR data and check whether the passage is passable for a wheeled machine … and it is!

The robot actually went through, turned right and followed the wall until it saw the red backpack. Because I did not change the strategy, it then turned 180 degrees and tried to return to the original start position (by measuring the distance traveled). The way back did not work. After 3.5 hours of simulation I could see in the terminal that the pose did not change:

```
…
3:29:00.023172 (3.8 32.2 0.0) [('pose2d', 452), ('rot', 453), ('scan', 181)]
3:30:00.016049 (3.8 32.2 0.0) [('pose2d', 458), ('rot', 457), ('scan', 183)]
3:31:00.060268 (3.8 32.2 0.0) [('pose2d', 448), ('rot', 448), ('scan', 179)]
```

After reviewing the output (time, (X, Y, Z) position and counts of OSGAR messages received in the last minute) I could see that the artifact was found after 2 hours:

```
…
1:59:00.000973 (54.7 61.0 0.0) [('pose2d', 418), ('rot', 418), ('scan', 167)]
2:00:00.041863 (55.6 61.0 0.0) [('pose2d', 419), ('rot', 419), ('scan', 168)]
Published 106
Going HOME
2:00:02.348407 turn 90.0
2:00:18.360198 stop at 0:00:00.389300
2:00:18.360198 turn 90.0
2:00:34.706420 stop at 0:00:00.670223
2:01:00.038746 (55.2 61.2 0.0) [('artf', 1), ('pose2d', 405), ('rot', 405), ('scan', 162)]
2:02:00.028133 (54.3 61.2 0.0) [('pose2d', 419), ('rot', 419), ('scan', 168)]
2:03:00.039347 (53.5 61.4 0.0) [('pose2d', 426), ('rot', 425), ('scan', 170)]
…
```

and the robot was stuck 18 minutes later:

```
…
2:14:00.054387 (3.9 45.2 0.0) [('pose2d', 400), ('rot', 401), ('scan', 160)]
2:15:00.018687 (3.9 37.4 0.0) [('pose2d', 388), ('rot', 388), ('scan', 155)]
2:16:00.010633 (3.8 32.7 0.0) [('pose2d', 406), ('rot', 405), ('scan', 162)]
2:17:00.040365 (3.8 32.2 0.0) [('pose2d', 423), ('rot', 424), ('scan', 170)]
2:18:00.053812 (3.8 32.2 0.0) [('pose2d', 457), ('rot', 457), ('scan', 183)]
…
```
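The status lines above have a fixed layout (elapsed time, (X Y Z) position, message counts per stream over the last minute), so spotting the moment the robot got stuck can be automated. A minimal sketch, assuming exactly that text format; `parse_status` and `first_stuck` are hypothetical helpers, not part of OSGAR:

```python
import re
from datetime import timedelta

# Layout of the status lines printed above: elapsed time, (X Y Z) position and
# per-stream message counts over the last minute.
STATUS_RE = re.compile(
    r"^(\d+):(\d+):(\d+)\.(\d+)\s+\(([-\d.]+) ([-\d.]+) ([-\d.]+)\)\s+\[(.*)\]$")


def parse_status(line):
    """Return (elapsed timedelta, (x, y, z), {stream: count}) or None."""
    m = STATUS_RE.match(line.strip())
    if m is None:
        return None  # other output, e.g. "Going HOME" or "turn 90.0"
    h, mi, s, frac = m.group(1, 2, 3, 4)
    elapsed = timedelta(hours=int(h), minutes=int(mi), seconds=int(s),
                        microseconds=int(frac.ljust(6, '0')[:6]))
    xyz = tuple(float(v) for v in m.group(5, 6, 7))
    counts = {name: int(n) for name, n in re.findall(r"\('(\w+)', (\d+)\)", m.group(8))}
    return elapsed, xyz, counts


def first_stuck(lines, tolerance=0.1):
    """Elapsed time of the first status line whose position equals the previous one."""
    prev = None
    for line in lines:
        parsed = parse_status(line)
        if parsed is None:
            continue
        elapsed, xyz, _ = parsed
        if prev is not None and max(abs(a - b) for a, b in zip(xyz, prev)) < tolerance:
            return elapsed
        prev = xyz
    return None
```

Fed with the excerpt above, `first_stuck` points at the 2:18 line, the first minute in which the position did not change at all.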

The position was at the entrance to the narrow passage, but on the other side. It seems to me that the entrance is asymmetric, with a different slope on its left and right side.

Another reason could be the simulation timeout (20 minutes of simulated time): I had to change the distance to the wall from 1.5 meters to 0.8 meters, so the robot automatically moved slower.

Finally, a detail which I realized this morning … I did not change the wall-following parameter for the way back, so it would not have passed through anyway … I guess.
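A simple wall follower makes the relation between wall distance and speed visible. This hypothetical sketch (gains and limits are guesses, not our parameters) uses a proportional controller on the distance error and caps the speed by the desired wall distance and by the free space ahead, so a 0.8 m setting drives slower than 1.5 m:

```python
import math


def follow_wall(wall_dist, front_dist, desired_dist, right_wall=True,
                max_speed=1.0, gain=1.5, max_turn=math.radians(45)):
    """Return (speed in m/s, turn rate in rad/s) for a simple wall follower.

    Hypothetical sketch: proportional steering on the wall-distance error;
    the speed is capped by the desired wall distance and by the free space
    ahead (both in meters, used with an implicit gain of 1/s).
    """
    error = wall_dist - desired_dist        # > 0: too far from the wall
    turn = gain * error                     # left wall: turn left (+) to get closer
    if right_wall:
        turn = -turn                        # right wall: turn right (-) instead
    turn = max(-max_turn, min(max_turn, turn))
    speed = min(max_speed, desired_dist, front_dist - desired_dist)
    return max(0.0, speed), turn
```

With this rule the same code and a smaller clearance means a slower robot, which is one way a change of a single parameter can push a run over a fixed simulation timeout.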

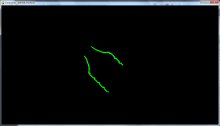

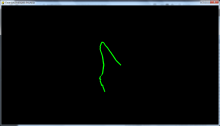

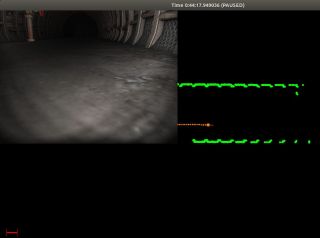

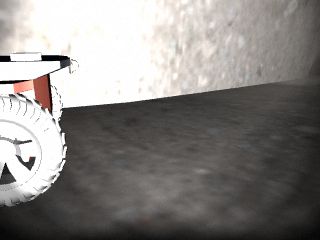

Note that the surface is variable 3D terrain, so properly deciding what to do with a 2D lidar scan only is a challenge.

Week 2

1st February 2019 — Eduro and Robík testing at home

We did a couple of tests of the Eduro and Robík robots at home. I took Eduro to a dark basement with many storage places and narrow passages, and PavelS repeated the tests with the osgar.go1m code to verify the OSGAR driver functionality. Both log sets are available for download:

robik-190130-hallway.zip and eduro-190129-basement.zip (my mistake with the zip file name for Robík: it should be 190129, like the log files inside).

If you would like to visualize the data, you can use osgar.tools.lidarview, which now provides visualization of scan, pose2d and lidar data (#132).

It did not work very well (probably no surprise). The entrances were too narrow, so Eduro decided to turn around instead. What was worse, it stopped in the corner without any further activity; that has to be fixed (we probably have a similar issue in the Virtual Track).

The test of Robík was slightly better. We learned that it is necessary to wait until the LIDAR rotation speed stabilizes and the data with orientation are ready. Also, speed ramping was not working properly in the low-level SW, so we may need to add a wrapper in the OSGAR driver. Tonight we plan to test and integrate the OpenCV driver for the camera (#134), and then we should be ready to use the same high-level SW for both our SubT robots.
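A speed-ramping wrapper of this kind could look like the following hypothetical sketch (the class name and interface are illustrative, not the OSGAR driver API): it limits how much the commanded speed may change per control step.

```python
class SpeedRamp:
    """Limit the acceleration of the commanded speed (hypothetical wrapper)."""

    def __init__(self, max_accel):
        self.max_accel = max_accel  # m/s^2
        self.speed = 0.0            # last speed actually sent to the robot

    def update(self, desired_speed, dt):
        """Move the output towards desired_speed by at most max_accel * dt."""
        max_step = self.max_accel * dt
        delta = desired_speed - self.speed
        delta = max(-max_step, min(max_step, delta))
        self.speed += delta
        return self.speed
```

With `max_accel=0.5` and a 0.1 s control period, a jump from 0 to 0.2 m/s is spread over four steps of 0.05 m/s; the same limit applies to braking.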

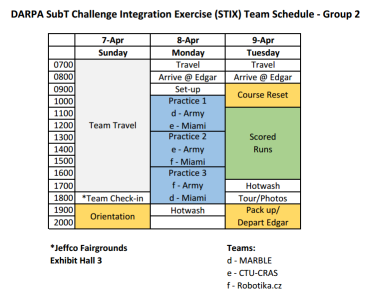

3rd February 2019 — STIX Group Assignments

Yesterday we received the e-mail STIX Group Assignments, and my first reaction was „why of all teams are we the B-team?” (in Czech „proč zrovna my jsme Béčko”), inspired by Jára Cimrman/Posel z Liptákova. We had decided to buy the plane tickets on 31st January, because we were warned that it was a Lufthansa promotional price which would change on 1st February … and it went up by 5000 CZK, but … never mind. I no longer complain that we will test in Colorado together with the second Czech team CRAS … it could be fun.

Group 1

- CSIRO

- Pluto (Pennsylvania Laboratory for Underground Tunnel Operations)

- CERBERUS (CollaborativE walking & flying RoBots for autonomous ExploRation in Underground Settings)

Group 2

Group 3

- CoStar JPL/Caltech/MIT team-CoSTAR (Collaborative SubTerranean Autonomous Resilient Robots)

- Explorer

- CRETISE

p.s. As I was looking through the list of teams to find their URLs, I often saw „funded by DARPA”, so I checked the older announcement DARPA Selects Teams to Explore Underground Domain in Subterranean Challenge (2018-09-26):

DARPA has selected seven teams to compete in the funded track of the Systems competition:

- Carnegie Mellon University (Explorer)

- Commonwealth Scientific and Industrial Research Organisation, Australia (CSIRO)

- iRobot Defense Holdings, Inc. dba Endeavor Robotics (CRETISE)

- Jet Propulsion Laboratory, California Institute of Technology (CoStar)

- University of Colorado, Boulder (MARBLE)

- University of Nevada, Reno (CERBERUS)

- University of Pennsylvania (Pluto)

… so it is 7 sponsored teams + 2 Czech teams … hmm … interesting.

The Carnegie Mellon team, including a key member from Oregon State University, is one of seven teams that will receive up to $4.5 million from DARPA to develop the robotic platforms, sensors and software necessary to accomplish these unprecedented underground missions.

(source)

Week 3

7th February 2019 — OSGAR v0.2.0 Release

Yesterday we released OSGAR v0.2.0. It contains all the HW drivers necessary for both the Eduro and Robík robots and the new osgar.tools.lidarview for log file analysis and debugging. There are also several small features, like the base class Node for easier creation of new nodes and drivers, the environment variable OSGAR_LOGS to define where the logs are stored, and a launcher for faster application prototyping.
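As an illustration of how an environment variable such as OSGAR_LOGS can select the log storage place, here is a hypothetical helper (not OSGAR's code) that builds names in the prefix-YYMMDD_HHMMSS.log style used by the log files in this blog:

```python
import os
from datetime import datetime


def log_filename(prefix, now=None):
    """Build a log path like 'subt-190204_192711.log', inside $OSGAR_LOGS if set.

    Hypothetical helper: it only mimics the naming visible in this blog and is
    not OSGAR's own implementation.
    """
    now = now or datetime.now()
    name = '{}-{}.log'.format(prefix, now.strftime('%y%m%d_%H%M%S'))
    directory = os.environ.get('OSGAR_LOGS')
    return os.path.join(directory, name) if directory else name
```

Without the variable the file lands in the current directory; with it, all robots and tools can agree on one place for logs without changing any configuration file.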

From the programmer's point of view, LogReader is now directly an iterator, so it can be used like a file returned by open():

```python
from osgar.logger import LogReader

for timestamp, stream_id, data in LogReader(filename, only_stream_id=LIDAR_ID):  # LIDAR_ID: lidar stream id
    print(timestamp, len(data))
```

There were also a couple of tweaks motivated by the SubT Challenge, primarily by the Virtual Track. As the simulation can take several hours, there is a fix for time overflow (the resolution is in microseconds and the limit used to be 1 hour). The TCP driver now also supports various options necessary for communication with ROS. The release of the ROSProxy node implementation was postponed to the next release, mainly to simplify the necessary user configuration.
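A limit of roughly one hour is what you get if the elapsed time is stored as an unsigned 32-bit count of microseconds. That storage format is an assumption for illustration, not a description of the OSGAR log format; the sketch shows the arithmetic and one way to unwrap such timestamps:

```python
from datetime import timedelta

WRAP = 2 ** 32                        # values an unsigned 32-bit counter can hold
LIMIT = timedelta(microseconds=WRAP)  # about 1 h 11 min 35 s of log time


def to_uint32(us):
    """What a uint32 field keeps of a microsecond offset (it silently wraps)."""
    return us % WRAP


def unwrap(raw_values):
    """Rebuild monotonic microsecond offsets from wrapped uint32 values.

    Assumes records are in time order and consecutive records are less than
    LIMIT apart, so every decrease means exactly one overflow.
    """
    offset, prev, result = 0, None, []
    for raw in raw_values:
        if prev is not None and raw < prev:
            offset += WRAP  # the counter overflowed since the previous record
        result.append(offset + raw)
        prev = raw
    return result
```

For a multi-hour simulation the safer fix is simply a wider field, because unwrapping fails if a stream is silent for longer than the wrap period.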

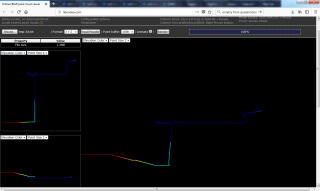

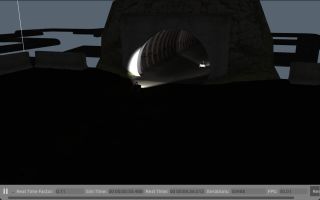

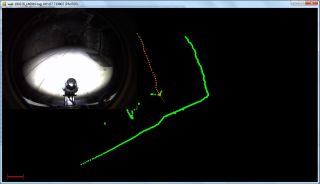

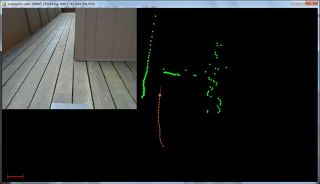

The most visible progress is on lidarview:

If you would like to replay this test, the log file is available here and you can visualize it with:

```
python3 -m osgar.tools.lidarview subt-190204_192711.log --lidar rosmsg_laser.scan --camera rosmsg_image.image --poses app.pose2d
```

You can use 'c' to toggle the camera on/off, +/- for zooming and SPACE for pause. A simple map built from the point cloud is displayed in camera-off mode.
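The camera-off map is built from a point cloud. A minimal sketch of the underlying step, projecting one lidar scan into world coordinates from the robot pose, follows. The scan layout (evenly spaced rays from right to left, ranges in millimeters, 0 = no return, 270 degree field of view) and the pose units (meters, radians) are assumptions, not lidarview internals:

```python
import math


def scan_to_points(scan_mm, pose, fov_deg=270.0, max_range_mm=10000):
    """Convert one planar lidar scan into world (x, y) points in meters.

    Assumed layout: rays evenly spaced from -fov/2 (right) to +fov/2 (left),
    0 meaning "no return"; pose = (x, y, heading) in meters and radians.
    """
    x0, y0, heading = pose
    n = len(scan_mm)
    fov = math.radians(fov_deg)
    step = fov / max(n - 1, 1)
    points = []
    for i, dist in enumerate(scan_mm):
        if dist <= 0 or dist > max_range_mm:
            continue  # skip missing or out-of-range returns
        angle = heading - fov / 2 + i * step
        r = dist / 1000.0
        points.append((x0 + r * math.cos(angle), y0 + r * math.sin(angle)))
    return points
```

Accumulating the points of every scan at its pose gives exactly the kind of simple map shown above; any odometry error shows up as walls that are doubled or bent.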

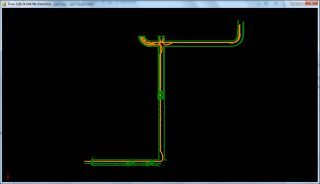

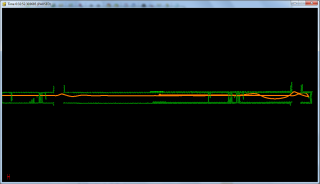

9th February 2019 — Virtual Track - two X2 robots

Last night we ran a simulation with two X2 robots (small and fast ground vehicles). Running OSGAR ROS Proxy in two instances needed small tweaks (extra ports), but in the end it worked! And it was definitely more interesting to watch than TV … but everything is, so no big deal.

The strategy was simple: one robot (X2L) followed the left wall while the other (X2R) followed the right wall. The code was identical to version 0: follow the wall until you find an artifact, turn 180 degrees and follow the other wall for the previously measured distance. This way the robot should return close to the starting area, and the report to the Base Station should succeed.
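Version 0 fits into a tiny state machine. This is a hypothetical sketch of the behavior described above, not the actual OSGAR application code; the action names are made up for illustration:

```python
class ReturnV0:
    """Explore along one wall, turn at the first artifact, return along the other wall."""

    def __init__(self, side='right'):
        self.side = side          # wall followed on the way out
        self.state = 'explore'
        self.traveled = 0.0       # meters driven while exploring
        self.return_left = 0.0    # meters still to drive on the way back

    def step(self, dist, artifact_seen, turn_done=False):
        """Advance one control step; dist = meters driven since the last call."""
        if self.state == 'explore':
            self.traveled += dist
            if artifact_seen:
                self.state = 'turn'
                return 'turn_180'
            return 'follow_' + self.side
        if self.state == 'turn':
            if not turn_done:
                return 'turn_180'
            self.state = 'return'
            self.return_left = self.traveled  # the way back is as long as the way out
        if self.state == 'return':
            self.return_left -= dist
            if self.return_left <= 0:
                self.state = 'home'
                return 'report'
            other = 'left' if self.side == 'right' else 'right'
            return 'follow_' + other
        return 'stop'
```

The weak point is visible right in the code: the return leg only counts meters, so any dead-end branch explored on the way out is driven again on the way back.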

After a few screenshots I went to bed … and one robot finished the simulation in almost 3 hours, the other in 5 hours. Both were convinced they had reached the start, which was not quite true in reality … and now it is time to review the log files.

Well, the left robot did fine, except that when it reached the start area, the entrance was detected as a wall (maybe a bug in the world?) and it went back a couple of meters. But the base station was close, so the report was delivered (theoretically).

The right robot „overlooked” the valve artifact and continued to the red backpack. But it did not make it back, probably due to the 20-minute simulation-time timeout.

It was interesting to see on the generated map how difficult it is to judge whether a tunnel is blocked (dead end) or the LIDAR view is only temporarily blocked by robot tilt.

On the generated map you can see that the integrated error is not too big, approximately 1 meter, so it is sufficient for a report with a 5-meter precision radius.

BTW, why are we „wasting time” with the Virtual Track when we have a sharp System Track deadline?! Well, we will probably use the same or a very similar strategy, and because the robots are not here at the moment, we can at least prepare for the Virtual Track qualification (locate 8 artifacts in 20 minutes of simulation time by the end of April 2019).

p.s. after Jirka's parameter tuning both robots returned to the start area, but … somehow it looks like they „hit the wall”.

Note that the X2L simulation took only 47 minutes to return with the artifact position, while X2R timed out after 6 hours!? Well, X2L went straight until it classified the other robot as an artifact and returned home.

Week 4

12th February 2019 — JPEG Red Alert!

We played with artifact classification over the weekend, and when we tried to replay the detection of the fire extinguisher, it failed:

```
M:\git\osgar\examples\subt>python -m osgar.replay --module detector subt-190208_200349.log
b"['subt.py', 'run', 'subt-x2-right.json', '--note', '2x X2_SENSOR_CONFIG_1, right, commented out asserts for name size']"
['app.desired_speed', 'app.pose2d', 'app.artf_xyz', 'detector.artf', 'ros.emergency_stop', 'ros.pose2d', 'ros.cmd_vel', 'ros.imu_data', 'ros.imu_data_addr', 'ros.laser_data', 'ros.laser_data_addr', 'ros.image_data', 'ros.image_data_addr', 'ros.odom_data', 'ros.odom_data_addr', 'timer.tick', 'tcp_cmd_vel.raw', 'tcp_imu_data.raw', 'tcp_laser_data.raw', 'tcp_image_data.raw', 'tcp_odom_data.raw', 'rosmsg_imu.rot', 'rosmsg_laser.scan', 'rosmsg_image.image', 'rosmsg_odom.pose2d']
['image'] ['artf']
{24: 'image'}
{4: 'artf'}
Exception in thread Thread-1:
Traceback (most recent call last):
  File "D:\WinPython-64bit-3.6.2.0Qt5\python-3.6.2.amd64\lib\threading.py", line 916, in _bootstrap_inner
    self.run()
  File "M:\git\osgar\osgar\node.py", line 40, in run
    self.update()
  File "M:\git\osgar\examples\subt\artifacts.py", line 42, in update
    channel = super().update() # define self.time
  File "M:\git\osgar\osgar\node.py", line 32, in update
    timestamp, channel, data = self.bus.listen()
  File "M:\git\osgar\osgar\bus.py", line 81, in listen
    channel = self.inputs[stream_id]
KeyError: 4
```

Hmm, that is really strange — there was no artifact reported at that time?!

Note that record & replay is the key feature of OSGAR: you record your log file as the robot navigates and later, typically on your notebook, you can analyze various „what happened?” situations. The prerequisite is that the replay is identical to the original run. In this particular example, the SubT Virtual Track simulation ran on a different notebook with Ubuntu and ROS.
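The replay requirement can be expressed as a check: feed the recorded inputs to the node again and compare what it publishes with what was recorded. A hypothetical sketch (not the osgar.replay implementation), where a log is a list of ('in' | 'out', channel, data) entries and a node is a function returning the (channel, data) pairs it publishes:

```python
from itertools import zip_longest


def first_divergence(log, node):
    """Replay recorded inputs through `node`; return the first mismatch or None."""
    expected = [(ch, data) for direction, ch, data in log if direction == 'out']
    produced = []
    for direction, ch, data in log:
        if direction == 'in':
            produced.extend(node(ch, data))
    for i, (got, want) in enumerate(zip_longest(produced, expected)):
        if got != want:
            return i, got, want  # None on one side means a missing message
    return None


def red_detector(channel, pixels):
    """Toy detector: publish 'artf' when at least two (R, G, B) pixels are red."""
    red = sum(1 for r, g, b in pixels if r > 150 and g < 80 and b < 80)
    return [('artf', red)] if red >= 2 else []
```

If the recorded image had a pixel with R=151 but the replay machine decodes it as 150, the toy detector stays silent and `first_divergence` reports the missing 'artf' message: the same class of mismatch that broke our replay.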

The simplified artifact detection is split into two phases:

- search for sufficiently red objects

- if you find a local red maximum, run the classification

So what happened was that the redness of the JPEG-compressed image was different on each machine: if we classify pixels as RED, their count differs!
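A minimal sketch of the first phase shows why a tiny decoding difference matters. The thresholds and the `red_mask` / `count_red` helpers are hypothetical, and the image is assumed to be a BGR uint8 array as returned by OpenCV; a pixel that sits exactly on a threshold flips class when one decoder produces a value one unit higher:

```python
import numpy as np


def red_mask(image_bgr, min_red=100, ratio=1.5):
    """Boolean mask of 'sufficiently red' pixels in a BGR uint8 image.

    Hypothetical rule: red channel above min_red and more than `ratio`
    times both the green and the blue channel.
    """
    img = image_bgr.astype(np.float32)  # avoid uint8 overflow in the products
    b, g, r = img[..., 0], img[..., 1], img[..., 2]
    return (r > min_red) & (r > ratio * g) & (r > ratio * b)


def count_red(image_bgr, **kwargs):
    return int(red_mask(image_bgr, **kwargs).sum())


# Two decodings of the "same" JPEG that differ by one unit in a single value
img = np.zeros((1, 2, 3), dtype=np.uint8)
img[0, 0] = (40, 40, 120)   # clearly red
img[0, 1] = (50, 80, 120)   # R == 1.5 * G: just below the threshold
other = img.copy()
other[0, 1, 2] = 121        # another decoder rounds R up by one
print(count_red(img), count_red(other))  # prints: 1 2
```

On real 320x240 images many pixels sit near such a boundary, so the counts, and therefore the local maxima handed to the classifier, can differ between machines.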

It is yet another detail you should check before you deploy the code on the target machine. Surprisingly, the newly added unit tests for counting red pixels in a decompressed JPEG image pass on the machine with GPU support (our primary simulation platform).

This has to be investigated, and we surely also have to check the installations on the Eduro and Robík robots.

Fire Extinguisher | Backpack

And one joke at the end: this is a recording from the run when the two X2 robots met. You would guess that they are blue, but they obviously are not.


p.s. note that these are 1:1 output images from the ROS simulation, topic /X2/camera_front/image_raw/compressed with X2_SENSOR_CONFIG_1 and resolution 320x240.

p.s.2 Loading JPG files gives different results in 3.4.0 and 3.4.2 … and yes, our versions differ: 3.4.4 on the ROS notebook, 3.4.0 on the simulation machine with GPU and 3.3.0 on my old notebook …

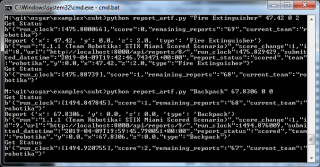

14th February 2019 — Return strategy ver1

Yesterday we tried to complete the Virtual Track with two X2 robots returning after detection of the first artifact. If I ignore some typo details, both robots returned and sent these reports:

```
TYPE_VALVE 231.74 -60.89 -14.80
TYPE_EXTINGUISHER 157.37 138.87 -15.09
```

But the Base Station did not accept them for some reason, so it is still a work in progress, again.

Last night we also discussed a faster return strategy. The current version 0 simply follows the wall back for the given distance traveled. This means that if there is a dead-end branch, the robot will go there and repeat the same trajectory.

In version 1 we plan to use shortcuts: there must be a pose from the way there (if not, you are already at the start), so pick the nearest/oldest one within a given radius (say 2 meters). The odometry corrected by the IMU should be good enough (it must be, for the basic mapping of the artifacts) to help the robot navigate back. Moreover, we may use VFH (Vector Field Histogram) for navigation to compensate for a potential shift, and the old poses would define the direction to return HOME along the shortest path, right?

Do you like it? Do you see some weak point? Well, do not worry, we will share it with you when we „hit the wall” …

p.s. note that this strategy can be used for both the Virtual and the System Track.
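The shortcut idea fits in a few lines. This is a hypothetical illustration under the assumptions above (poses recorded on the way out, 2-meter radius), not the final implementation: from the current pose, jump to the oldest recorded pose within the radius and repeat until the start is reached.

```python
import math


def shortcut_path(trajectory, radius=2.0):
    """Waypoints from the last pose of `trajectory` back to its first pose.

    From the current pose jump to the OLDEST recorded pose within `radius`;
    if none is that close, fall back to the previous pose so the walk always
    makes progress. Dead-end branches are skipped whenever their entrance and
    exit lie within `radius` of each other.
    """
    i = len(trajectory) - 1
    path = [trajectory[i]]
    while i > 0:
        x, y = trajectory[i]
        i = next((k for k in range(i)
                  if math.hypot(trajectory[k][0] - x, trajectory[k][1] - y) <= radius),
                 i - 1)
        path.append(trajectory[i])
    return path


# Out along x, a dead-end branch at x=3 (up to y=3 and back), then on to x=7
trajectory = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (3, 2), (3, 3),
              (3, 2), (3, 1), (3, 0.1), (4, 0), (5, 0), (6, 0), (7, 0)]
print(shortcut_path(trajectory))  # the branch at x=3 is not revisited
```

The resulting waypoints only give the direction home; a local planner such as VFH would still be needed to drive between them safely.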

17th February 2019 — XP Mission 2 completed

OK, we moved a bit forward in the Virtual Track: two artifacts detected and successfully reported to the Base Station:

```
0 0 starting
15 991000000 scoring_started
477 328000000 new_artifact_reported
477 328000000 modified_score 1
477 328000000 new_total_score 1
684 329000000 new_artifact_reported
684 329000000 modified_score 1
684 329000000 new_total_score 2
```

As usual, it was not that straightforward. The run was based on two X2 robots (X2L and X2R), where the left one reached the "fire extinguisher" as before, while the right one should see "the valve" (and later a backpack). We switched to full image resolution (1280x960), so the image was 16 times larger than at low resolution (320x240). Originally the valve was detected by accident (its wheel is red but relatively small), so we turned this into a feature, but … its position was wrong by approximately 9 meters. The valve was on the opposite side of the tunnel and the red part was visible only from a narrow range of angles. The simplest solution was to skip this artifact and continue to the red backpack to get the second point.

Note that the scoring scheme changed recently, so 2 points now are more than 4 points were before:

… and Jirka is right that it may look like a regression. The game engine used to apply scale factors for precision and reporting time, so those 4 points were actually 1 artifact, but reported with higher precision and in a shorter time.
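The scoring logs in this post are sequences of records `<seconds> <nanoseconds> <event> [value]`; reading the first two columns as simulation seconds and nanoseconds is an assumption. A small hypothetical parser turns them into the final score and the report times, and it accepts both one record per line and the run-together form:

```python
# Events that carry an extra integer value after their name (as seen in the logs)
VALUED_EVENTS = {'modified_score', 'new_total_score'}


def parse_score_log(text):
    """Parse '<sec> <nsec> <event> [value]' records into (time, event, value)."""
    tokens = text.split()
    events, i = [], 0
    while i + 2 < len(tokens):
        t = int(tokens[i]) + int(tokens[i + 1]) / 1e9
        name = tokens[i + 2]
        i += 3
        value = None
        if name in VALUED_EVENTS:
            value = int(tokens[i])
            i += 1
        events.append((t, name, value))
    return events


def summarize(events):
    """Return (final score, report times in seconds after scoring_started)."""
    start = next(t for t, name, _ in events if name == 'scoring_started')
    score, reports = 0, []
    for t, name, value in events:
        if name == 'new_total_score':
            score = value
        elif name == 'new_artifact_reported':
            reports.append(t - start)
    return score, reports
```

For the XP Mission 2 run this gives 2 points, with the reports about 461 s and 668 s after scoring started.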

Week 5

23rd February 2019 — The Valve and Backtracking

Again, at least some news from the virtual world (there are also some updates in the System Track, but they are not very exciting yet; there should be a summary tomorrow??).

The mission for this week was to properly detect the position of the valve (last week it was off by several meters) and score 3 points. Later on we changed the sub-goal to still report only the very first approached artifact (left: extinguisher, right: valve) and get a slightly better score by returning to the start faster. Moreover, we implemented „shortcuts” on the way back, so the robot no longer follows the other wall after the 180-degree turn but uses the pruned trajectory to the base station area. It improves the score time by only 20 seconds, but it is still an improvement.

Original score with the valve detection:

```
0 0 starting
15 842000000 scoring_started
445 243000000 new_artifact_reported
445 243000000 modified_score 1
445 243000000 new_total_score 1
476 238000000 new_artifact_reported
476 238000000 modified_score 1
476 238000000 new_total_score 2
```

Optimized return with backtracking:

```
0 0 starting
15 996000000 scoring_started
435 375000000 new_artifact_reported
435 375000000 modified_score 1
435 375000000 new_total_score 1
455 372000000 new_artifact_reported
455 372000000 modified_score 1
455 372000000 new_total_score 2
```

Also, a new approach to artifact localization is used: it combines LIDAR data with the camera. The image is used to recognize that we are approaching an artifact of some type, and the LIDAR scan is used to determine the precise angle and distance relative to the robot. All three classified artifacts (fire extinguisher, backpack and valve) are high enough to be visible in the scan as well. Precise calibration is omitted at the moment (the camera field of view is 60 degrees, but we ignore the fact that it is pointing down), and a +/-5 degree tolerance is used to find the nearest obstacle.

Note that this method depends on the artifact type: as already observed, neither the electrical box nor the phone is visible to the horizontal LIDAR.
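The camera-plus-LIDAR localization can be sketched as two steps: convert the image column of the detection to a bearing using the 60 degree field of view (pinhole model, camera tilt ignored as in the text), then take the nearest LIDAR return within +/-5 degrees of that bearing. The scan layout (evenly spaced rays over 270 degrees, 0 = no return) and the function names are assumptions:

```python
import math


def column_to_bearing(column, image_width, fov_deg=60.0):
    """Horizontal bearing in degrees (positive = left) of an image column."""
    focal = (image_width / 2) / math.tan(math.radians(fov_deg) / 2)
    return math.degrees(math.atan2(image_width / 2 - column, focal))


def nearest_in_sector(scan, bearing_deg, tolerance_deg=5.0, fov_deg=270.0):
    """Closest valid range within +/- tolerance of the bearing, as (dist, angle_deg).

    Assumes rays evenly spaced from -fov/2 (right) to +fov/2 (left) and
    0 meaning "no return". Returns None when nothing is in the sector.
    """
    step = fov_deg / max(len(scan) - 1, 1)
    best = None
    for i, dist in enumerate(scan):
        angle = -fov_deg / 2 + i * step
        if dist > 0 and abs(angle - bearing_deg) <= tolerance_deg:
            if best is None or dist < best[0]:
                best = (dist, angle)
    return best
```

Given the robot pose (x, y, heading), the artifact position then follows as x + dist·cos(heading + angle), y + dist·sin(heading + angle), which is also why artifacts below the LIDAR plane need a different method.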

- Video from X2L, detecting fire extinguisher, seeing X2R returning home

- Video from X2R, detecting the valve

25th February 2019 — Three Artifacts and Eduro/Robík Status

I wish the world of real robots were as simple as the simulated one. Together with Jirka we pushed version 2 for the Virtual Track a bit further and let the robots continue until the timeout occurs. While the right-wall-following robot visits the valve and the backpack and sees the white electrical box (not classified yet) from a distance, the left-wall-following robot ends up near the fire extinguisher and fails to enter the narrow passage. After the timeout it returns along the old path.

```
0 0 starting
15 797000000 scoring_started
582 173000000 new_artifact_reported
582 173000000 modified_score 1
582 173000000 new_total_score 1
760 177000000 new_artifact_reported
760 177000000 modified_score 1
760 177000000 new_total_score 2
760 177000000 new_artifact_reported
760 177000000 modified_score 1
760 177000000 new_total_score 3

$ less examples/subt/call_base_x2l.txt
TYPE_EXTINGUISHER 155.89 141.26 -14.83
$ less examples/subt/call_base_x2r.txt
TYPE_VALVE 239.55 -57.94 -14.91
TYPE_BACKPACK 339.50 -21.94 -19.87
```

Note that the positions are relative to the initial robot positions, which are -x 2 -y 1 -z 0.2 for the left robot and -x 2 -y -1 -z 0.2 for the right one.

So that was the easy virtual world, where you do not have to leave the comfort of your living room and can watch the simulation like TV.

Now the System Track. There has been some progress on both robots, Eduro and Robík. The main driving force is the whole-day test in tunnel Josef scheduled for Feb 28th.

Eduro is stripped down to a minimal construction, but it still does not fit into the transportation box for the trip to Colorado. The IMU is mounted on top of the robot, so now we should get some reasonable results. If I ignore the issues with the power-up sequence, then the second biggest issue is the TiM 571 LIDAR. It works fine, but at the edges of obstacles it generates many false reflections, causing even the wall-following algorithm to panic (obstacle is too close, what should I do?!).
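One common way to suppress such edge reflections (often called mixed pixels) is to drop isolated points that differ strongly from both neighbors. This is a hypothetical filter, not what Eduro runs, and the 300 mm jump threshold is a guess; note that it would also remove genuine objects only one ray wide:

```python
def remove_edge_artifacts(scan, max_jump=300):
    """Replace isolated lidar points with 0 (= no return).

    A point whose range differs by more than max_jump (same units as the
    scan, e.g. mm) from BOTH neighbors is treated as a false return at an
    obstacle edge, typically lying between the near and the far surface.
    """
    out = list(scan)
    for i in range(1, len(scan) - 1):
        prev, cur, nxt = scan[i - 1], scan[i], scan[i + 1]
        if cur > 0 and abs(cur - prev) > max_jump and abs(cur - nxt) > max_jump:
            out[i] = 0
    return out
```

The filter looks only at the original scan, so removing one point never changes the decision for its neighbors.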

Another problem was the amount of data from the IP camera. We use a high-resolution camera, and to limit the size of the log files we set the config parameter sleep … and it did not help:

```
python -m osgar.logger --stat e:\eduro\190224\myapp-190224_175122.log
 0 sys                       5374 |    21 |   0.3Hz
 1 app.desired_speed          112 |    32 |   0.4Hz
 2 eduro.can                11480 |  1435 |  18.7Hz
 3 eduro.encoders            9866 |  1434 |  18.6Hz
 4 eduro.emergency_stop         0 |     0 |   0.0Hz
 5 eduro.pose2d             12250 |  1434 |  18.6Hz
 6 eduro.buttons               26 |     1 |   0.0Hz
 7 can.can                  95832 | 11395 | 148.1Hz
 8 can.raw                  11516 |  1441 |  18.7Hz
 9 serial.raw               82465 |  2647 |  34.4Hz
10 lidar.raw                12274 |   646 |   8.4Hz
11 lidar.scan             1426248 |   645 |   8.4Hz
12 lidar_tcp.raw          2317317 |  2581 |  33.5Hz
13 slope_lidar.raw          12540 |   660 |   8.6Hz
14 slope_lidar.scan        453779 |   659 |   8.6Hz
15 slope_lidar_tcp.raw    1300052 |  1389 |  18.1Hz
16 camera.raw           185714842 |  1079 |  14.0Hz
17 imu.orientation         347136 |  3072 |  39.9Hz
18 imu.rot                  28358 |  3072 |  39.9Hz
19 imu_serial.raw          386786 |  5910 |  76.8Hz
Total time 0:01:16.930032
```

It is patched now, but not tested yet.
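The statistics make the problem obvious with a little arithmetic. The numbers below are copied from the `--stat` output above; the script only computes the camera share of the log, the average frame size and the hourly data rate:

```python
# (bytes, messages) per stream, copied from the --stat output above
STATS = {
    'sys': (5374, 21), 'app.desired_speed': (112, 32),
    'eduro.can': (11480, 1435), 'eduro.encoders': (9866, 1434),
    'eduro.emergency_stop': (0, 0), 'eduro.pose2d': (12250, 1434),
    'eduro.buttons': (26, 1), 'can.can': (95832, 11395),
    'can.raw': (11516, 1441), 'serial.raw': (82465, 2647),
    'lidar.raw': (12274, 646), 'lidar.scan': (1426248, 645),
    'lidar_tcp.raw': (2317317, 2581), 'slope_lidar.raw': (12540, 660),
    'slope_lidar.scan': (453779, 659), 'slope_lidar_tcp.raw': (1300052, 1389),
    'camera.raw': (185714842, 1079), 'imu.orientation': (347136, 3072),
    'imu.rot': (28358, 3072), 'imu_serial.raw': (386786, 5910),
}
DURATION_S = 76.930032  # "Total time 0:01:16.930032"

total = sum(size for size, _ in STATS.values())
camera_bytes, camera_frames = STATS['camera.raw']
share = camera_bytes / total               # fraction of the log taken by the camera
frame_size = camera_bytes / camera_frames  # average bytes per image
rate = camera_bytes / DURATION_S           # bytes per second
print(f'camera: {share:.1%} of the log, {frame_size / 1000:.0f} kB/frame, '
      f'{rate * 3600 / 1e9:.1f} GB per hour')
```

The camera is about 96.6 % of the log, roughly 172 kB per frame and close to 9 GB per hour of recording, so the camera stream is what has to be throttled.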

Regarding the robot Robík, we had a 2-hour call with Blansko/ND Team and hopefully sorted out most of the critical issues in the OSGAR cortexpilot driver. The robot was then running the calibration figure 8, and we should see more results tonight.

Week 6

28th February 2019 — Czech STIX

Today we (the Robotika.cz team) tested together with the CRAS (Center for Robotics and Autonomous Systems) team in tunnel Josef. It was a real STIX (integration exercise), as we ported and tested the code from Eduro and the Virtual Track on the robot Robík.

At the end we executed a scenario where both robots ran the osgar.explore code, and the only difference was that Robík followed the left wall while Eduro followed the right one. Yes, it ended in the hole of a mine railroad switch, but we still consider it a success.

The primary goal, besides integration, was data collection, and this particular log with odometry, IMU, horizontal and slope LIDARs and camera is available here: wall-190228_140910.log.

3rd March 2019 — The Electrical Box = Graveyard of Robots

Today we were „relaxing” with the Virtual Track again (yes, I know that the flight to Denver, Colorado is exactly one month from today). The goal for this sprint is to collect the 4th artifact, and the proposal was to locate the electrical box at the far end of the tunnel (x=400m). And, as usual, it was not as trivial as it may look:

- the box is invisible for LIDAR (too low)

- the white color is everywhere due to strong robot light

- the box is blocking the road

And the result? Graveyard of robots (Jirka's copyright):

Now there is a collision detector (as a backup solution), a check of the simulation time (in 10 minutes the robots should return home), and besides RED objects we now also look for rectangular WHITE objects.
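A collision detector of this kind can be based on the mismatch between commanded and actual motion. A hypothetical sketch (window length and thresholds are guesses): if the desired speed stays non-zero but odometry shows almost no progress over the last few seconds, the robot is assumed to have hit something, such as a box below the horizontal LIDAR:

```python
import math
from collections import deque


class CollisionDetector:
    """Report a collision when the robot is driven forward but does not move."""

    def __init__(self, window=3.0, min_cmd=0.1, min_progress=0.1):
        self.window = window              # seconds of history to evaluate
        self.min_cmd = min_cmd            # m/s; below this we are not "driving"
        self.min_progress = min_progress  # meters expected within the window
        self.samples = deque()            # (time, x, y) odometry samples

    def update(self, t, x, y, desired_speed):
        if abs(desired_speed) < self.min_cmd:
            self.samples.clear()          # stopping on purpose is not a collision
            return False
        self.samples.append((t, x, y))
        while t - self.samples[0][0] > self.window:
            self.samples.popleft()        # drop samples older than the window
        t0, x0, y0 = self.samples[0]
        if t - t0 < 0.9 * self.window:
            return False                  # not enough history yet
        return math.hypot(x - x0, y - y0) < self.min_progress
```

In simulation a wheel slipping against an obstacle may still report some odometry motion, so a pose source that is independent of the wheels makes such a detector more reliable.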

Week 7

10th March 2019 — The Toolbox, narrow passage, 7 artifacts and Eduro upgrade

There is some good news from both the Virtual and the Real World. After adding detection of the toolbox and collision detection with the electrical box, and setting the exploration time to the maximum, we were able to collect 5 points (5 artifacts). Then a new local planner helped a lot to guide the robots through the narrow passage as well (actually passages: there are at least two in the qualification world), and suddenly we reached 7 points (there is another backpack and toolbox behind the narrow passage). Now all submissions are in the pending state … 8 points is the limit for qualification, so we are almost there. See the videos of both robots:

Left X2 robot

Right X2 robot

Eduro pictures

Week 8

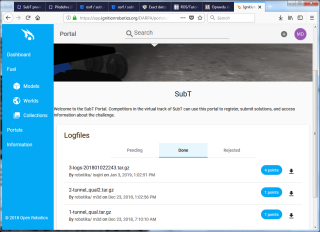

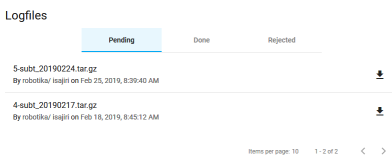

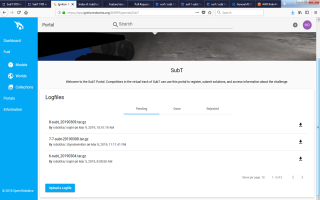

14th March 2019 — The Radio, 8 points, "sRA SCdevicestate 2" and subt-artf repository

There are a couple of small news items, some good, some bad …

I would start with the Virtual Track, where we have good news. We have extended our detector and classifier, and now we can report TYPE_RADIO. See the picture. In case you missed it, it is the small yellow blob on the right side. Yes, and from the other side it is brown. But it is blinking, so maybe an alternative would be to turn the lights off and wait …

The navigation strategy remained the same as in the previous week. It is sufficient, and after proper detection, positioning and classification of the mobile phone as well, we could get 11 points. Nevertheless, 8 points should be enough for qualification into the Virtual Track competition, so now we are converting the data, and it takes some time before they are approved on the app.ignitionrobotics.org DARPA SubT portal.

```
0 0 starting
14 872000000 scoring_started
805 207000000 new_artifact_reported
805 207000000 modified_score 1
805 207000000 new_total_score 1
805 207000000 new_artifact_reported
805 207000000 modified_score 1
805 207000000 new_total_score 2
805 207000000 new_artifact_reported
805 207000000 modified_score 1
805 207000000 new_total_score 3
805 207000000 new_artifact_reported
805 207000000 modified_score 1
805 207000000 new_total_score 4
1094 191000000 new_artifact_reported
1094 191000000 modified_score 1
1094 191000000 new_total_score 5
1094 191000000 new_artifact_reported
1094 191000000 modified_score 1
1094 191000000 new_total_score 6
1094 191000000 new_artifact_reported
1094 191000000 modified_score 1
1094 191000000 new_total_score 7
1094 191000000 new_artifact_reported
1094 191000000 modified_score 1
1094 191000000 new_total_score 8
1094 191000000 new_artifact_reported
1094 191000000 modified_score 0
1094 191000000 new_total_score 8
```

So that was the good news, and now the bad news …

```
0:00:00.047178 12 b'\x02sRA SCdevicestate 2\x03'
```

If you are not an expert on SICK lidars (we are not), you may not see it as bad news. In short, this is a failure report, „Device failure - please check operations environment”, and since Monday we have had no scan data from the horizontal SICK sensor:

b'\x02sRA LMDscandata 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0\x03' b'\x02sRA LMDscandata 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0\x03' b'\x02sRA LMDscandata 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0\x03'

Our impression is that even the motor in the sensor is not turning, so now we are discussing with SICK support what can be done in the short time remaining before STIX.
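Both telegrams above use SICK's ASCII framing (CoLa A): STX (0x02), space-separated fields, ETX (0x03). A small parser makes such failures easy to flag automatically; the checks in `looks_broken` are hypothetical heuristics based only on the two symptoms described in this post:

```python
STX, ETX = b'\x02', b'\x03'


def parse_telegram(raw):
    """Split b'\\x02sRA <command> <args...>\\x03' into (type, command, args)."""
    if not (raw.startswith(STX) and raw.endswith(ETX)):
        raise ValueError('not a framed telegram: %r' % raw)
    fields = raw[1:-1].decode('ascii').split()
    return fields[0], fields[1], fields[2:]


def looks_broken(raw):
    """Heuristic: device state 2 or a scan telegram with nothing but zeros."""
    _, command, args = parse_telegram(raw)
    if command == 'SCdevicestate':
        return args == ['2']  # 2 is the state reported above as device failure
    if command == 'LMDscandata':
        return all(a == '0' for a in args)
    return False
```

Logging such a check at startup would have shown the dead sensor immediately instead of an empty scan stream.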

The last news is related to the new robotika subt-artf repository, which contains some pictures of artifacts discovered in the virtual qualification world:

These pictures were taken by X2 robots with configuration #4, i.e. the high-resolution camera.

Week 9

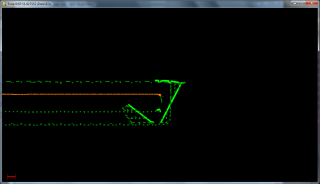

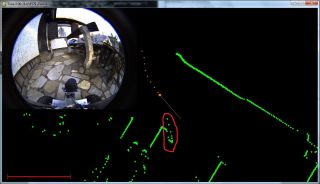

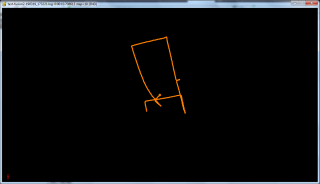

20th March 2019 — Back on Track + reprocessing

Thanks to the great support of the SICK company, we are now back on track with a replacement TiM551 sensor!

The first tests were not as smooth as one would expect, but the problem was no longer related to the sensor. For some unknown reason the CAN bus terminator was missing (yes, it worked before, and it should be inside the electronics box), and Eduro behaved very strangely: out of approximately 10 modules it saw only one, and after a restart and checking the cables it was a different one. Quite a nightmare two weeks before we fly to Colorado …

After adding a new CAN bus terminator, everything worked fine as before.

In mean time we tested reprocessing of old logfiles with a "new" code — it is

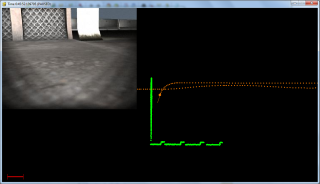

not really that new, because it was already used in Virtual Track but not

tried on real machines yet. It is related to fusion of position computation,

when IMU yaw defines the heading and it looked quite promising:

These are relatively long corridors at the university (the scale is hardly visible in the bottom left corner). I was told that near the end of the loop there is machinery generating a strong magnetic field, and that is probably the reason why it failed to close the loop.
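The fusion mentioned above can be sketched as dead reckoning where the encoders provide only the traveled distance and the IMU yaw provides the heading. This is an illustrative sketch, not the OSGAR code; it also shows why a disturbed yaw directly bends the whole trajectory:

```python
import math


def fuse_odometry(samples, start=(0.0, 0.0)):
    """Dead reckoning with encoder distance and absolute IMU yaw.

    `samples` is a list of (distance_m, imu_yaw_rad): the distance driven
    since the previous sample and the yaw reported by the IMU. Wheel-based
    heading is ignored, so wheel slip does not turn the trajectory, but any
    error in the IMU yaw (for example near a strong magnetic field) does.
    """
    x, y = start
    path = [(x, y)]
    for dist, yaw in samples:
        x += dist * math.cos(yaw)
        y += dist * math.sin(yaw)
        path.append((x, y))
    return path


# A 1 m square driven counterclockwise ends back at the start
square = [(1.0, 0.0), (1.0, math.pi / 2), (1.0, math.pi), (1.0, 3 * math.pi / 2)]
end = fuse_odometry(square)[-1]
```

A yaw error that is constant along the loop only rotates the map; an error that appears locally, such as near the magnetic machinery, shifts everything driven afterwards and leaves the loop open.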

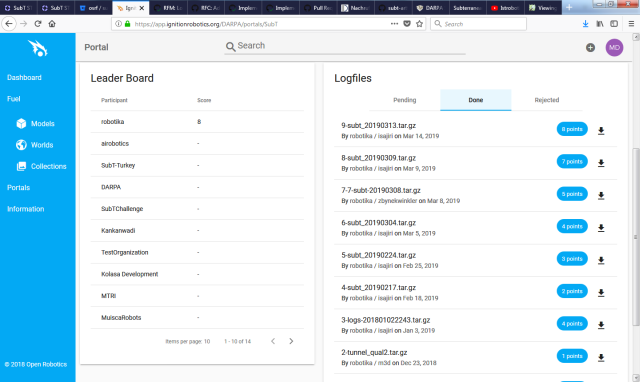

21st March 2019 — Virtual Track — Leader Board!

I just discovered that our logs from the Virtual World have been reviewed! Moreover, there is also a LEADER BOARD, and it looks the way ROBOTIKA TEAM would like it:

p.s. I made another mistake regarding deadlines: April 22, 2019 is not the beginning of the Virtual Track competition but the Qualification Deadline! … so I am glad we already have 8 points a month in advance.

Week 10

29th March 2019 — No news good news?

There are only 5 days remaining until takeoff to Denver, and it may look like nothing is going on. It is actually the opposite, but the tasks are not necessarily technical, except for issues like the Eduro charger, which works on 230V only, so we will have to prepare an alternative.

An example of a difficult question is what to wear and take with us. It is possible to check the web cams directly: 7 degrees Celsius, snow:

There are some code bits, like reporting artifacts (see the SubT STIX - add tool for manual artifact report pull request). It was tested with the DARPA django server, but some updates were received today: The competition scoring server will accept keyword strings as in the section headers of the Artifacts Specification STIX Event document (i.e., capitalized words with spaces as necessary). See below for the set of competition artifact type strings: ["Survivor", "Cell Phone", "Backpack", "Drill", "Fire Extinguisher"]

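A minimal sketch of checking the type string before a report is sent, so that a typo does not waste one of the limited reports. The fields (x, y, z, type) mirror the `Report {...}` line printed by report_artf.py in the logs further down; sending them as a JSON body is an assumption, and `make_report` is a hypothetical helper, not the tool from the pull request.

```python
import json

# Artifact type strings announced for the STIX scoring server (exact spelling matters).
ARTIFACT_TYPES = ("Survivor", "Cell Phone", "Backpack", "Drill", "Fire Extinguisher")


def make_report(artf_type: str, x: float, y: float, z: float) -> str:
    """Build a JSON report body, rejecting unknown artifact types early."""
    if artf_type not in ARTIFACT_TYPES:
        raise ValueError(f"unknown artifact type {artf_type!r}, expected one of {ARTIFACT_TYPES}")
    return json.dumps({"x": float(x), "y": float(y), "z": float(z), "type": artf_type})


print(make_report("Backpack", 43.276, 0, 0))
# {"x": 43.276, "y": 0.0, "z": 0.0, "type": "Backpack"}
```

The check is case sensitive on purpose, because the server expects the capitalized strings exactly as listed.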
Another chapter is the presentation of the team. Last Monday there was a call with the organizers, and we were informed that their media crew will be there, so it is necessary to get ready for their questions etc.

Eduro and Robík have already been through the packed state (both robots will travel in normal suitcases), but we should try at least one more unpack/pack cycle before leaving for DEN.

30th March 2019 — Robotika is becoming International!

As preparation for the DARPA media call we were asked to provide Twitter and Instagram links. This was also the last moment to decide how we will present our Robotika.cz Team during the whole SubT Challenge. There are two main points which are not obvious when you mention the https://robotika.cz website:

- we are not only a Czech (CZ) team

- we are also involved in commercial activities and we hope that SubT will help us to reinforce this direction

So we decided to go International: Robotika International (the web is currently a mirror image of Robotika.cz, but it will change during this last week). There are also new Twitter and Instagram pages available.

Robotika International - SubT banner

At the moment Robotika International includes Czech, Swiss and U.S.

sub-teams.

p.s. we have a new Swiss member: Bella, welcome on board!

3rd April 2019 — Time to pack for STIX

There are less than 10 hours left until take-off, and then our journey to the Colorado mountain mines can begin. I am actually looking forward to resting on the plane right now …

I will only mention some bits that piled up in e-mails …

1) There is a new website subtchallenge.world for the Virtual Track, and the Leader Board is publicly available there. Nate also mentioned in issue #58: The leaderboard is also live, and shows that "robotika" has 8 points, which is enough to pass qualifications. … so I guess we are officially qualified!

2) I have not given enough room to Robík so far, so here are at least some pictures from testing in Štola Josef:

3) Eduro is packed in a standard bag … 77 cm x 47 cm x 27 cm

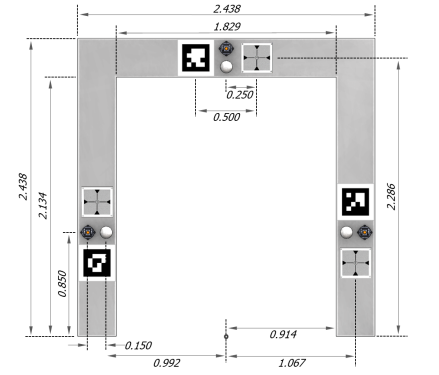

4) There is a new revision of SubT_Challenge_Competition_Rules_Tunnel_Circuit.pdf (Revision 2, March 25, 2019), and it includes a detailed specification of the Star(t) Gate (tunnel entrance)

Starting gate and reference frame dimensions

5) The schedule for the Robotika.cz Team has already been set:

6) We do not have any team photo yet … so let's at least publish one from Štola Josef again:

Wish us good luck, and hopefully the next news will be from Idaho Springs, Colorado, USA.

4th April 2019 — Idaho Springs, CO, USA

We are now in Idaho Springs and, what is even more important, the robots are with us! Yes, I am not joking: I just exchanged e-mails with the CRAS team, and they got here on Tuesday, but their robots were delayed by two days and delivered to the wrong address! So we were lucky on this part, for sure.

Eduro has already done some exploration of our new home:

The corresponding test logfiles are here:

Here are also some pictures from the journey:

Unfortunately, as I was taking a picture of the American flag, my camera displayed

Error E18: The E18 error is an

error message on Canon digital cameras. The E18 error occurs when anything

prevents the zoom lens from properly extending or retracting. The error has

become notorious in the Canon user community as it can completely disable the

camera, requiring expensive repairs. … so that is the only current dark

side. :-(

Now it is time to charge Robík's LiPol batteries (they had to be discharged for the trip), fix the resets on Eduro (we confirmed that they are caused by lags of up to 20 ms) and maybe take a small hike up the mountain across the road …

p.s. you can have a look at a nice article about SubT in IEEE Spectrum, with a link to the video Demonstrations of DARPA's Ground X-Vehicle Technologies

6th April 2019 — SubT traps, OSGAR slots, Robík API, delayed video processing …

Yesterday was quite busy. The ever-growing TODO list contained some major points which had to be addressed with high priority. So while we are staying in a rented house in Idaho Springs, we can exercise and test some basic functionality on the terrace.

Our base in Idaho Springs

The spacious house has a nice deck, and sure enough our robots Eduro and Robík behaved differently despite having the same code and strategy. While Eduro was reasonably following the border of the allowed playground at a 1 meter distance, Robík got stuck at the house corner!? Curious?

The reason is a design flaw of the simple exploration algorithm we used back in the Virtual Track in December 2018. The navigation uses only the left side of the scan to follow the wall, but it uses the front sector for the speed controller. It is not only necessary to explore the tunnel circuits but also to do it fast, so at least in the Virtual Track the robots move at their maximal feasible speed. Do you see the „stupid” problem? The robot was perfectly aligned with the straight left wall and the gap was exactly 1 meter, which was also the input parameter (desired distance from the wall). So the steering kept the robot going straight while the speed controller stopped it because of the house corner.

And why was Eduro not caught in this trap? Eduro's SICK lidar is 30 cm above the ground, while Robík has it slightly higher, at 38 cm. The wall is not vertical but tilted outwards … in other words, the gap sensed by Eduro is slightly narrower than for Robík! Finally, Robík drives in a straight line quite steadily while Eduro twists a little … but we could reproduce the problem on Eduro too with a 90 cm desired distance. Fixed: both algorithms now have to share the same front sector space.
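The trap and the fix can be sketched as below. This is a simplified model, not the actual subt.py code: the sector limits, the proportional steering law and the stop distance are assumptions chosen for illustration. The `share_front` flag switches between the original behavior (steering looks at the left sector only) and the fixed one (steering also sees the front sector that stops the robot).

```python
from typing import Iterable, List, Tuple

Scan = Iterable[Tuple[float, float]]  # (angle_deg, distance_m); 0 = ahead, + = left

# Hypothetical sector limits in degrees; the real values are not given in the post.
FRONT_SECTOR = (-20.0, 20.0)
LEFT_SECTOR = (20.0, 110.0)


def min_in_sector(scan: List[Tuple[float, float]], sector: Tuple[float, float]) -> float:
    """Closest valid reading inside an angular sector (inf if there is none)."""
    lo, hi = sector
    dists = [d for angle, d in scan if lo <= angle <= hi and d > 0.0]
    return min(dists, default=float("inf"))


def wall_follow_command(scan: Scan, desired_dist: float, max_speed: float,
                        gain: float = 1.0, stop_dist: float = 0.5,
                        share_front: bool = True) -> Tuple[float, float]:
    """Return (speed, angular_speed) for following the wall on the left.

    Speed is always limited by the front sector. With share_front=True the
    steering also considers the front sector, so an obstacle that stops the
    robot also turns it away; share_front=False reproduces the trap.
    """
    scan = list(scan)  # iterated twice below
    front = min_in_sector(scan, FRONT_SECTOR)
    left = min_in_sector(scan, LEFT_SECTOR)
    wall = min(left, front) if share_front else left
    angular_speed = gain * (wall - desired_dist)  # > 0 turns left, towards the wall
    speed = 0.0 if front < stop_dist else max_speed
    return speed, angular_speed


# Left wall exactly at the desired 1 m, house corner 0.4 m ahead.
scan = [(a, 1.0) for a in range(30, 111)] + [(a, 0.4) for a in range(-15, 16)]
print(wall_follow_command(scan, 1.0, 0.5, share_front=False))  # stuck: no speed, no turn
print(wall_follow_command(scan, 1.0, 0.5))                      # turns right, away from the corner
```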

There was another detail related to this issue: the Robík API. The robot drives forward without any problem, but the interpretation of reverse motion was different. Its author, PavelS, gained know-how from other outdoor robot competitions and found out that it is much better to turn his robot along a circle than on the spot. So the steering command is not an angular speed but rather the circle on which the robot should move. This bug/misinterpretation also had a negative influence in the situation mentioned above, where a very close object in front makes the robot back up. Fixed: the high-level cortexpilot driver simply swaps the direction for reverse motion.
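Why the swap is needed can be shown with a little arithmetic. The real Robík/cortexpilot interface is not shown in the post; the sketch assumes a hypothetical API that takes a speed and a signed curvature (inverse turning radius). Since angular speed = speed × curvature, the curvature equivalent to a given angular speed changes sign when the speed is negative.

```python
from typing import Tuple


def to_circle_command(speed: float, angular_speed: float) -> Tuple[float, float]:
    """Convert (speed, angular_speed) into (speed, curvature) for a circle-based API.

    Hypothetical helper: curvature = angular_speed / speed in 1/m, positive means
    a circle to the left. For reverse motion (speed < 0) the sign of the
    curvature flips, which is the "swap direction when reversing" fix.
    """
    if speed == 0.0:
        raise ValueError("a circle-based API cannot express turning on the spot")
    return speed, angular_speed / speed


print(to_circle_command(0.5, 0.25))   # (0.5, 0.5): forward, circle to the left
print(to_circle_command(-0.5, 0.25))  # (-0.5, -0.5): reverse, circle to the right
```

Turning on the spot has no finite circle, so the helper rejects zero speed instead of guessing.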

The biggest issue with Eduro was motor resets due to watchdog timeouts. They have been set tight since the times when Eduro was a small robot capable of speeds up to 4 m/s (I think we never reached that speed because it would need a lot of test space). After discussions we decided to implement something similar to Qt slots. The data path goes from the module which parses data from the CAN bridge into CAN messages (and also monitors the health of all CAN modules), through the Eduro-specific driver, and back to the CAN bridge. With the OSGAR slots this is all done in one go, without any internal communication overhead. Fixed.
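The slot idea can be sketched as follows. This is not OSGAR's actual API: `SlotBus`, the channel names, the frame layout and the CAN ids are all hypothetical. The point is that `emit()` calls the connected handlers directly in the caller's thread, so the whole chain (raw bytes, parsed CAN message, driver reaction, outgoing command) runs in one go, without queues or thread switches between modules that could add the lags that trip the watchdog.

```python
from typing import Any, Callable, Dict, List


class SlotBus:
    """Minimal Qt-like signal/slot dispatcher with synchronous delivery."""

    def __init__(self) -> None:
        self._slots: Dict[str, List[Callable[[Any], None]]] = {}

    def connect(self, channel: str, handler: Callable[[Any], None]) -> None:
        self._slots.setdefault(channel, []).append(handler)

    def emit(self, channel: str, data: Any) -> None:
        # Handlers run immediately, in the emitting thread, in connection order.
        for handler in self._slots.get(channel, []):
            handler(data)


bus = SlotBus()
sent: List[Any] = []  # stands in for writing back to the CAN bridge


def parse_raw(raw: bytes) -> None:
    # Hypothetical framing: 2-byte big-endian CAN id followed by the payload.
    can_id = int.from_bytes(raw[:2], "big")
    bus.emit("can_msg", (can_id, raw[2:]))


def eduro_driver(msg) -> None:
    can_id, _payload = msg
    if can_id == 0x181:  # hypothetical status message from a motor module
        bus.emit("can_tx", (0x201, b"\x00\x00"))  # hypothetical reply command


bus.connect("raw", parse_raw)
bus.connect("can_msg", eduro_driver)
bus.connect("can_tx", sent.append)

bus.emit("raw", b"\x01\x81\x10\x20")  # one incoming frame from the bridge
print(sent)  # [(513, b'\x00\x00')]
```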

The next issue on the list is delayed processing of recorded video. It serves

two purposes:

- post-processing analysis by human and/or powerful computer(s) at the Base Station

- simple automatic artifact detection on board as a trigger for the early return and fast report of the very first artifact

So while the post-processing needs the complete video record, if possible, real-time detection just needs whatever the robot is currently capable of computing.
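The two purposes can be served by one small buffer. A minimal sketch under stated assumptions: every frame is appended to a complete record (kept in memory here for simplicity; a real recorder would write to the log file), while the on-board detector only ever takes the newest frame and silently skips frames it had no time for. `FrameTap` is a hypothetical name.

```python
import threading
from typing import Any, List, Optional


class FrameTap:
    """Keep every frame for post-processing, hand only the newest one to a detector."""

    def __init__(self) -> None:
        self.recorded: List[Any] = []       # complete record for the Base Station
        self._latest: Optional[Any] = None  # single slot for real-time processing
        self._lock = threading.Lock()

    def push(self, frame: Any) -> None:
        """Called by the camera thread for every frame."""
        with self._lock:
            self.recorded.append(frame)
            self._latest = frame  # an unprocessed older frame is simply overwritten

    def take_latest(self) -> Optional[Any]:
        """Called by the detector whenever it is ready; None if nothing new arrived."""
        with self._lock:
            frame, self._latest = self._latest, None
        return frame


tap = FrameTap()
for frame_id in range(1, 4):  # camera delivers three frames while the detector is busy
    tap.push(frame_id)
print(tap.take_latest())  # 3: frames 1 and 2 were skipped for real time
print(tap.take_latest())  # None: nothing new since the last call
print(tap.recorded)       # [1, 2, 3]: nothing was lost for post-processing
```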

Yes, we are going to be busy again. BTW the first group is already testing at Edgar's Mine today … so in the end I am happy that we are the 2nd group, starting on Monday 8th April 2019.

p.s. Jirka sent us a link to yesterday's IEEE Spectrum article DARPA Subterranean Challenge: Meet the First 9 Teams

p.s.2 we still found some time to hike a little in the nearby mountains (Idaho Springs has an elevation of 2410 m above sea level, and this (sub)team picture is from 3400 m)

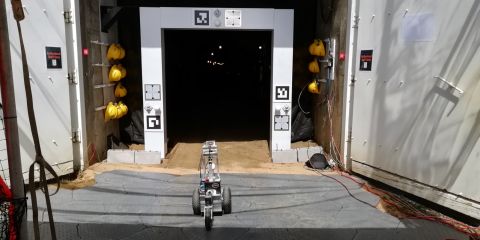

8th April 2019 — First points, first bruises …

Today was the first day of STIX testing in Edgar's Mine, in the Miami and Army tunnels. Our first stop was Miami, a nice, straight tunnel with lights … in other words beautiful … yes, except for the railway in the middle.

In the morning we discussed the final strategy regarding the sequence of deployed robots, desired distance to the wall, side selection, return on a detected artifact, expected log download and processing … so we ended up with the conclusion that all we can reasonably do is to define one timeout for the robot's return and completely ignore automatic artifact detection.

The first victim (deployed robot) was Eduro: 4 minutes timeout for the return HOME and 60 cm from the wall. The robot did not return.

The second test was robot Robík: 4 minutes timeout, 60 cm, left wall. Game changer!

There are not many people who would appreciate these impressionistic images, but I really did. Yes, the first great news was that Robík actually managed to return and had already found our first artifact! Mission completed, we can go home now, right?

```
M:\git\osgar\examples\subt>python report_artf.py Backpack 43.276 0 0
Get Status
b'{"run_clock":0.003218,"score":0,"remaining_reports":"70","current_team":"robotika"}'
Report {'x': 43.276, 'y': 0.0, 'z': 0.0, 'type': 'Backpack'}
b'{"run":"0.1.1 (Team Robotika: STIX Miami Practice Scenario)","url":"http://localhost:8000/api/reports/2/","run_clock":0.0,"submitted_datetime":"2019-04-08T18:55:38.102984+00:00","score_change":0,"report_status":"run not started","team":"robotika","y":0.0,"x":43.276,"z":0.0,"type":"Backpack","id":2}'
Get Status
b'{"run_clock":0.000916,"score":0,"remaining_reports":"70","current_team":"robotika"}'
```

Well, as you can see, we did not get the point … but it turned out that the server was not in „competition mode”, and we got confirmation that the position of our first artifact was correct.

Then my memories become a bit fuzzy; I should have kept a log of all the parameters we changed over 5 hours total in both tunnels. There was a nice Fire Extinguisher at around 125 m from the gate, but we did not report its position precisely enough. When we analyzed the log files, we found another Fire Extinguisher much closer, and that one worked even on the server:

```
M:\git\osgar\examples\subt>python report_artf.py "Fire Extinguisher" 64.757 -2 0
Get Status
b'{"run_clock":1374.175583,"score":0,"remaining_reports":"69","current_team":"robotika"}'
Report {'x': 64.757, 'y': -2.0, 'z': 0.0, 'type': 'Fire Extinguisher'}
b'{"run":"0.1.1 (Team Robotika: STIX Miami Practice Scenario)","url":"http://localhost:8000/api/reports/4/","run_clock":1374.188928,"submitted_datetime":"2019-04-08T19:27:41.959721+00:00","score_change":1,"report_status":"scored","team":"robotika","y":-2.0,"x":64.757,"z":0.0,"type":"Fire Extinguisher","id":4}'
Get Status
b'{"run_clock":1374.340545,"score":1,"remaining_reports":"68","current_team":"robotika"}'
```

Well, yes, you have to be a bit crazy to get excited by this JSON output, but

note "score_change":1
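Instead of reading the raw bytes by eye, the reply can be checked programmatically. A minimal sketch: `parse_report_response` is a hypothetical helper, and the sample reply is the scored one from the log above. It relies only on the standard `json` module, whose `json.loads` accepts UTF-8 bytes since Python 3.6.

```python
import json


def parse_report_response(raw: bytes) -> dict:
    """Extract the fields worth checking from a scoring-server report reply."""
    data = json.loads(raw)
    return {
        "status": data.get("report_status"),
        "score_change": int(data.get("score_change", 0)),
    }


reply = (b'{"run":"0.1.1 (Team Robotika: STIX Miami Practice Scenario)",'
         b'"url":"http://localhost:8000/api/reports/4/","run_clock":1374.188928,'
         b'"submitted_datetime":"2019-04-08T19:27:41.959721+00:00","score_change":1,'
         b'"report_status":"scored","team":"robotika","y":-2.0,"x":64.757,"z":0.0,'
         b'"type":"Fire Extinguisher","id":4}')
print(parse_report_response(reply))  # {'status': 'scored', 'score_change': 1}
```

The first Backpack reply would come out as `'run not started'` with a score change of 0, which is exactly the "not in competition mode" case.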

Robík returned several times, while Eduro, unfortunately, never did. On the other hand, Eduro had much nicer videos (better light and camera).

Later we moved to the Army tunnel, and what a change! A dirty, dark tunnel, curved already at the entrance. The robots got trapped by various mess-objects … sigh. Twice Robík was brought back by the „rescue party” in pieces … once one wheel was loose and the fix was easy. The last time was really bad: according to the recorded video, the robot lay upside-down in the tunnel for 15 minutes, crying, but we did not hear it … well, hopefully PavelS will fix the tower for tomorrow's competition run.

Videos

… to be continued

p.s. Sometimes it is better not to know what the kids are doing when they leave home, but … I made a collection of „death pictures”, i.e. the last moments before the robot Eduro got stuck. There was one in particular which really scared me! BTW I also found one artifact in the Army tunnel …

Also note that all logfiles are uploaded to:

- http://osgar.robotika.cz/subt/robik/miami/

- http://osgar.robotika.cz/subt/eduro/miami/

- http://osgar.robotika.cz/subt/robik/army/

- http://osgar.robotika.cz/subt/eduro/army/

p.s.2 sometimes I feel really stupid … why did I not even check the artifacts detected by the robot?! Sometimes robots are smarter, and they do not get tired as easily as humans …

10th April 2019 — Mission Impossible Completed!

The SubT Challenge STIX for Group 2 is over now. I would consider it a big success for the Robotika Team, as we reached our minimalistic goal with the currently available hardware platforms. Both robots were used in the simulated run and both scored autonomously! Life is good (R) … and now some details …

The main task for the competition-like day was to choose a strategy and maybe improve our tools. We did not change the code for the robots, as there was not enough time for any reasonable testing. If you would like to see the dirty code, have a look at OSGAR with the tag SUBT_CHALLENGE_STIX_2019, with the commits from the last two days:

```
1de7305 lidarview.py - accept user rotation via --rotate in degrees
efdd16c lidarview.py - temporarily increase window size for easier analysis
60590d0 lidarview.py - use max image size for VGA (Robik, 640x480)
3bb2f93 log2video.py - fix timestamp display for Robik camera (640x480)
c8a8cdd subt.py - add move 2.5m straight
997891d eduro-subt-estop.json - FIXUP missing pose3d
b422528 estop integration for robik.
24ec9a9 estop.py - shutdown on EStop
6c1f70d Add eduro-subt-estop.json configuration
5a125c8 estop.py - first implementation with publish emergency_stop
feb41b2 Add estop.py test for SubT STIX
```

As one of the few teams, we integrated the DARPA E-Stop device, which we got on Sunday night; on Monday morning it was already integrated. Usually I would refuse to do any modification at this stage, but STIX is also an exercise for the organizers, so we did it as a forthcoming gesture. The E-Stop specification is already publicly available (see Transponder_and_Emergency_Stop_Integration_Guide.pdf), and with a single switch DARPA can kill all robots at once. This is also mandatory for the real competition, so we are glad we have already passed.
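The DARPA radio protocol itself is described in the integration guide and is not modeled here. What the commit messages suggest (publish `emergency_stop`, shutdown on EStop) can be sketched as a latching guard placed in front of the motor commands. `EStopGuard` and its methods are hypothetical names, not the code in estop.py.

```python
import threading
from typing import Tuple


class EStopGuard:
    """Latching emergency stop in front of the motor driver (hypothetical helper)."""

    def __init__(self) -> None:
        self._stopped = threading.Event()  # safe to set from another thread

    def trigger(self) -> None:
        """Call when the E-Stop receiver reports a stop; the state stays latched."""
        self._stopped.set()

    @property
    def stopped(self) -> bool:
        return self._stopped.is_set()

    def filter_command(self, speed: float, angular_speed: float) -> Tuple[float, float]:
        """Pass commands through until stopped, then force zero motion."""
        if self._stopped.is_set():
            return 0.0, 0.0
        return speed, angular_speed


guard = EStopGuard()
print(guard.filter_command(0.5, 0.1))  # (0.5, 0.1)
guard.trigger()                         # e.g. from a thread reading the E-Stop receiver
print(guard.filter_command(0.5, 0.1))  # (0.0, 0.0)
```

Latching (no automatic reset) is a deliberate choice here: once a robot is killed it should not start moving again on its own.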

The second code modification on Monday (the testing day) was adding the line

```python
self.go_straight(2.5)  # go to the tunnel entrance
```

to the subt.py code. There are several markers in front of the gate that help define the DARPA global coordinate system. We decided to pick the one at 2.5 meters, so that we see the gate on the cameras before the robot enters the mine.
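Starting from a known marker means odometry positions can be converted into the DARPA frame by a fixed 2D rigid transform. The post does not spell out the frame convention, so this sketch assumes the DARPA origin lies at the gate with x pointing into the tunnel, and that the robot starts on the 2.5 m marker (x = -2.5 m) facing the gate. `to_darpa_frame` and its defaults are hypothetical.

```python
import math
from typing import Tuple


def to_darpa_frame(x_local: float, y_local: float,
                   start_x: float = -2.5, start_y: float = 0.0,
                   start_heading: float = 0.0) -> Tuple[float, float]:
    """Map a point from the robot's start (odometry) frame into the DARPA frame.

    Assumes the robot started at (start_x, start_y) in the DARPA frame with
    heading start_heading (radians, 0 = along the DARPA x axis).
    """
    c, s = math.cos(start_heading), math.sin(start_heading)
    return (start_x + c * x_local - s * y_local,
            start_y + s * x_local + c * y_local)


print(to_darpa_frame(2.5, 0.0))   # (0.0, 0.0): after go_straight(2.5) the robot is at the gate
print(to_darpa_frame(45.0, 1.0))  # (42.5, 1.0)
```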

And finally, changes to lidarview.py: increase the displayed camera image (up to now it was used only as a reference of what was happening, but now it was the only source for artifact detection), increase the window size to see more, and some tweaks to display the time in seconds in the collected Robík video stream (low VGA resolution). And that is it.

Back to yesterday's simulated run. As in the real competition scheduled for August 2019, there is one hour to map as many artifacts in the tunnels as possible. As it was still a test, we were allowed to restart once, but the time restriction applied.

After the first day we decided to use Robík as the primary robot and Eduro as a backup in case Robík failed to fulfill its mission. The first exploration used the same parameters as on Monday, when we scored the very first point: 0.6 meters from the left wall, slow speed 0.3 m/s, return in 4 minutes. And Robík performed very well (see video). It is worth pointing out that the compass did not work very well at the Miami tunnel entrance:

Here the robot was following a straight(!) wall. Team CRAS confirmed that they would have destroyed their drones if they had kept the compass enabled. Off topic: the GPS week overflow caused some trouble for GPS devices used in SubT (not ours; again a story from the CRAS team). The software required an update, although the GPS was not even physically mounted(?) … I will try to get more details on this subject later (the 10-bit week counter rolled over from week 1023 back to 0 on 6 April 2019).
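The rollover arithmetic is easy to check. GPS weeks are counted from 6 January 1980, and the legacy navigation message carries only the week number modulo 1024. This sketch works at day resolution and ignores leap seconds (GPS time was 18 s ahead of UTC in 2019, so the actual rollover happened a few seconds before midnight UTC). `naive_decode` models a hypothetical receiver that assumes a fixed 1024-week era.

```python
from datetime import date, timedelta

GPS_EPOCH = date(1980, 1, 6)  # first day of GPS week 0


def gps_week(day: date) -> int:
    """Full GPS week number of a calendar day (leap seconds ignored)."""
    return (day - GPS_EPOCH).days // 7


def broadcast_week(day: date) -> int:
    """The week number as carried by the legacy 10-bit field."""
    return gps_week(day) % 1024


def naive_decode(week10: int, assumed_era: int = 1) -> date:
    """Start of a 10-bit week as seen by a receiver stuck in a fixed 1024-week era."""
    return GPS_EPOCH + timedelta(weeks=week10 + 1024 * assumed_era)


for d in (date(2019, 4, 6), date(2019, 4, 7)):
    print(d, gps_week(d), broadcast_week(d))
# 2019-04-06 2047 1023
# 2019-04-07 2048 0
print(naive_decode(broadcast_week(date(2019, 4, 7))))  # 1999-08-22
```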

(hmm, I have only an incomplete log on my notebook now: yesterday we changed strategy and started to download the log file as soon as the robot was available on WiFi, and then we focused on restarting Robík for the second tunnel exploration instead of downloading the complete log ... maybe later we will also publish the content of the 'black boxes')

The log file was (partially) downloaded and the robot restarted for the next mission. This time we picked a higher speed (0.5 m/s), a slightly larger distance from the wall (0.7 m) and a longer exploration time (9 minutes). Yes, here we used the experience from Monday, as 10 minutes was enough to reach the complex crossing with some deadly caves, so we did not want to risk it yet. Nevertheless, Robík got lost and ended up moving in circles on the way.

Note the AprilTags deeper in the tunnel: they are very useful for defining the DARPA coordinate system, as the robot reaches them after approximately 25 meters and can update its measurements.

Robík was out of WiFi reach (we really did not worry much about the connection, as the primary goal was to return autonomously to the Base Station), so we sent the Eduro robot: 0.75 m from the left wall, 0.5 m/s, 4 minutes timeout … i.e. if everything went well, it should reach a further point than Robík in the first run. We were lucky, and Eduro discovered a "Backpack" artifact before it got stuck on the rails (video).
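The "further point than Robík" reasoning is simple arithmetic: with a return timeout, the robot can get at most speed × timeout away from the gate before it turns back. The sketch below applies this upper bound to the three runs described above; it ignores slowing down for obstacles, and the helper name is illustrative.

```python
def max_one_way_distance(speed_mps: float, timeout_s: float) -> float:
    """Upper bound on the distance driven before the return timeout fires."""
    return speed_mps * timeout_s


runs = {
    "Robík, run 1 (0.3 m/s, 4 min)": (0.3, 4 * 60),
    "Eduro (0.5 m/s, 4 min)": (0.5, 4 * 60),
    "Robík, run 2 (0.5 m/s, 9 min)": (0.5, 9 * 60),
}
for name, (speed, timeout) in runs.items():
    print(f"{name}: at most {max_one_way_distance(speed, timeout):.0f} m")
# Robík, run 1 (0.3 m/s, 4 min): at most 72 m
# Eduro (0.5 m/s, 4 min): at most 120 m
# Robík, run 2 (0.5 m/s, 9 min): at most 270 m
```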

Luckily enough, Eduro was still on the network, so we could download the logfile and report our second artifact:

This was the end of the first run. We still had almost 30 minutes left, so we tried a more dangerous exploration on the right side, but the score did not change (details and logfiles will be published later).

!! TWO POINTS FOR ROBOTIKA !!

p.s. our vacation starts today and I can already hear the rain …

18th April 2019 — There and back again

The journey back was not as smooth as we had hoped. The problems started already at the Denver airport. The Lufthansa rules were different there: while in Prague we could check in our baggage as a group, with each bag up to 32 kg as long as the total was less than 4 * 23 kg, in Denver every bag had to fit within the 23 kg limit. :-( So we repacked our robots at the airport, removing the laser from Robík and the protective stuffing from Eduro.

The next surprise was at Frankfurt airport. Li-ion batteries have a limit (100 Wh per battery), and they have to be in carry-on luggage (so that in case of a fire it can be detected and handled by the passengers). It is prohibited to check them in as baggage. There was another security check at Frankfurt (as far as I remember, there was none on the way to the U.S.; we simply transferred from one gate to another). And the German security police refused the battery pack on board :-(, saying that it had to be checked in. Yes, it looks like a pack of explosives, but … it is not allowed to be checked in. And we had less than 1 hour for the transfer, so after back-and-forth runs and arguments PavelS left his bag with four batteries at the check-in counter. This way we threw away 10 000 CZK (approx. $500)! We could stay at a hotel for a week for that price … sigh.

So the only workarounds are a direct flight, sending the batteries as cargo, or using a different type of battery.

p.s. we received an e-mail from DARPA regarding qualification for the August Tunnel Circuit: We hoped you all made it back safely from Colorado last week. We

wanted to follow up and let you know no additional Qualifications materials are

required for the Tunnel Circuit, as your STIX participation has qualified you

for participation.

We will still require a demonstration of integration with the Tier 2

Emergency Stop and Transponder, with a deadline of July 1st. In addition

any/all new platforms you anticipate using at Tunnel Circuit must also need to

be qualified by July 1st.

24th April 2019 — The STIX Teams

There were 9 teams testing their robots at STIX in Colorado: 7 sponsored by DARPA and 2 Czech teams training for the Tunnel Circuit competition in August. Unfortunately, there was not much room for information exchange, as each team had its own time slot and assigned tunnel (Miami or Army). At the moment there are at least team pictures available on DARPA Twitter:

Group 1

Group 2

Group 3

I asked the teams the following questions:

- number and type of deployed robots?

- autonomous or teleoperated or combination?

- score in the simulated run? (I suppose that all teams ended up in the Miami tunnel?)

- maximal distance reached from the base station?

- number of people at the STIX event? (although I could count them on the team picture on DARPA Twitter)

And here is the summary (the table will be updated as soon as I receive more answers):

| Team | Deployed robots | Navigation | Score | Max. distance | Num. people |
|---|---|---|---|---|---|
| CERBERUS | | | | | |
| CoSTAR | 25 | | | | |
| CRETISE | | | | | |
| CSIRO Data61 | | | | | |
| CTU-CRAS | 2x TRADR UGV + 1x hexacopter | combination | 4 | 150 m | 12 |
| Explorer | | | | | |
| PLUTO | | | | | |
| MARBLE | | | | | |
| Robotika.cz | 4W Robík, tricycle Eduro | autonomous | 2 | Eduro 68 m, Robík 221 m | 5 |

3rd May 2019 — DARPA STIX Video

The original DARPA article about STIX results is here:

https://www.darpa.mil/news-events/2019-04-29.

11th May 2019 — DARPA STIX Robotika.cz pictures

20th May 2019 — CERBERUS and CRAS videos from Colorado

There are a couple of videos from STIX in Edgar's Mine in Colorado available on YouTube:

CERBERUS

- ANYmal at DARPA SubT STIX — ANYmal, a quadrupedal robot developed by RSL (ETH Zurich) and ANYbotics, is deployed in the dark and dirty corridors of Edgar Experimental Mine in Idaho Springs, Colorado, in preparation for the Circuits Stage of the DARPA Subterranean (SubT) Challenge.

CRAS

- DARPA Subterranean Challenge - ČVUT v Praze, FEL (CZ) — The Czech Technical University in Prague (ČVUT v Praze), Faculty of Electrical Engineering, is taking part in the American DARPA competition, where it tackles the task of autonomously searching for objects and people underground using robots and unmanned drones.

- Czech TV (from the 33rd minute)